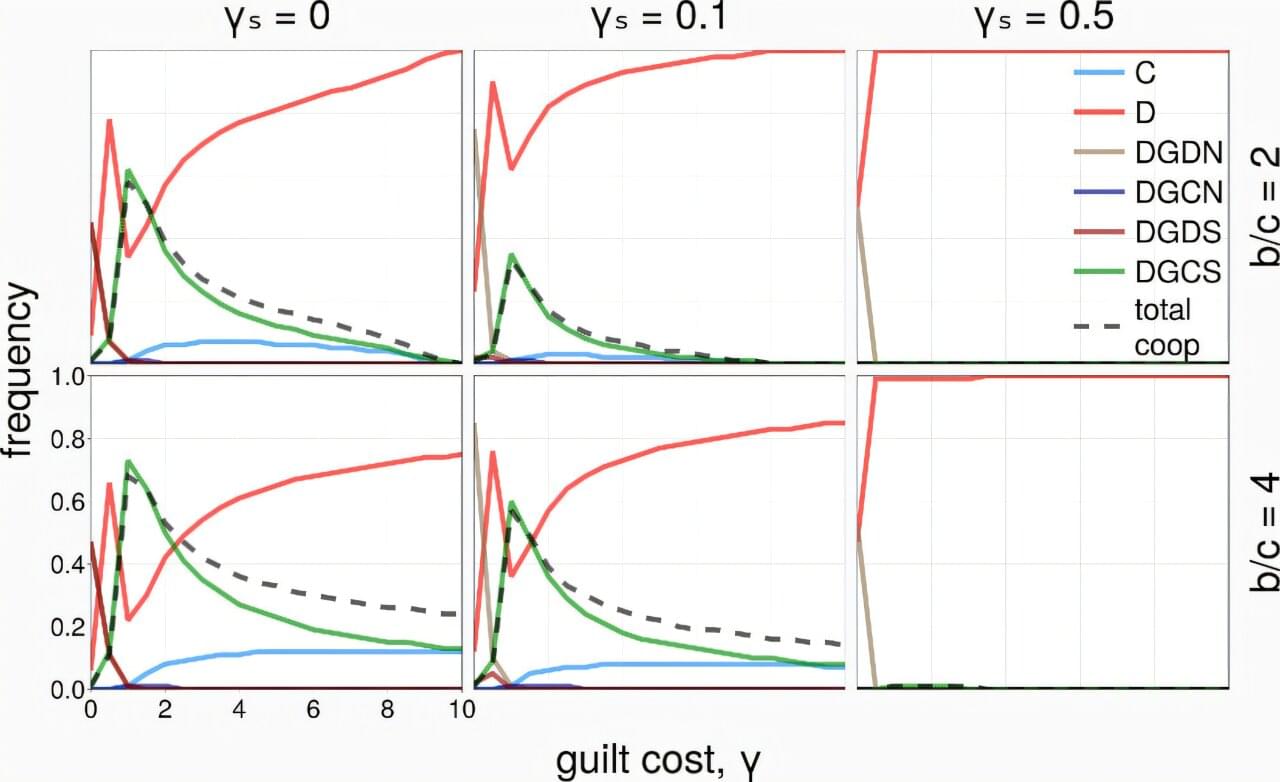

Guilt is a highly advantageous quality for society as a whole. It might not prevent an initial wrongdoing, but guilt allows humans to judge their own prior decisions as harmful and helps keep them from repeating those decisions. The internal distress caused by feelings of guilt often, but not always, results in the person taking on some kind of penance to relieve that turmoil. This might be something as simple as admitting their wrongdoing to others and accepting the slight stigma of being someone who is morally corrupt. This upfront cost might be painful at first, but it can relieve further guilt and lead to better cooperation within the group in the future.

As we interact more and more with artificial intelligence and use it in almost every aspect of our modern society, finding ways to instill ethical decision-making becomes more critical. In a recent study, published in the Journal of the Royal Society Interface, researchers used game theory to explore how and when guilt evolves in multi-agent systems.

The researchers used the “prisoner’s dilemma,” a game in which two players must each choose between cooperating and defecting. Defecting gives an agent a higher payoff in a single round, but it requires betraying their partner, which in turn makes it more likely that the partner will also defect. However, if the game is repeated over and over, sustained cooperation results in a better payoff for both agents.
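
To make the game's payoff logic concrete, here is a minimal Python sketch of a repeated two-player version. The payoff values (5, 3, 1, 0) are conventional textbook numbers, and the two strategies (`always_defect` and `tit_for_tat`) are standard illustrations; none of them are taken from the study, which may have used different parameters and agent models.

```python
# Illustrative payoffs (assumed, not from the study), ordered T > R > P > S:
# T = temptation to defect, R = reward for mutual cooperation,
# P = punishment for mutual defection, S = "sucker's" payoff.
T, R, P, S = 5, 3, 1, 0

# PAYOFFS[(my_move, partner_move)] -> my payoff; "C" = cooperate, "D" = defect
PAYOFFS = {
    ("C", "C"): R,
    ("C", "D"): S,
    ("D", "C"): T,
    ("D", "D"): P,
}


def always_defect(partner_history):
    """Hypothetical strategy: defect no matter what the partner did."""
    return "D"


def tit_for_tat(partner_history):
    """Hypothetical strategy: cooperate first, then copy the partner's last move."""
    return partner_history[-1] if partner_history else "C"


def play(strategy_a, strategy_b, rounds):
    """Play a repeated game and return the total payoffs (score_a, score_b)."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Each strategy sees only the partner's past moves.
        move_a = strategy_a(history_b)
        move_b = strategy_b(history_a)
        score_a += PAYOFFS[(move_a, move_b)]
        score_b += PAYOFFS[(move_b, move_a)]
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print(play(always_defect, tit_for_tat, 1))   # one-shot: defector comes out ahead
    print(play(tit_for_tat, tit_for_tat, 10))    # sustained mutual cooperation
    print(play(always_defect, tit_for_tat, 10))  # betrayal triggers mutual defection
    print(play(always_defect, always_defect, 10))
```

Under these assumed values, a defector wins a single round 5 to 0. Over ten rounds, though, two reciprocating players earn 30 each, while a defector facing a reciprocator earns only 14 and two defectors earn 10 each.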