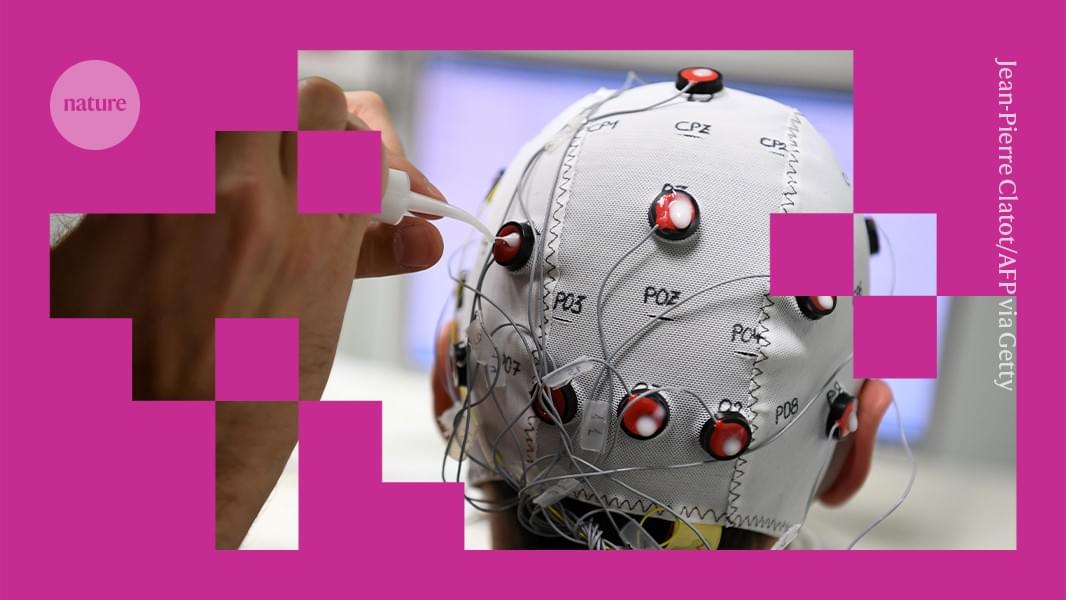

Neural networks are computing systems designed to mimic both the structure and function of the human brain. Caltech researchers have been developing a neural network built from strands of DNA rather than electronic parts, one that carries out computation through chemical reactions instead of digital signals.

An important property of any neural network is the ability to learn by taking in information and retaining it for future decisions. Now, researchers in the laboratory of Lulu Qian, professor of bioengineering, have created a DNA-based neural network that can learn. The work represents a first step toward demonstrating more complex learning behaviors in chemical systems.

A paper describing the research appears in the journal Nature on September 3. Kevin Cherry, Ph.D., is the study’s first author.