If all artists take inspiration from previous artists’ work, does AI art really pose a new threat to human creativity?

“Introducing the first soft material that can maintain a high enough electrical conductivity to support power hungry devices.” The material is also self-healing.

The newest development in softbotics will have a transformative impact on robotics, electronics, and medicine. Carmel Majidi has engineered a soft material with metal-like conductivity and self-healing properties that, for the first time, can support power-hungry devices.

“Softbotics is about seamlessly integrating robotics into everyday life, putting humans at the center,” explained Majidi, a professor of mechanical engineering.

Engineers work to integrate robots into our everyday lives with the hope of improving our mobility, health, and well-being. For example, patients might one day recover from surgery at home thanks to a wearable robot monitoring aid. To be integrated seamlessly, robots need to be able to move with us, withstand damage, and have electrical functionality without being encased in a hard structure.

Consider humanity’s astounding progress in science during the past three hundred years. Now take a deep breath and project forward three billion years. Assuming humans survive, can we even conceive of what our progeny might be like? Will we colonize the galaxy, the universe?

Watch more interviews on the future of humanity: https://closertotruth.com/video/bosni-007/?referrer=7919

Nick Bostrom is a Swedish-born philosopher with a background in theoretical physics, computational neuroscience, logic, and artificial intelligence, as well as philosophy. He is the most-cited professional philosopher in the world under the age of 50.

A Paperclip Maximizer is an example of artificial intelligence run amok performing a job, potentially seeking to turn all the Universe into paperclips. But it’s also an example of a concept called Instrumental Convergence, where two entities with wildly different ultimate goals might end up acting very much alike. This concept is very important to preparing ourselves for future automation and machine minds, and we’ll explore that today.

Visit our Website: http://www.isaacarthur.net.

Social Media:

Facebook Group: https://www.facebook.com/groups/1583992725237264/

Reddit: https://www.reddit.com/r/IsaacArthur/

Twitter: https://twitter.com/Isaac_A_Arthur. Follow us on Twitter and RT our future content.

SFIA Discord Server: https://discord.gg/53GAShE

Listen or Download the audio of this episode from Soundcloud: Episode’s Audio-only version: https://soundcloud.com/isaac-arthur-148927746/the-paperclip-maximizer.

Episode’s Narration-only version: https://soundcloud.com/isaac-arthur-148927746/the-paperclip-…ation-only.

Credits:

The Paperclip Maximizer.

Episode 201a, Season 5 E36a.

Written by:

This AI tool automatically animates, lights, and composes CG characters into a live-action scene. No complicated 3D software, no expensive production hardware—all you need is a camera.

Wonder Dynamics: https://wonderdynamics.com.

Basically, we are nearing, if not already in, the age of infinity. This means that full automation can be realized. Imagine not really needing to work to survive; instead, we could thrive and work on harder things, such as new innovations. We could automate all work, and so automate the planet to reach year million or year infinity, perhaps within days or months once full automation is realized. It could lead to even more for physics, where one could finally find the theory of everything or even the master algorithm. 😀 In the age of infinity, almost anything could be possible, including solving seemingly impossible problems.

These assistants won’t just ease the workload, they’ll unleash a wave of entrepreneurship.

GPT-4 is reportedly six times larger than GPT-3, according to a media report, and Elon Musk’s exit from OpenAI has cleared the way for Microsoft.

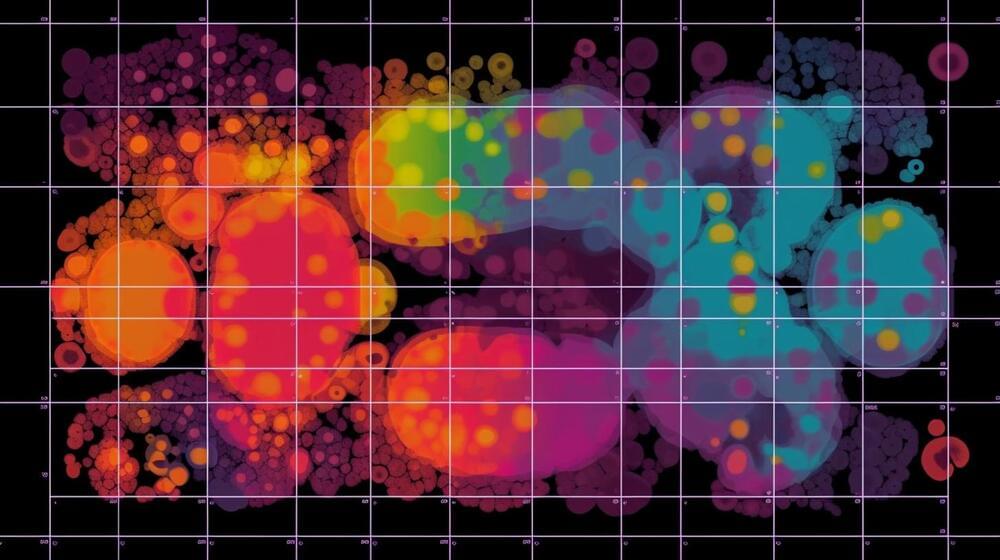

The US website Semafor, citing eight anonymous sources familiar with the matter, reports that OpenAI’s new GPT-4 language model has one trillion parameters. Its predecessor, GPT-3, has 175 billion parameters.

Semafor previously revealed Microsoft’s $10 billion investment in OpenAI and the integration of GPT-4 into Bing in January and February, respectively, before the official announcement.

Saw this coming 5 or 10 years back. Soon the concept of being a model will be nonexistent, probably by 2030. And once these AI models can be realistically animated to walk around and speak, that will be the end of actors and acting as well.

Levi’s is partnering with an AI company on computer-generated fashion models to “supplement human models.” The company frames the move as part of a “digital transformation journey” of diversity, equity, inclusion and sustainability. Although that sounds noble on the surface, Levi’s is essentially hiring a robot to generate the appearance of diversity while ridding itself of the burden of paying human beings who represent the qualities it wants to be associated with its brand.

Levi Strauss is partnering with Amsterdam-based digital model studio Lalaland.ai for the initiative. Founded in 2019, the company’s mission is “to see more representation in the fashion industry” and “create an inclusive, sustainable, and diverse design chain.” It aims to let customers see what various fashion items would look like on a person who looks like them via “hyper-realistic” models “of every body type, age, size and skin tone.”

Levi’s announcement echoes that branding, saying the partnership is about “increasing the number and diversity of our models for our products in a sustainable way.” The company continues, “We see fashion and technology as both an art and a science, and we’re thrilled to be partnering with Lalaland.ai, a company with such high-quality technology that can help us continue on our journey for a more diverse and inclusive customer experience.”