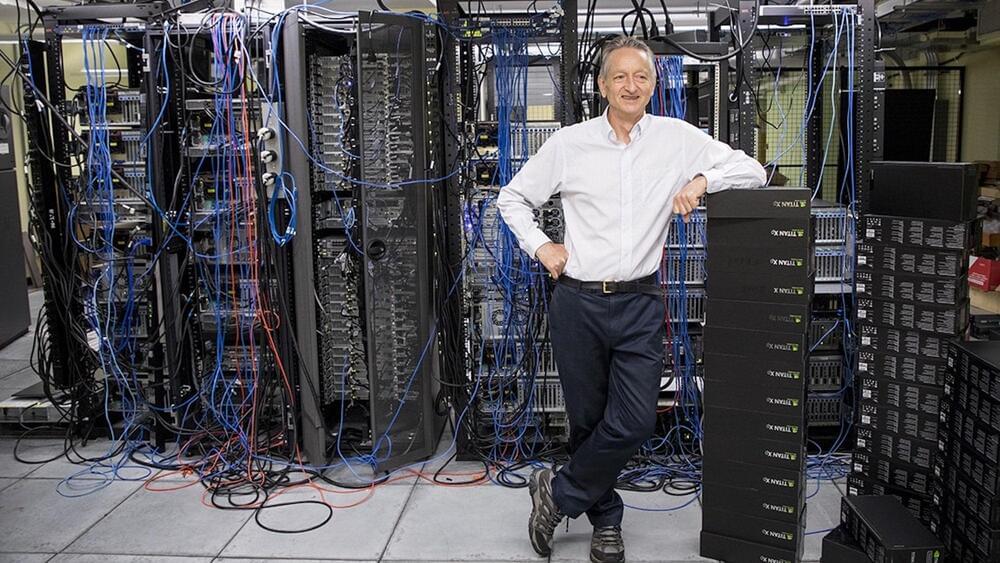

- Defining computational neuroscience
- The evolution of computational neuroscience
- Computational neuroscience in the twenty-first century
- Some examples of computational neuroscience
- The SpiNNaker supercomputer
- Frontiers in computational neuroscience
- References
- Further reading

The human brain is often likened to an extraordinarily complex supercomputer, and how it works remains one of the great unsolved mysteries of our time. Scientists in the field of computational neuroscience seek to unravel this mystery and, in the process, help solve problems in research fields as diverse as artificial intelligence (AI) and psychiatry.

Computational neuroscience is a thriving, highly interdisciplinary branch of neuroscience that uses computational simulations and mathematical models to develop our understanding of the brain. Here we look at what computational neuroscience is, how it has grown over the last thirty years, what its applications are, and where it is going.