The data centers and high-performance computers that run artificial intelligence programs, such as large language models, aren’t limited mainly by the computational power of their individual nodes. Instead, a different problem, the amount of data they can transfer between nodes, underlies the “bandwidth bottleneck” that currently limits the performance and scaling of these systems.

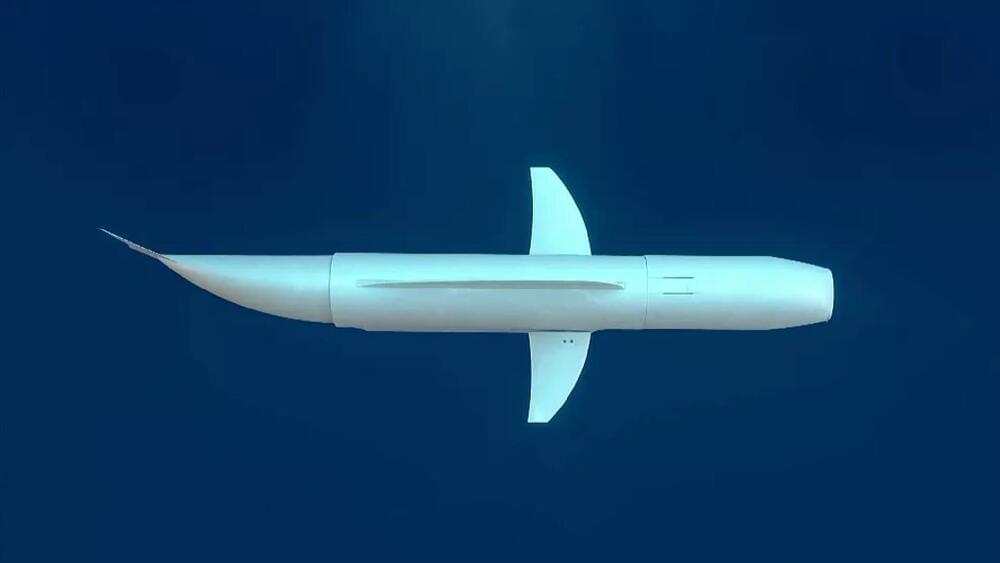

The nodes in these systems can be separated by more than a kilometer. Over such distances, metal wires dissipate much of a high-speed electrical signal’s energy as heat, so these systems transfer data over fiber-optic cables instead. Unfortunately, a substantial amount of energy is wasted converting electrical data into optical signals, and back again, each time a signal is sent from one node to another.

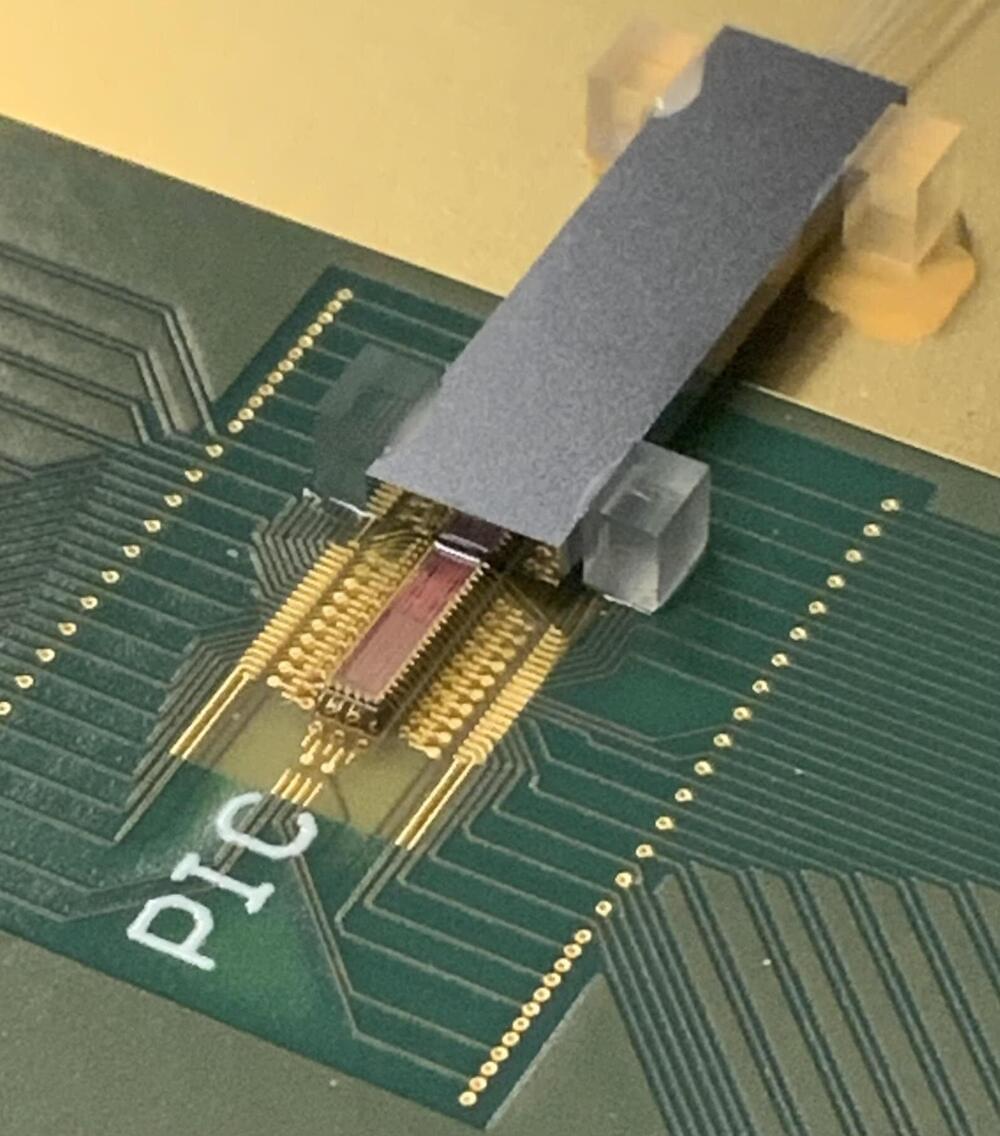

In a study published in Nature Photonics, researchers at Columbia Engineering demonstrate an energy-efficient method for transferring more data over the fiber-optic cables that connect the nodes. The technology improves on previous approaches that transmit multiple signals simultaneously over the same fiber. Instead of using a separate laser to generate each wavelength of light, the team’s chips need only a single laser to generate hundreds of distinct wavelengths, each of which can carry an independent stream of data at the same time.
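Sending independent data streams on different wavelengths over one fiber is known as wavelength-division multiplexing: each wavelength acts as its own channel, so total throughput grows with the number of wavelengths. The Python sketch below illustrates that arithmetic under stated assumptions. It models the single laser’s output as a frequency comb with evenly spaced lines (an assumption; the article does not describe the spacing), and the center frequency, line spacing, line count, and per-channel data rate are hypothetical values, not figures from the study. The function names `comb_wavelengths_nm` and `aggregate_rate_gbps` are illustrative only.

```python
# Minimal sketch of wavelength-division multiplexing (WDM) with a frequency comb.
# Assumptions (hypothetical, for illustration only):
#   - comb lines are evenly spaced in frequency around a center frequency
#   - every line carries an independent data stream at the same rate

C = 299_792_458.0  # speed of light in vacuum, m/s


def comb_wavelengths_nm(center_thz: float, spacing_ghz: float, num_lines: int) -> list[float]:
    """Return vacuum wavelengths (nm) of num_lines evenly spaced comb lines,
    ordered from lowest to highest frequency (i.e. longest to shortest wavelength)."""
    half = (num_lines - 1) / 2
    freqs_hz = [
        center_thz * 1e12 + (n - half) * spacing_ghz * 1e9
        for n in range(num_lines)
    ]
    return [C / f * 1e9 for f in freqs_hz]


def aggregate_rate_gbps(num_lines: int, per_channel_gbps: float) -> float:
    """Total throughput when every wavelength carries its own independent stream."""
    return num_lines * per_channel_gbps


# Hypothetical example: 200 lines, 100 GHz apart, centered near 1550 nm,
# each carrying 16 Gb/s.
lines = comb_wavelengths_nm(center_thz=193.4, spacing_ghz=100.0, num_lines=200)
print(f"{len(lines)} channels from {min(lines):.2f} nm to {max(lines):.2f} nm")
print(aggregate_rate_gbps(200, 16.0), "Gb/s")  # prints: 3200.0 Gb/s
```

Under these assumptions, doubling the number of comb lines doubles the aggregate rate without adding lasers, which is the scaling advantage the article describes.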