A robot fish named Belle could be the “spy on the marine life” that researchers have been looking for.

Artificial intelligence (AI) proponents say the technology will transform every industry from healthcare and automobiles to yogurt.

Danone S.A., a renowned multinational food company, plans to use AI and a top-secret robot stomach to revolutionize its operations and drive growth in the highly competitive yogurt market.

By harnessing the power of AI, Danone aims to improve its products and operations on several fronts to boost profits and keep the company at the forefront of the yogurt industry.

Welcome to an enlightening journey through the 7 Stages of AI, a comprehensive exploration into the world of artificial intelligence. If you’ve ever wondered about the stages of AI, or are interested in how the 7 stages of artificial intelligence shape our technological world, this video is your ultimate guide.

Artificial Intelligence (AI) is revolutionizing our daily lives and industries across the globe. Understanding the 7 stages of AI, from rudimentary algorithms to advanced machine learning and beyond, is vital to fully grasp this complex field. This video delves deep into each stage, providing clear explanations and real-world examples that make the concepts accessible for everyone, regardless of their background.

Throughout this video, we demystify the fascinating progression of AI, starting from the basic rule-based systems, advancing through machine learning, deep learning, and the cutting-edge concept of self-aware AI. Not only do we discuss the technical aspects of these stages, but we also explore their societal implications, making this content valuable for technologists, policy makers, and curious minds alike.

Leveraging our in-depth knowledge, we illuminate the intricate complexities of artificial intelligence’s 7 stages. By the end of the video, you’ll have gained a robust understanding of the stages of AI, the applications and potential of each stage, and the future trajectory of this game-changing technology.

An international team of explorers, led by Japan, will send a tiny robotic rover to the Martian moon of Phobos very soon.

A Japanese-led mission to Mars has just signed an agreement with German and French partners to build a rover to explore Phobos.

The rover will be transported on Japan’s planned Martian Moons eXploration (MMX) mission and operate on Phobos’ hostile, low-gravity surface.

A newly created real-life Transformer is capable of reconfiguring its body to achieve eight distinct types of motion and can autonomously assess the environment it faces to choose the most effective combination of motions to maneuver.

The new robot, dubbed M4 (for Multi-Modal Mobility Morphobot), can roll on four wheels, turn its wheels into rotors and fly, stand on two wheels like a meerkat to peer over obstacles, “walk” by using its wheels like feet, use two rotors to help it roll up steep slopes on two wheels, tumble, and more.

A robot with such a broad set of capabilities would have applications ranging from the transport of injured people to a hospital to the exploration of other planets, says Mory Gharib (PhD ’83), the Hans W. Liepmann Professor of Aeronautics and Bioinspired Engineering and director of Caltech’s Center for Autonomous Systems and Technologies (CAST), where the robot was developed.

AI-powered augmented reality devices will give human beings ‘superpowers’ to detect lies and ‘read’ emotions of people they are talking to, a futurist has claimed.

Speaking exclusively to DailyMail.com, Devin Liddell, Principal Futurist at Teague, said that computer vision systems built into headsets or glasses will pick up emotional cues that un-augmented human eyes and instincts cannot see.

The technology would let people know whether their date is lying or sexually aroused, and would help them spot a lying politician.

And so it begins. I’ve already seen one job on Glassdoor that requires knowledge of AI, and I’ve only barely started looking. I wasn’t even specifically looking for AI jobs. I’ve seen other articles saying ChatGPT can be used to make thousands in side hustles. So far, so good. I’ll have to check out those side hustles and see if I can make use of those articles. Just one job is enough for me. One article claimed some jobs will pay you as much as 800k if you know AI.

Generative artificial intelligence is all the rage now, but the AI boom is not just hype, said Dan Ives from Wedbush Securities, who calls it the “fourth industrial revolution playing out.”

“This is something I call a 1995 moment, parallel with the internet. I do not believe that this is a hype cycle,” the managing director and senior equity research analyst told CNBC’s “Squawk Box Asia” on Wednesday.

The fourth industrial revolution refers to how technological advancements like artificial intelligence, autonomous vehicles and the internet of things are changing the way humans live, work and relate to one another.

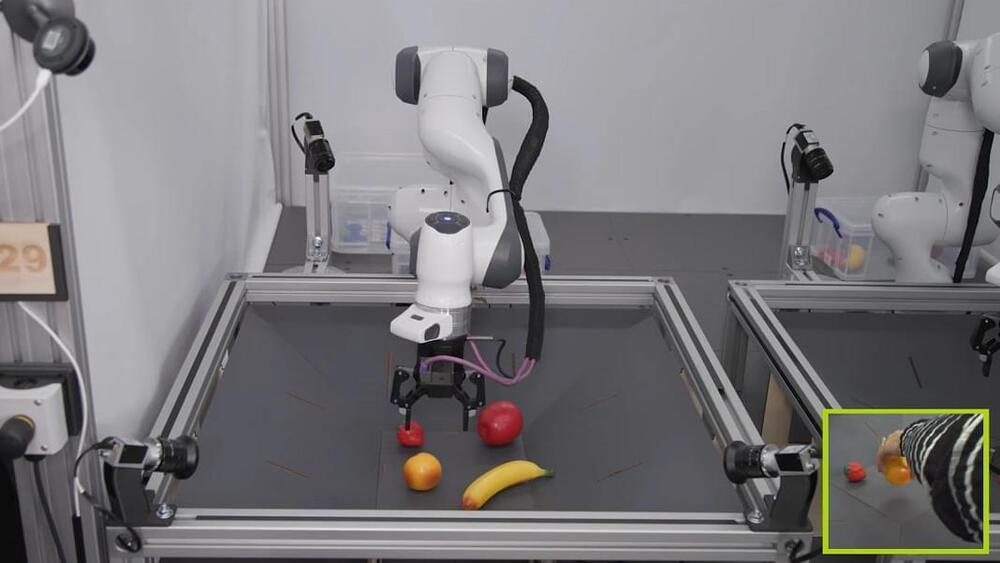

While autonomous robots have started to move out of the lab and into the real world, they remain fragile. Slight changes in the environment or lighting conditions can easily throw off the AI that controls them, and these models have to be extensively trained on specific hardware configurations before they can carry out useful tasks.

This lies in stark contrast to the latest LLMs, which have proven adept at generalizing their skills to a broad range of tasks, often in unfamiliar contexts. That’s prompted growing interest in seeing whether the underlying technology—an architecture known as a transformer—could lead to breakthroughs in robotics.

In new results, researchers at DeepMind showed that a transformer-based AI called RoboCat can not only learn a wide range of skills, it can also readily switch between different robotic bodies and pick up new skills much faster than normal. Perhaps most significantly, it’s able to accelerate its learning by generating its own training data.

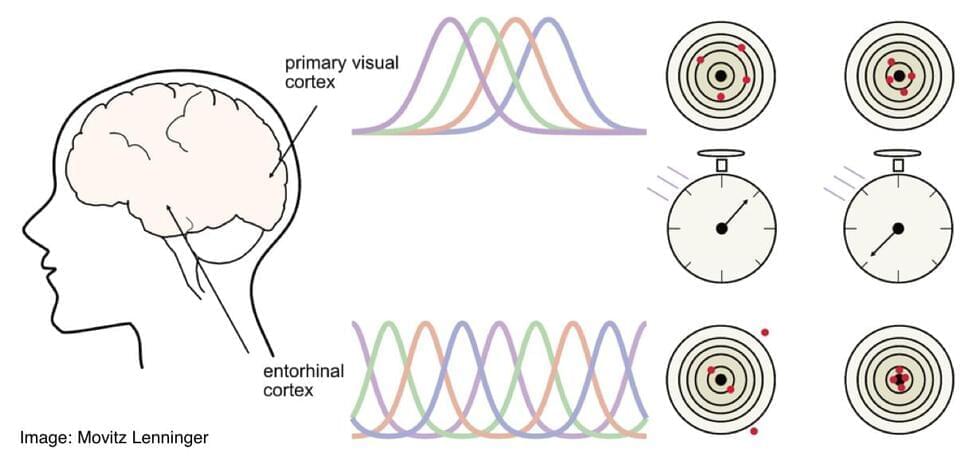

When an animal takes notice of an approaching figure, it needs to determine what it is, and quickly. In nature, competition and survival dictate that it’s better to think fast—that is, for the brain to prioritize processing speed over accuracy. A new study shows that this survival principle may already be wired in the way the brain processes sensory information.

Neuroscientist Arvind Kumar, an associate professor at KTH Royal Institute of Technology, says that the study offers a new view of neural coding of different types of inputs in the brain. Kumar and fellow KTH neuroscientist Pawel Herman collaborated with KTH information theorists Movitz Lenninger and Mikael Skoglund to study input processing in the brain using information theory and computer models of the brain.

The new study surprisingly shows that initial visual processing is “quick but sloppy” in comparison to information processing in other parts of the brain’s vast neural network, where accuracy is prioritized over speed. The paper is published in the journal eLife.