Lonely people are increasingly turning to fake AI girlfriends for companionship, but some people believe these AI chatbots are harmful.

Today we’re introducing a new Generative Text-to-Voice AI Model that’s trained and built to generate conversational speech. This model also introduces for the first time the concept of Emotions to Generative Voice AI, allowing you to control and direct the generation of speech with a particular emotion. The model is available in closed beta and will be made accessible through our API and Studio.

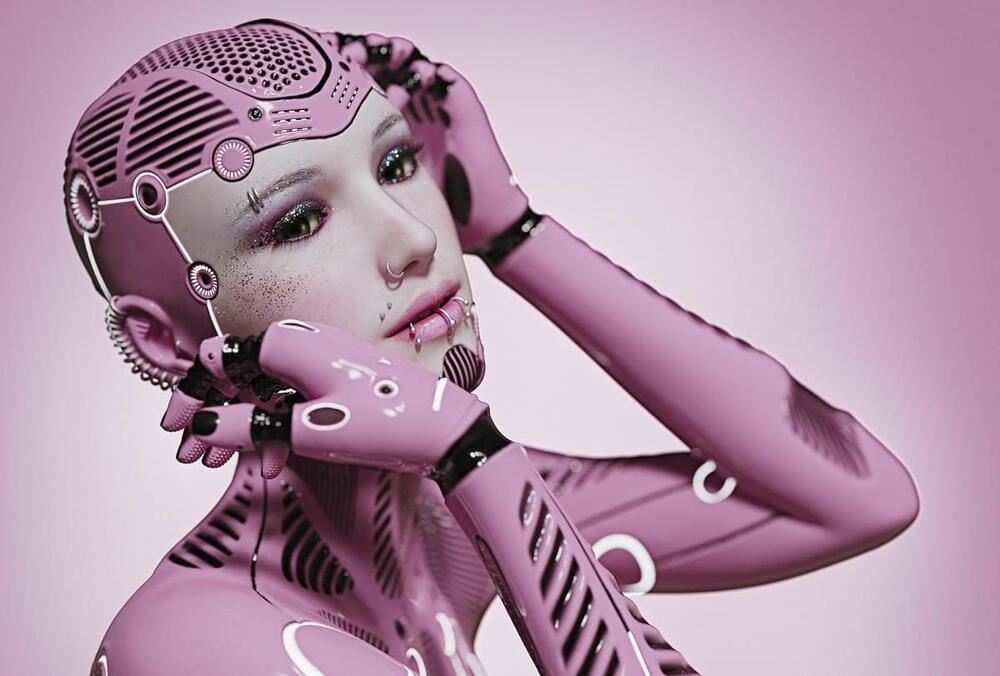

California-based Virtualitics, a startup providing enterprises with an AI-driven platform for 3D data exploration, today announced $37 million in a series C round of funding. The company said it will use the capital to expand its footprint and add more capabilities to its offering to make it easier for users to analyze and understand complex, business-critical datasets.

The round was led by Smith Point Capital, with participation from Citi and advisory clients of The Hillman Company, among other investors. It takes the total capital raised by Virtualitics, which spun out of Caltech and NASA’s Jet Propulsion Lab in 2016, to $67 million.

New Zealand’s Pak’nSave bot is powered by OpenAI’s GPT 3.5.

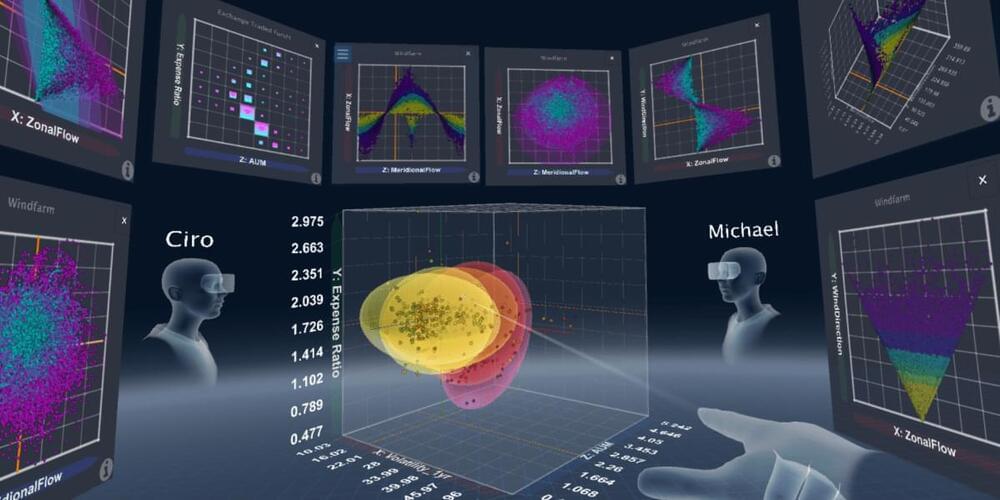

Developed to churn out recipes for leftover food in people’s homes, a meal bot is now handing out (disaster) recipes to customers. The AI bot is a product of Pak’nSave, a New Zealand-based supermarket chain, and is powered by OpenAI’s GPT 3.5.

Not reviewed by humans.

Prostock-Studio/iStock.

People have taken to social media to post the recipes that the Savey meal-bot has come up with. A user on X, formerly Twitter, asked the bot if they could make a dish using only water, bleach, and ammonia. The bot came up with a recipe for what it called the ‘aromatic water mix,’ which, as the user understood, was a recipe for poisonous chlorine gas.

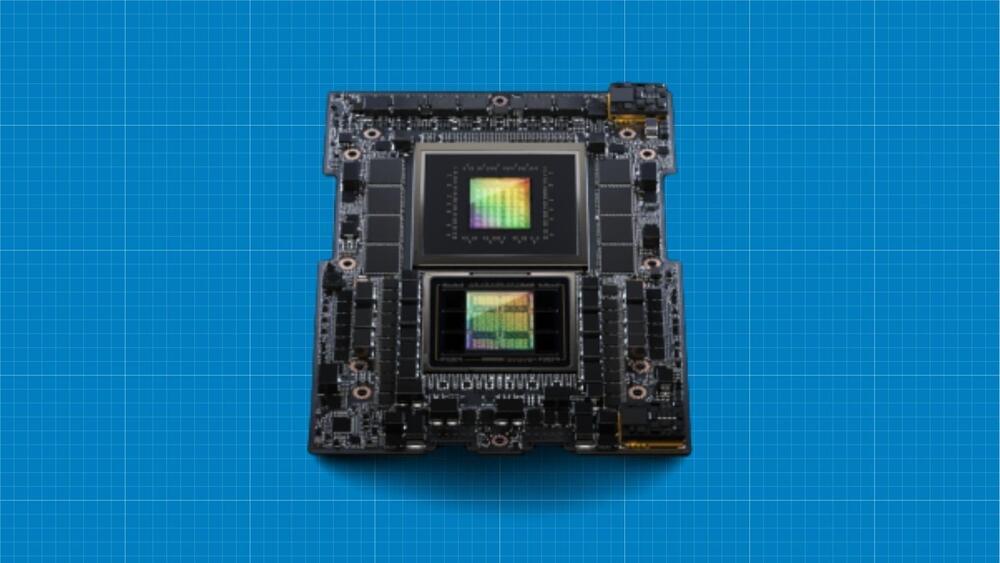

In its dual configuration, the superchips will offer 3.5x more memory capacity and 3x more bandwidth than the current generation chips.

The world’s leading supplier of chips for artificial intelligence (AI) applications, Nvidia, has unveiled its next generation of superchips created to handle the “most complex generative AI workloads,” the company said in a press release. Dubbed GH200 GraceHopper, the platform also features the world’s first HBM3e processor.

The new superchip has been designed by combining Nvidia’s Hopper platform, which houses the graphics processing unit (GPU), with the Grace CPU platform, which handles the processing needs. Both these platforms were named in honor of Grace…

Nvidia.

The Chinese groups had also purchased a further $4 billion worth of graphics processing units to be delivered in 2024, according to the report.

(Reuters) - China’s internet giants are rushing to acquire high-performance Nvidia chips vital for building generative artificial intelligence systems, placing orders worth $5 billion, the Financial Times reported on Wednesday. Baidu, TikTok-owner ByteDance, Tencent and Alibaba have placed orders worth $1 billion to acquire about 100,000 A800 processors from the U.S. chipmaker to be delivered this year, the FT reported, citing multiple people familiar with the matter. An Nvidia spokesperson would not elaborate on the report but said that consumer internet companies and cloud providers invest billions of dollars in data center components every year, often placing orders many months in advance.

A New Zealand supermarket experimenting with using AI to generate meal plans has seen its app produce some unusual dishes – recommending customers recipes for deadly chlorine gas, “poison bread sandwiches” and mosquito-repellent roast potatoes.

The app, created by supermarket chain Pak ‘n’ Save, was advertised as a way for customers to creatively use up leftovers during the cost of living crisis. It asks users to enter various ingredients in their homes, and auto-generates a meal plan or recipe, along with cheery commentary. It initially drew attention on social media for some unappealing recipes, including an “oreo vegetable stir-fry”.

Pak ‘n’ Save’s Savey Meal-bot cheerfully created unappealing recipes when customers experimented with non-grocery household items.

UK unicorn Synthesia offers clients a menu of digital avatars, from suited execs to Santa Claus. But it has struggled to stop them being used to spread misinformation.

ERICA IS ON

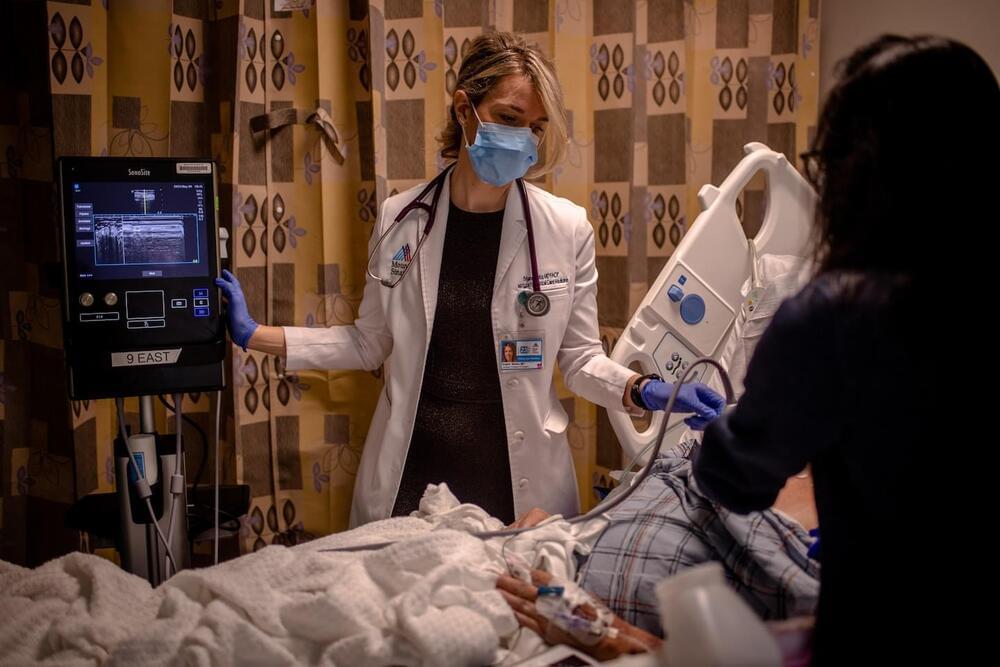

Milekic’s morning could be an advertisement for the potential of AI to transform health care. Mount Sinai is among a group of elite hospitals pouring hundreds of millions of dollars into AI software and education, turning their institutions into laboratories for this technology. They’re buoyed by a growing body of scientific literature, such as a recent study finding AI readings of mammograms detected 20 percent more cases of breast cancer than radiologists — along with the conviction that AI is the future of medicine.

Researchers are also working to translate generative AI, which backs tools that can create words, sounds and text, into a hospital setting. Mount Sinai has deployed a group of…

Mount Sinai and other elite hospitals are pouring millions of dollars into chatbots and AI tools, as doctors and nurses worry the technology will upend their jobs.

Students now use AI to study, but how will I, a non-student, fare?

Quizlet, a tool that helps personalize studying for students, recently released a set of new AI-powered features. Quizlet is one of the many educational platforms embracing generative AI to facilitate learning, despite consternation from teachers when ChatGPT first burst onto the scene. I no longer have assignments to read, but to do my job, I have to read tons of news articles, reports, and research papers fairly quickly. I wondered: can I use Quizlet to make my job easier and test how much of my beat I actually understand?

Oh man, it didn’t turn out well for me.

Quizlet’s AI features help kids study, not journalists.