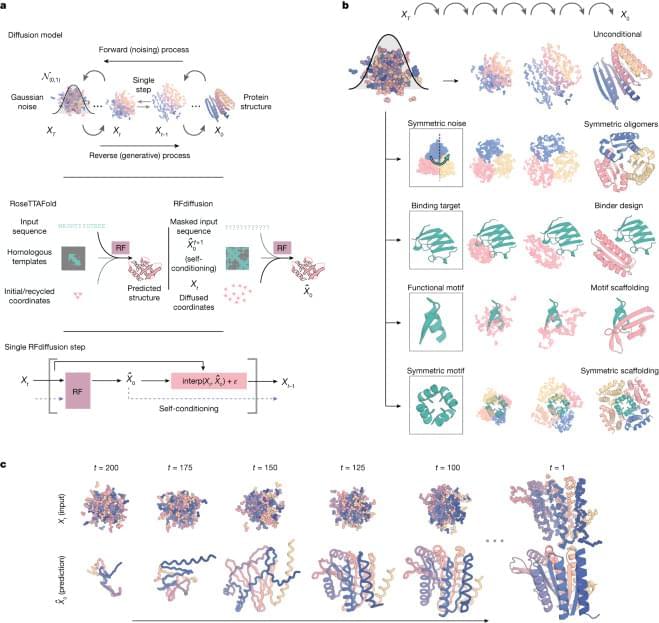

A landmark paper by Watson et al. from David Baker’s group. They developed RFdiffusion, a generative AI model for protein design. With this tool, scientists can generate novel monomers, multimers, and symmetric protein cages. It can also design proteins that bind to desired targets, as well as enzyme scaffolds that position active sites at a desired location on the structure. I would say that this technology has the potential to dramatically alter how biology and biotechnology are done. I’ve been waiting for something like this since middle school! So exciting to see protein engineering reaching this point!! #computationalbiology #proteinengineering #syntheticbiology #biotechnology #biochemistry #ai #generativeai

Fine-tuning the RoseTTAFold structure prediction network on protein structure denoising tasks yields a generative model for protein design that achieves outstanding performance on a wide range of protein structure and function design challenges.