A new AI method can distinguish between biotic and abiotic samples with 90% accuracy.

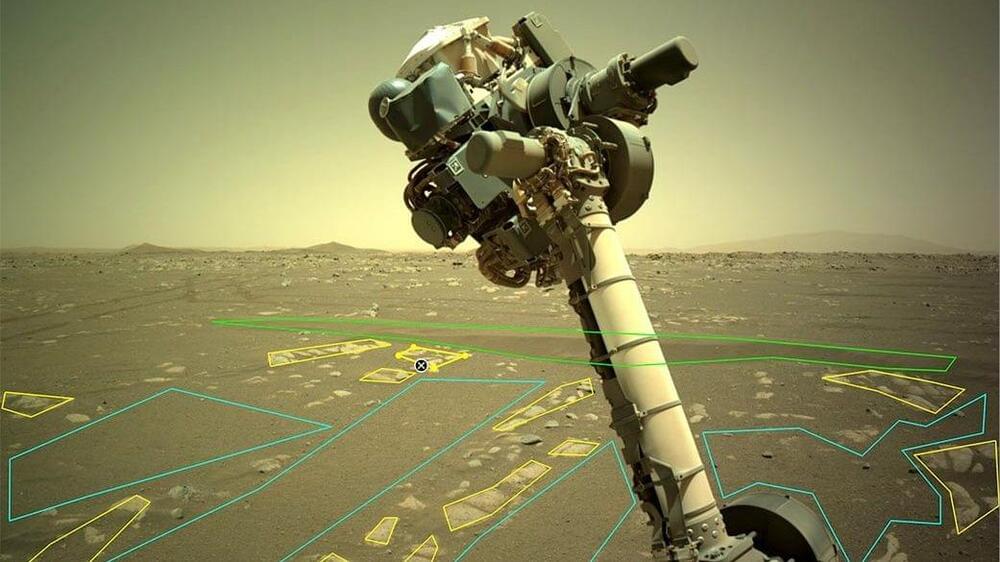

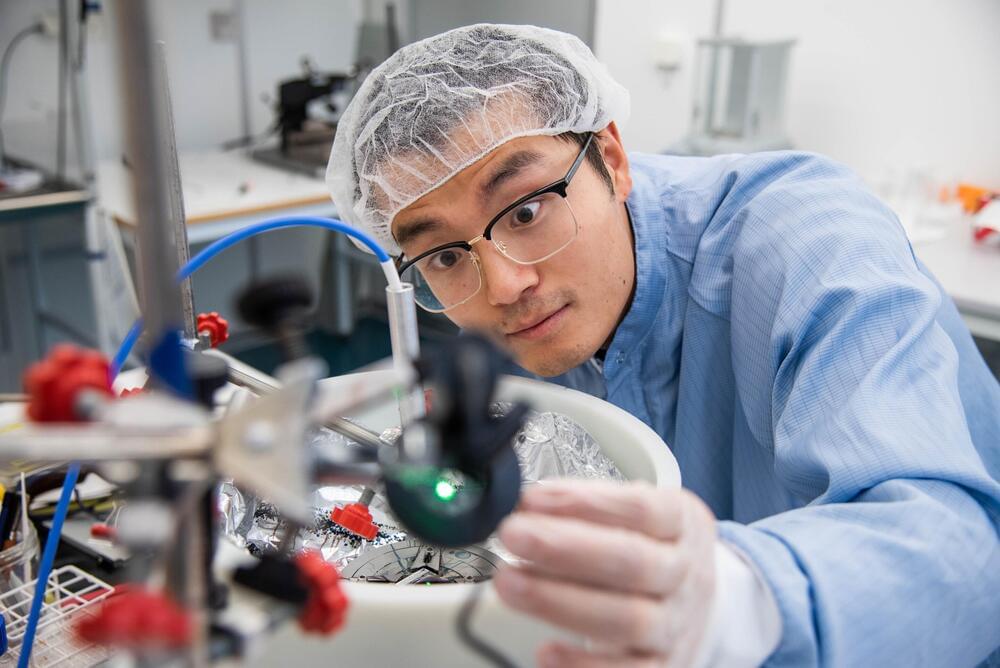

In a recent study published in Science Advances, researchers from the California Institute of Technology, led by Dr. Wei Gao, have developed a machine learning (ML)–powered 3D-printed epifluidic electronic skin for multimodal health surveillance. This wearable platform enables real-time physical and chemical monitoring of health status.

Wearable health devices have the potential to revolutionize the medical world, offering real-time tracking, personalized treatments, and early diagnosis of diseases.

However, two of the main challenges with these devices are that they don’t track data at the molecular level and that they are difficult to fabricate. Dr. Gao explained why this served as a motivation for their team.

With AI rising rapidly in everyday life, no one, not even the traditional farmer, is untouched by the technology.

A survey of the latest generation of farm tools provides a taste of just how far modern farming has come.

The Ecorobotix, a seven-foot-wide, GPS-assisted “table on wheels,” as some have described it, is a solar-battery-powered unit that roams crop fields and destroys weeds with pinpoint precision. It boasts a 95% efficiency rate with virtually no waste.

Materials used in organic solar cells can also serve as light sensors in electronics. Researchers at Linköping University, Sweden, have shown this by developing a type of sensor able to detect circularly polarized red light. Their study, published in Nature Photonics, paves the way for more reliable self-driving vehicles and other applications where night vision is important.

Some beetles with shiny wings, firefly larvae and colorful mantis shrimps reflect a particular kind of light known as circularly polarized light. This is due to microscopic structures in their shells that reflect electromagnetic light waves in a particular way.

Circularly polarized light also has many technical uses, such as satellite communication, bioimaging and other sensing technologies. This is because circularly polarized light can carry a vast amount of information: the electromagnetic field of the light beam spirals either to the right or to the left as it travels.

Source: allgord/iStock.

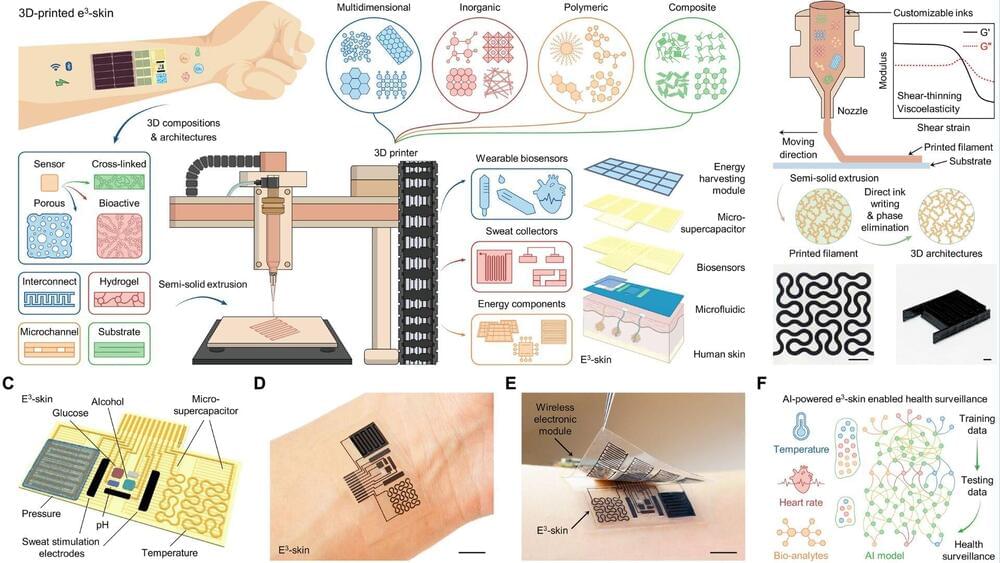

Scientists have developed a new artificial neural network that mimics the brain structures of ants and helps robots recognize and remember routes in complex natural environments, such as cornfields. The approach could improve the performance of agricultural robots that need to move through dense, plant-filled landscapes.

Bill Gates is a staunch advocate for nuclear energy, and although he no longer oversees day-to-day operations at Microsoft, its business strategy still mirrors the sentiment. According to a new job listing first spotted on Tuesday by The Verge, the tech company is currently seeking a “principal program manager” for nuclear technology tasked with “maturing and implementing a global Small Modular Reactor (SMR) and microreactor energy strategy.” Once established, the nuclear energy infrastructure overseen by the new hire will help power Microsoft’s expansive plans for both cloud computing and artificial intelligence.

Among the many, many, (many) concerns behind AI technology’s rapid proliferation is the amount of energy required to power such costly endeavors, a worry exacerbated by ongoing fears of climate collapse. Microsoft believes nuclear power is key to curbing the massive greenhouse gas emissions generated by fossil fuel industries, and it has made that belief very clear in recent months.
