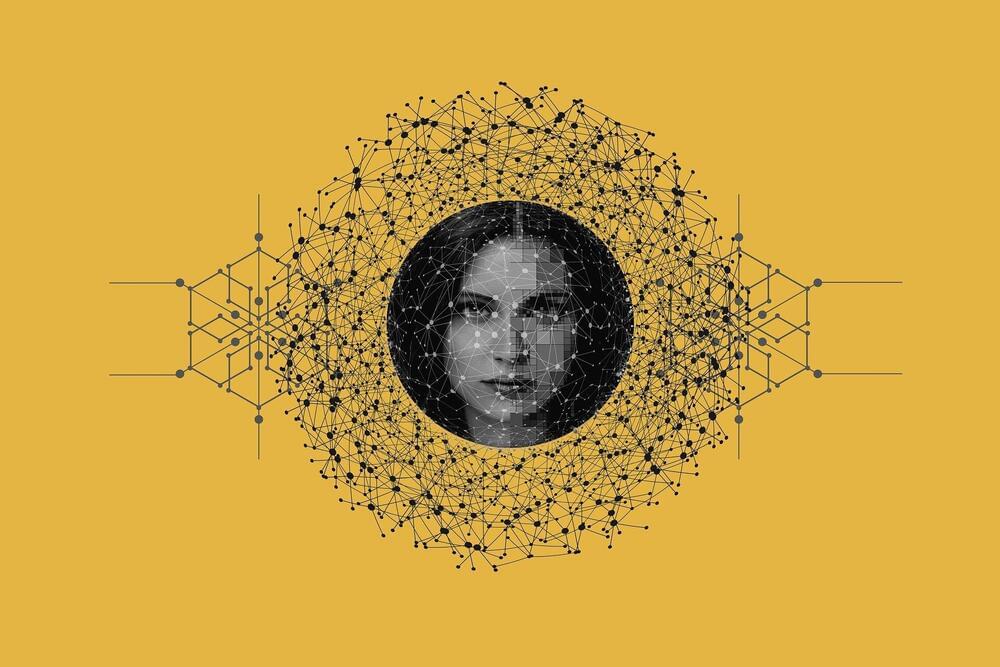

One of the more unexpected products to come out of this year’s Microsoft Ignite conference is a tool that can create a photorealistic avatar of a person and animate that avatar saying things that the person didn’t necessarily say.

The new feature, called Azure AI Speech text to speech avatar and available in public preview as of today, lets users generate videos of a speaking avatar by uploading images of the person they want the avatar to resemble and writing a script. Microsoft’s tool trains a model to drive the animation, while a separate text-to-speech model (either prebuilt or trained on the person’s voice) “reads” the script aloud.

“With text to speech avatar, users can more efficiently create video … to build training videos, product introductions, customer testimonials [and so on] simply with text input,” writes Microsoft in a blog post. “You can use the avatar to build conversational agents, virtual assistants, chatbots and more.”