Liberals prefer Soviet-style woke censorship.

Tesla is going to integrate Elon Musk’s newly launched Grok AI assistant in its electric vehicles, according to the CEO.

Earlier this year, Musk launched a new AI startup, xAI, and said that it will work closely with Tesla.

The company’s mission is “to understand the true nature of the universe”, but in practice, its first project is to build a chatbot or AI assistant à la ChatGPT.

The post included a demonstration video from the researchers, showing how a team of five drones successfully located a set of keys in an outdoor park.

“The drones showcased key abilities, including humanlike dialogue interaction, proactive environmental awareness and autonomous entity control,” the WeChat report said. Autonomous entity control refers to the drone cluster’s ability to adjust flight status in real time based on environmental feedback.

The technology equips each drone with a “human brain”, allowing them to chat with each other using natural language. This ability was developed based on a Chinese open-source large language model called InternLM, according to the report.

The future of smart glasses is about to change drastically with the upcoming release of the DigiLens ARGO range next year. These innovative smart glasses will be powered by Phantom Technology’s cutting-edge spatial AI assistant, CASSI. This partnership between DigiLens, based in Silicon Valley, and Phantom Technology, located at St John’s Innovation Centre, brings together the expertise of both companies to create a game-changing wearable device.

Phantom Technology, an AI start-up founded by a group of brilliant minds, has been working diligently over the years to develop advanced human interface technologies for AI wearables. Their breakthrough 3D imaging technology allows users to identify objects in their environment, enhancing their overall experience. With DigiLens incorporating Phantom’s patented optical platform into their consumer product, customers can expect a new era of smart glasses with unparalleled features.

CASSI, the novel spatial AI assistant designed by Phantom Technology, aims to boost productivity and awareness in enterprise settings. This innovative assistant combines computer vision algorithms with a large language model, enabling users to receive step-by-step instructions and assistance for various tasks. Imagine effortlessly locating any physical object or destination in the real world with 3D precision, using your voice to generate instructions, and seamlessly managing tasks using augmented reality.

CNBC’s Andrea Day joins Shep Smith to report on ‘robot nurses’ meant to give a hand to live nurses, who suffered under very difficult conditions during the pandemic.

Elon Musk, renowned entrepreneur and co-founder of OpenAI, made an exciting announcement on November 3. Musk revealed that xAI, his own AI startup, which is separate from OpenAI, will be releasing its first artificial intelligence, Grok, to a select group of users. In his tweet, Musk stated that Grok is “the best that currently exists” in many crucial aspects.

Grok, a groundbreaking AI assistant, promises to revolutionize information accessibility and browsing capabilities. Unlike other models, Grok has the unique ability to offer real-time access to information through the X platform. Through this platform, Grok can fetch up-to-date information from the internet on any given topic, providing users with comprehensive and timely insights.

Musk also highlighted Grok’s internet browsing capabilities, a feature it shares with OpenAI’s ChatGPT. This grants Grok the capability to not only provide information but also engage in meaningful conversations with users, making it a truly versatile AI assistant.

Provocative Silicon Valley artist Agnieszka Pilat has strong beliefs regarding the intermingling of art, religion and technology, something that keeps her fertile muse alert amid multiple presentations, exhibitions and appearances at global events such as the recent TED AI 2023 conference in San Francisco.

The Polish-born Pilat’s current SpaceX Artist-in-Residence program at the aerospace firm’s Hawthorne, California facility will likely run through 2024 and comes right before a December exhibition at the National Gallery of Victoria’s Triennial in Australia. There, for three months, her black-and-yellow Boston Dynamics robodogs will be creating autonomous artwork via a series of pre-programmed instructions.

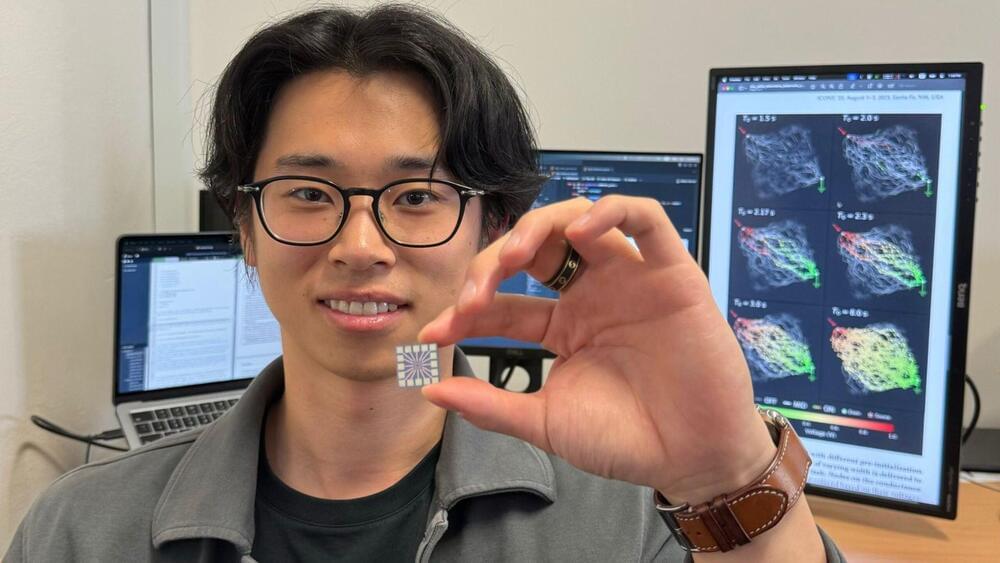

The research, which was published today in Nature Communications, is a joint effort by experts from the University of Sydney and the University of California at Los Angeles.

The artificial brain is made of nanowire networks, tiny wires whose diameters are measured in billionths of a meter. The wires form random patterns that look like the game ‘Pick Up Sticks,’ but they also act like the neural networks in our brains. These networks can process information in different ways.

The biggest companies in AI aren’t interested in paying to use copyrighted material as training data, and here are their reasons why.

The US Copyright Office is taking public comment on potential new rules around generative AI’s use of copyrighted materials, and the biggest AI companies in the world had plenty to say. We’ve collected the arguments from Meta, Google, Microsoft, Adobe, Hugging Face, StabilityAI, and Anthropic below, as well as a response from Apple that focused on copyrighting AI-written code.

There are some differences in their approaches, but the overall message for most is the same: They don’t think they should have to pay to train AI models on copyrighted work.

Most argue training with copyrighted data is fair use.