What happens when quantum computers can finally crack encryption and break into the world’s best-kept secrets? It’s called Q-Day, perhaps the worst holiday ever.

For as long as we’ve been building computers, it feels like we’ve been speaking the same language: the language of bits. Think of bits as tiny switches, each stubbornly stuck in either an ‘on’ or ‘off’ position, representing the 1s and 0s that underpin everything digital. For decades, the name of the game has been refining these switches, making them smaller and faster. We’ve ridden the wave of Moore’s Law, achieving incredible feats of computation with this binary system. But what if we’ve been seeing computation only in black and white, when a whole spectrum of possibilities exists?

As Bravyi, Dial, Gambetta, Gil, and Nazario of IBM Quantum put it in “The Future of Quantum Computing with Superconducting Qubits”:

> For the first time in history, we are seeing a branching point in computing paradigms with the emergence of quantum processing units (QPUs).

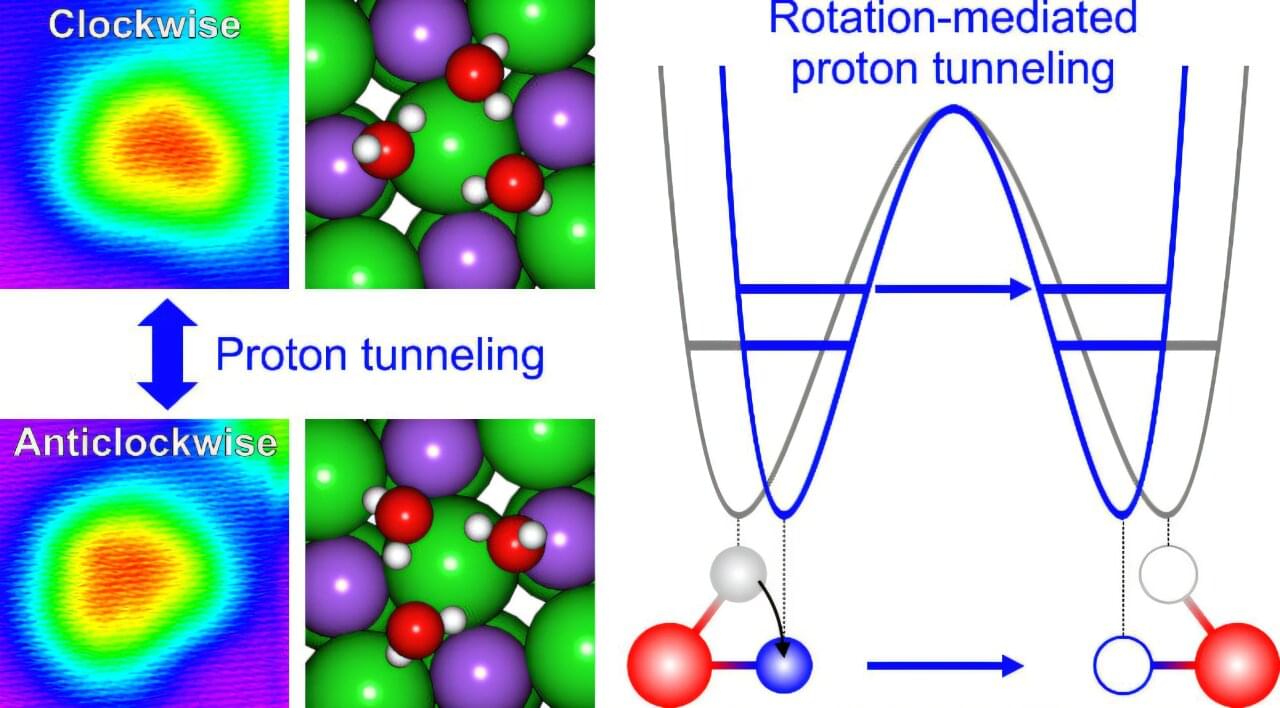

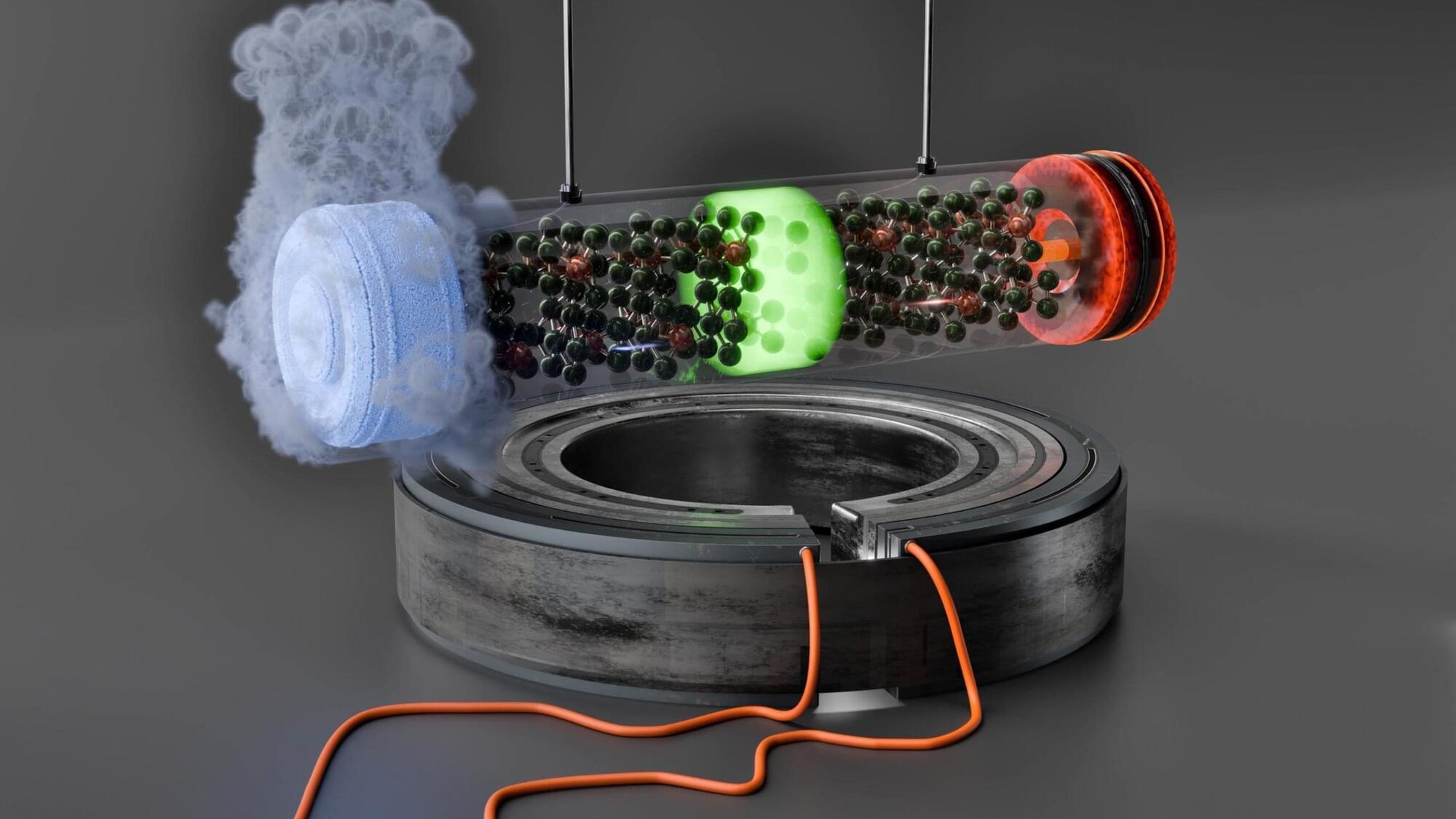

A research team led by Professor Hyung-Joon Shin from the Department of Materials Science and Engineering at UNIST has succeeded in elucidating the quantum phenomenon occurring within a triangular cluster of three water molecules. The work is published in the journal Nano Letters.

Their findings demonstrate that the collective rotational motion of water molecules enhances proton tunneling, a quantum mechanical effect in which protons (H+) pass through energy barriers rather than climbing over them. This phenomenon has implications for chemical reaction rates and the stability of biomolecules such as DNA.

The study reveals that when the rotational motion of water molecules is activated, the distances between the molecules adjust, increasing cooperativity and facilitating proton tunneling. This process allows the three protons from the water molecules to tunnel through the energy barrier collectively.

Metals, as most know them, are good conductors of electricity. That’s because the countless electrons in a metal like gold or silver move more or less freely from one atom to the next, their motion impeded only by occasional collisions with defects in the material.

There are, however, metallic materials at odds with our conventional understanding of what it means to be a metal. In so-called “bad metals” (a technical term, explains Columbia physicist Dmitri Basov), electrons run into unexpected resistance: each other. Instead of behaving like individual balls bouncing about, the electrons become correlated with one another, clumping up so that their need to move collectively impedes the flow of electrical current.

Bad metals may make for poor electrical conductors, but it turns out that they make good quantum materials. In work published on February 13 in the journal Science, Basov’s group unexpectedly observed unusual optical properties in the bad metal molybdenum oxide dichloride (MoOCl2).

Researchers have created a tiny, shape-shifting robot that swims, crawls, and glides freely in the deep sea.

Developed by a team at Beihang University in China, the robot operated at a depth of 10,600 meters in the Mariana Trench.

Using the same actuator technology, a soft gripper mounted on a submersible’s rigid arm successfully retrieved sea urchins and starfish from the South China Sea, demonstrating its capability for deep-sea exploration and specimen collection.

Joscha Bach is a cognitive scientist focusing on cognitive architectures, consciousness, models of mental representation, emotion, motivation and sociality.


- 0:00:00 Introduction
- 0:00:17 Bach’s work ethic / daily routine
- 0:01:35 What is your definition of truth?
- 0:04:41 Nature’s substratum is a “quantum graph”?
- 0:06:25 Mathematics as the descriptor of all language
- 0:13:52 Why is constructivist mathematics “real”? What’s the definition of “real”?
- 0:17:06 What does it mean to “exist”? Does “pi” exist?
- 0:20:14 The mystery of something vs. nothing. Existence is the default.
- 0:21:11 Bach’s model vs. the multiverse
- 0:26:51 Is the universe deterministic?
- 0:28:23 What determines the initial conditions, as well as the rules?
- 0:30:55 What is time? Is time fundamental?
- 0:34:21 What’s the optimal algorithm for finding truth?
- 0:40:40 Are the fundamental laws of physics ultimately “simple”?
- 0:50:17 The relationship between art and the artist’s cost function
- 0:54:02 Ideas are stories, being directed by intuitions
- 0:58:00 Society has a minimal role in training your intuitions
- 0:59:24 Why does art benefit from a repressive government?
- 1:04:01 A market case for civil rights
- 1:06:40 Fascism vs. communism
- 1:10:50 Bach’s “control / attention / reflective recall” model
- 1:13:32 What’s more fundamental: consciousness or attention?
- 1:16:02 The Chinese Room experiment
- 1:25:22 Is understanding predicated on consciousness?
- 1:26:22 Integrated Information Theory of consciousness (IIT)
- 1:30:15 Donald Hoffman’s theory of consciousness
- 1:32:40 Douglas Hofstadter’s “strange loop” theory of consciousness
- 1:34:10 Holonomic brain theory of consciousness
- 1:34:42 Daniel Dennett’s theory of consciousness
- 1:36:57 Sensorimotor theory of consciousness (embodied cognition)
- 1:44:39 What is intelligence?
- 1:45:08 Intelligence vs. consciousness
- 1:46:36 Where does free will come into play in Bach’s model?
- 1:48:46 The opposite of free will can lead to, or feel like, addiction
- 1:51:48 Changing your identity to effectively live forever
- 1:59:13 Depersonalization disorder as a result of conceiving of your “self” as illusory
- 2:02:25 Dealing with a fear of loss of control
- 2:05:00 What about heart and conscience?
- 2:07:28 How to test / falsify Bach’s model of consciousness
- 2:13:46 How has Bach’s model changed in the past few years?
- 2:14:41 Why Bach doesn’t practice lucid dreaming anymore
- 2:15:33 Dreams and GANs (a machine learning framework)
- 2:18:08 If dreams are for helping us learn, why don’t we consciously remember our dreams?
- 2:19:58 Are dreams “real”? Is all of reality a dream?
- 2:20:39 How do you practically change your experience to be most positive / helpful?
- 2:23:56 What’s more important than survival? What’s worth dying for?
- 2:28:27 Bach’s identity
- 2:29:44 Is there anything objectively wrong with hating humanity?
- 2:30:31 Practical Platonism
- 2:33:00 What “God” is
- 2:36:24 Gods are as real as you, Bach claims
- 2:37:44 What “prayer” is, and why it works
- 2:41:06 Our society has lost its future and thus our culture
- 2:43:24 What does Bach disagree with Jordan Peterson about?
- 2:47:16 The millennials are the first generation that’s authoritarian since WW2
- 2:48:31 Bach’s views on the “social justice” movement
- 2:51:29 Universal Basic Income as an answer to social inequality, or General Artificial Intelligence?
- 2:57:39 Nested hierarchy of “I”s (the conflicts within ourselves)
- 2:59:22 In the USA, innovation is “cheating” (for the most part)
- 3:02:27 Activists are usually operating on false information
- 3:03:04 Bach’s Marxist roots and lessons to his former self
- 3:08:45 BONUS BIT: On society’s problems


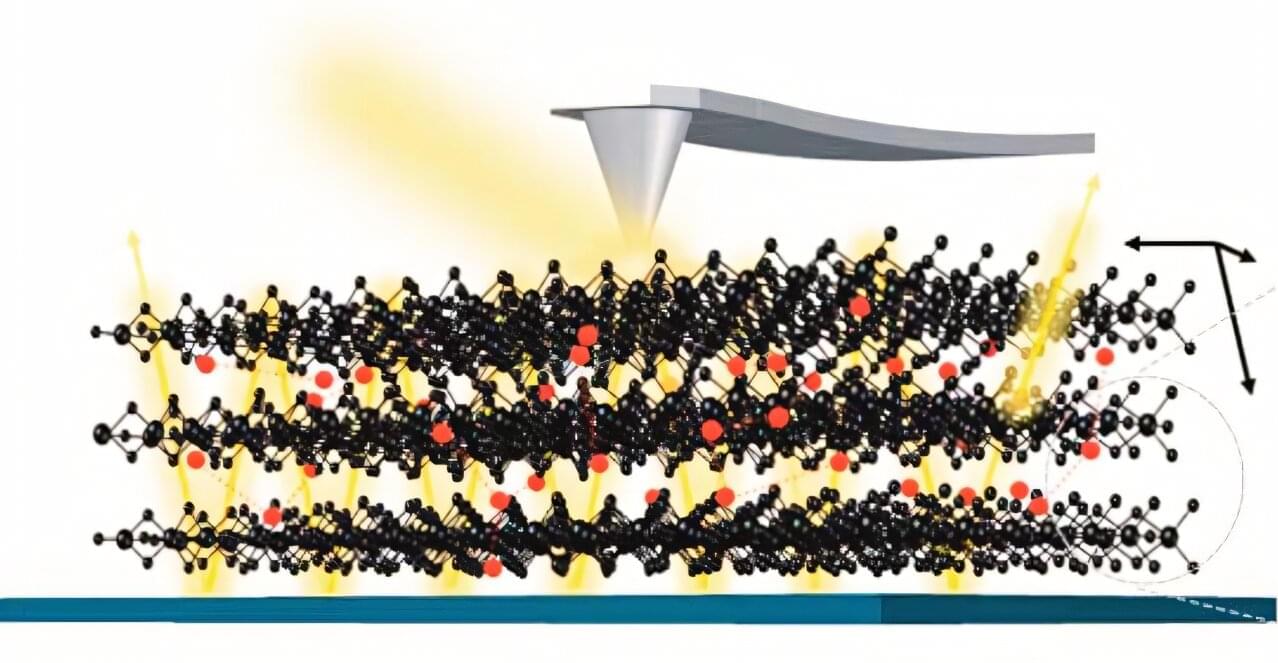

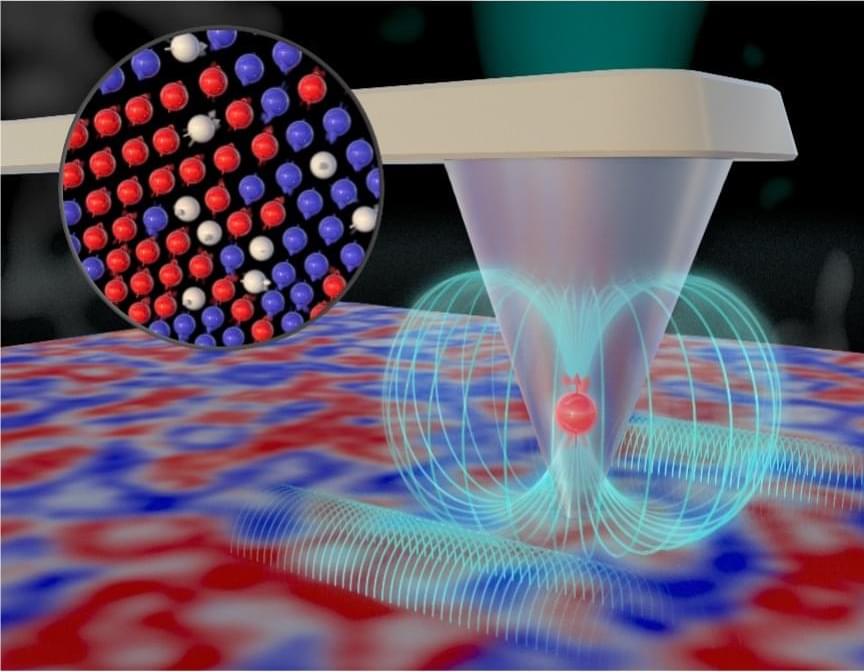

Working at nanoscale dimensions, billionths of a meter in size, a team of scientists led by the Department of Energy’s Oak Ridge National Laboratory revealed a new way to measure high-speed fluctuations in magnetic materials. Knowledge obtained by these new measurements, published in Nano Letters, could be used to advance technologies ranging from traditional computing to the emerging field of quantum computing.

Many materials undergo phase transitions, marked by abrupt, temperature-dependent changes in fundamental properties. Understanding materials’ behavior near a critical transition temperature is key to developing new technologies that take advantage of unique physical properties. In this study, the team used a nanoscale quantum sensor to measure spin fluctuations near a phase transition in a magnetic thin film. Thin films that are magnetic at room temperature are essential for data storage, sensors and electronic devices because their magnetic properties can be precisely controlled and manipulated.

The team used a specialized instrument called a scanning nitrogen-vacancy center microscope at the Center for Nanophase Materials Sciences, a DOE Office of Science user facility at ORNL. A nitrogen-vacancy center is an atomic-scale defect in diamond where a nitrogen atom takes the place of a carbon atom and a neighboring carbon atom is missing, creating a special configuration of quantum spin states. In a nitrogen-vacancy center microscope, the defect reacts to static and fluctuating magnetic fields, allowing scientists to detect signals at the single-spin level and examine nanoscale structures.
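To make “reacts to static magnetic fields” a little more concrete, here is a minimal sketch of the textbook picture of NV magnetometry, not ORNL’s analysis. It assumes a purely axial field, a zero-field splitting of about 2.87 GHz and an electron gyromagnetic ratio of about 28 GHz/T, and it ignores strain, transverse fields and hyperfine structure. The function names are hypothetical. A static field shifts the two spin resonances apart, and the size of the gap gives the field. Fluctuating fields, like the spin fluctuations in this study, are typically sensed differently, for example through changes in how quickly the NV spin relaxes.

```python
from __future__ import annotations

# Assumed constants (approximate textbook values, not taken from the study).
D_HZ = 2.87e9             # NV ground-state zero-field splitting, Hz
GAMMA_HZ_PER_T = 28.0e9   # electron gyromagnetic ratio, Hz per tesla


def odmr_frequencies(b_axial_tesla: float) -> tuple[float, float]:
    """Hypothetical helper: resonance frequencies (f_minus, f_plus) for the
    ms=0 -> ms=-1 and ms=0 -> ms=+1 transitions under an axial field.

    Only valid for weak fields, below the level crossing where the
    Zeeman shift equals the zero-field splitting."""
    shift = GAMMA_HZ_PER_T * abs(b_axial_tesla)
    if shift >= D_HZ:
        raise ValueError("field too strong for this simple weak-field model")
    return D_HZ - shift, D_HZ + shift


def axial_field_from_splitting(f_minus: float, f_plus: float) -> float:
    """Hypothetical helper: invert the model, since the gap f_plus - f_minus
    equals 2 * gamma * B."""
    return (f_plus - f_minus) / (2 * GAMMA_HZ_PER_T)


if __name__ == "__main__":
    f_lo, f_hi = odmr_frequencies(1e-3)  # 1 mT along the NV axis
    print(f"f- = {f_lo / 1e9:.3f} GHz, f+ = {f_hi / 1e9:.3f} GHz")
    print(f"recovered B = {axial_field_from_splitting(f_lo, f_hi) * 1e3:.3f} mT")
```

Under these assumptions, a 1 mT field splits the resonances by about 56 MHz. Reading the field from the gap, rather than from a single line, makes the estimate insensitive to a common shift of the splitting D.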

Consciousness is one of the most fundamental aspects of our existence, yet it remains barely understood, or even defined. Across the world, scholars from many disciplines (philosophy, science, social science, theology) have joined a quest to understand this phenomenon.

Tune in to one of the more original and controversial thinkers at the forefront of consciousness research, Stuart Hameroff, as he presents his ideas. Hameroff is an anaesthesiologist who, alongside Roger Penrose, proposes that consciousness has a structural source, arising from quantum processes in microtubules, tiny protein structures inside the brain’s neurons. He expands on this, and much more (such as evolution), in this talk. Have a listen!

To see such topics discussed live, buy tickets for our upcoming festival: https://howthelightgetsin.org/festivals/