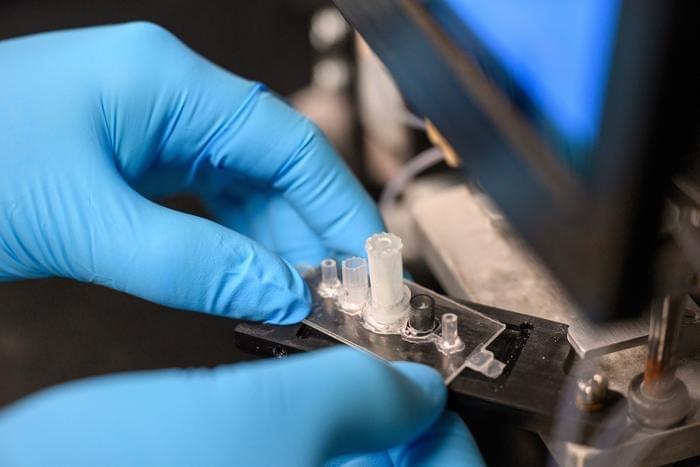

A new automated device can diagnose glioblastoma in under an hour using a novel electrokinetic biochip that detects active EGFRs from blood.

Researchers used D-Wave’s quantum computing technology to explore the relationship between prefrontal brain activity and academic achievement, particularly focusing on the College Scholastic Ability Test (CSAT) scores in South Korea.

The study, published by a multi-institutional team of researchers across Korea in Scientific Reports, relied on functional near-infrared spectroscopy (fNIRS) to measure brain signals during various cognitive tasks and then applied a quantum annealing algorithm to identify patterns correlating with higher academic performance.

The team identified several cognitive tasks that might boost CSAT scores, a finding that could have significant implications for educational strategies and cognitive neuroscience. The use of a quantum computer as a partner in the research process could also be a step towards practical applications of quantum computing in neuroimaging and cognitive assessment.

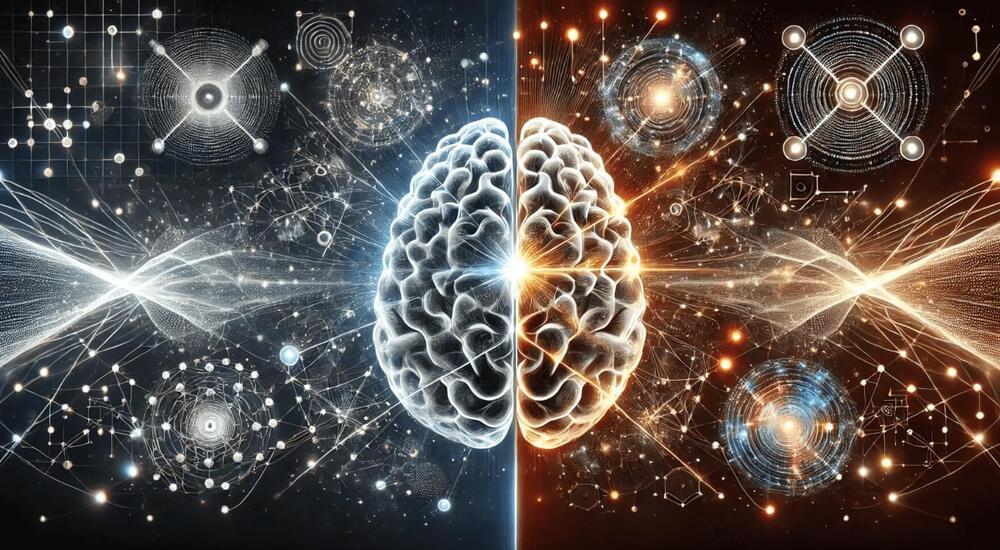

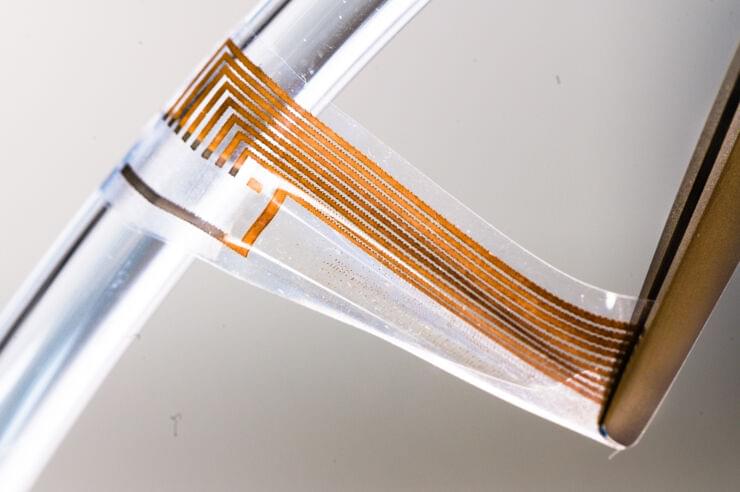

Gold does not readily lend itself to being turned into long, thin threads. But researchers at Linköping University in Sweden have now managed to create gold nanowires and develop soft electrodes that can be connected to the nervous system. The electrodes are as soft as nerves, stretchable and electrically conductive, and are projected to last for a long time in the body.

Some people have a “heart of gold,” so why not “nerves of gold”? In the future, it may be possible to use this precious metal in soft interfaces to connect electronics to the nervous system for medical purposes. Such technology could be used to alleviate conditions such as epilepsy, Parkinson’s disease, paralysis or chronic pain. However, creating an interface where electronics can meet the brain or other parts of the nervous system poses special challenges.

“The classical conductors used in electronics are metals, which are very hard and rigid. The mechanical properties of the nervous system are more reminiscent of soft jelly. In order to get an accurate signal transmission, we need to get very close to the nerve fibres in question, but as the body is constantly in motion, achieving close contact between something that is hard and something that is soft and fragile becomes a problem,” says Klas Tybrandt, professor of materials science at the Laboratory of Organic Electronics at Linköping University, who led the research.

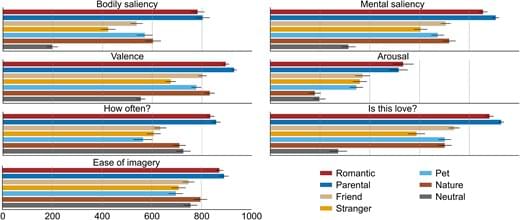

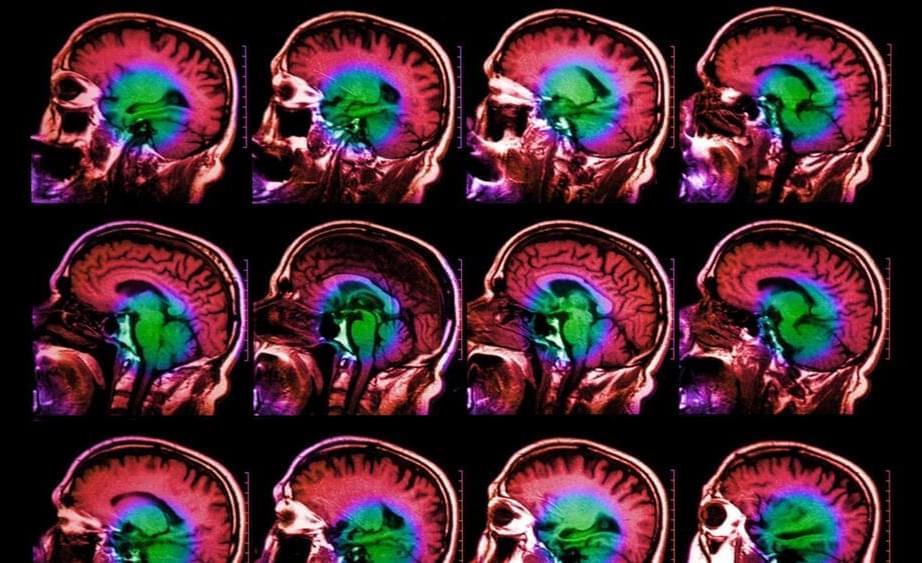

Abstract. Feelings of love are among the most significant human phenomena. Love informs the formation and maintenance of pair bonds, parent-offspring attachments, and influences relationships with others and even nature. However, little is known about the neural mechanisms of love beyond romantic and maternal types. Here, we characterize the brain areas involved in love for six different objects: romantic partner, one’s children, friends, strangers, pets, and nature. We used functional magnetic resonance imaging (fMRI) to measure brain activity, while we induced feelings of love using short stories. Our results show that neural activity during a feeling of love depends on its object. Interpersonal love recruited social cognition brain areas in the temporoparietal junction and midline structures significantly more than love for pets or nature. In pet owners, love for pets activated these same regions significantly more than in participants without pets. Love in closer affiliative bonds was associated with significantly stronger and more widespread activation in the brain’s reward system than love for strangers, pets, or nature. We suggest that the experience of love is shaped by both biological and cultural factors, originating from fundamental neurobiological mechanisms of attachment.

One of the oldest, scarcest elements in the universe has given us treatments for mental illness, ovenproof casserole dishes and electric cars. But how much do we really know about lithium?

This research uncovers diverse neural roles in processing words and complex sentences.

MIT neuroscientists have identified several brain regions responsible for processing language using functional magnetic resonance imaging (fMRI).

However, discovering the specific functions of neurons in those regions has proven difficult because fMRI, which measures changes in blood flow, doesn’t have high enough resolution to reveal what small populations of neurons are doing.

Now, using a more precise technique that involves recording electrical activity directly from the brain, MIT neuroscientists have identified different clusters of neurons that appear to process different amounts of linguistic context.

The brain-machine interface race is on. While Elon Musk’s Neuralink has garnered most of the headlines in this field, a new small and thin chip out of Switzerland makes it look downright clunky by comparison. It also works impressively well.

The chip has been developed by researchers at the Ecole Polytechnique Federale de Lausanne (EPFL) and represents a leap forward in the sizzling space of brain-machine interfaces (BMIs) – devices that are able to read activity in the brain and translate it into real-world output such as text on a screen. That’s because this particular device – known as a miniaturized brain-machine interface (MiBMI) – is extremely small, consisting of two thin chips measuring just 8 mm² total. By comparison, Elon Musk’s Neuralink device clocks in at a comparatively gargantuan size of about 23 × 8 mm (about 0.9 × 0.3 in).

Additionally, the EPFL chipset uses very little power, is reported to be minimally invasive, and consists of a fully integrated system that processes data in real time. That’s different from Neuralink, which requires the insertion of 64 electrodes into the brain and carries out its processing via an app located on a device outside of the brain.

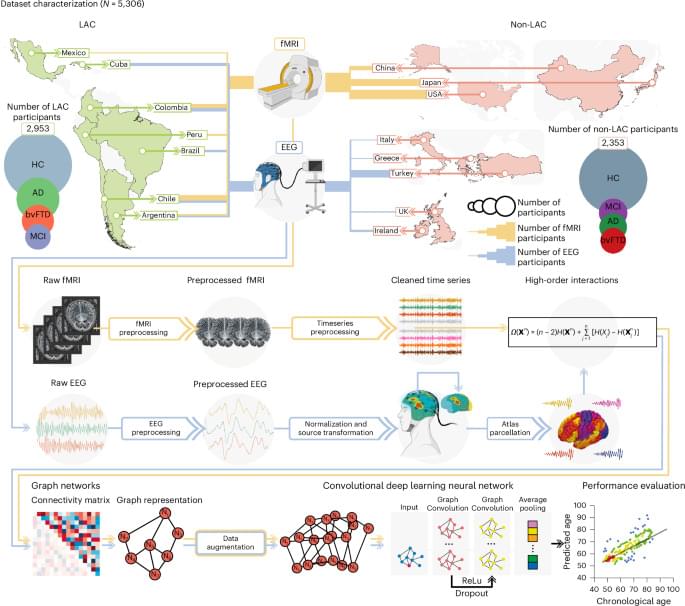

The brain undergoes dynamic functional changes with age [1, 2, 3].

Analyses of neuroimaging datasets from 5,306 participants across 15 countries found generally larger brain-age gaps in Latin American compared with non-Latin American populations, which were influenced by disparities in socioeconomic and health-related factors.

A new Nature Human Behaviour study, jointly led by Dr. Margherita Malanchini at Queen Mary University of London and Dr. Andrea Allegrini at University College London, has revealed that non-cognitive skills, such as motivation and self-regulation, are as important as intelligence in determining academic success. These skills become increasingly influential throughout a child’s education, with genetic factors playing a significant role.

The research, conducted in collaboration with an international team of experts, suggests that fostering non-cognitive skills alongside cognitive abilities could significantly improve educational outcomes.

“Our research challenges the long-held assumption that intelligence is the primary driver of academic achievement,” says Dr. Malanchini, Senior Lecturer in Psychology at Queen Mary University of London.