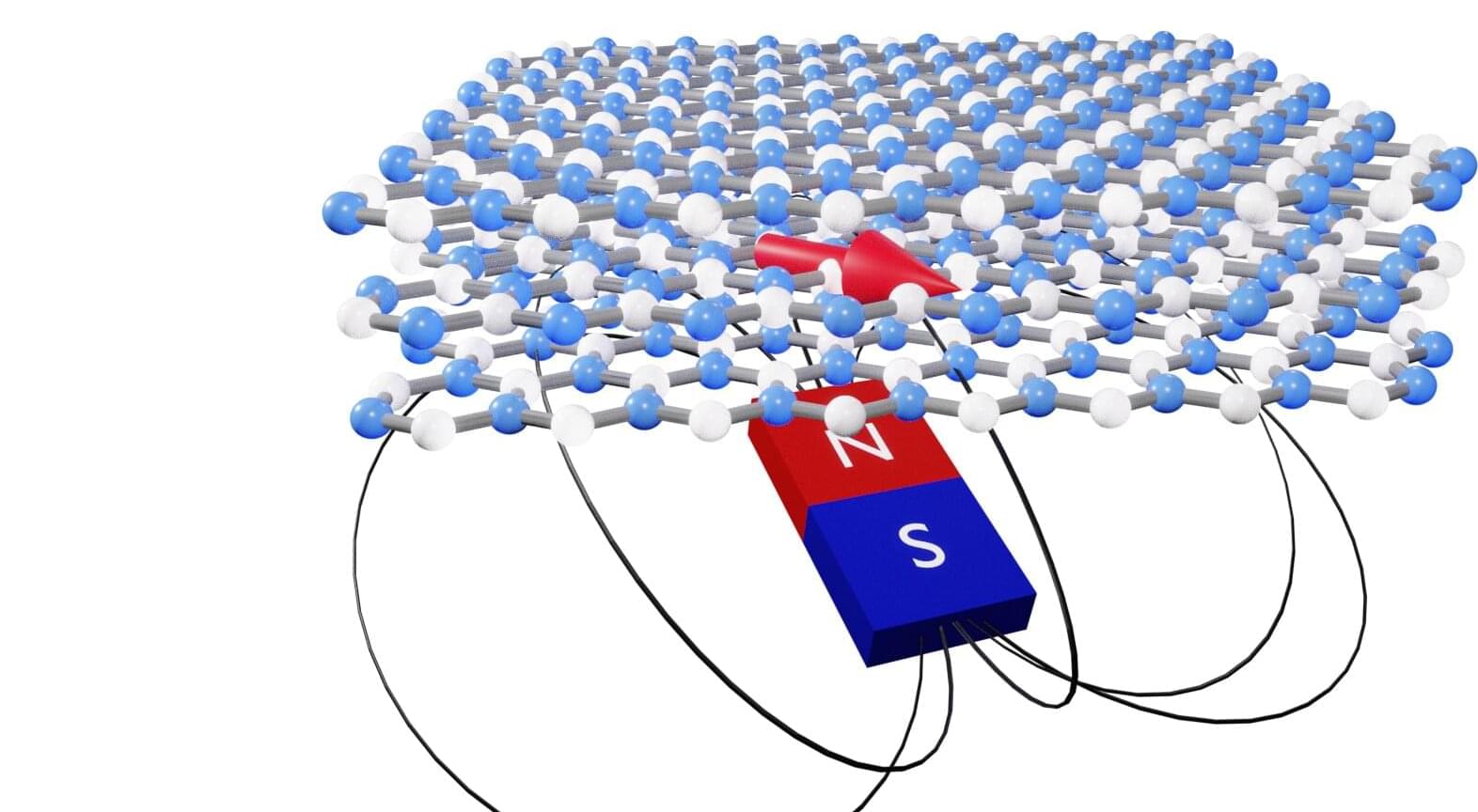

A team of physicists at the University of Cambridge has unveiled a breakthrough in quantum sensing by demonstrating the use of spin defects in hexagonal boron nitride (hBN) as powerful, room-temperature sensors capable of detecting vectorial magnetic fields at the nanoscale. The findings, published in Nature Communications, mark a significant step toward more practical and versatile quantum technologies.

“Quantum sensors allow us to detect nanoscale variations of various quantities. In the case of magnetometry, quantum sensors enable nanoscale visualization of properties like current flow and magnetization in materials, leading to the discovery of new physics and functionality,” said Dr. Carmem Gilardoni, co-first author of this study at Cambridge’s Cavendish Laboratory.

“This work takes that capability to the next level using hBN, a material that’s not only compatible with nanoscale applications but also offers new degrees of freedom compared to state-of-the-art nanoscale quantum sensors.”