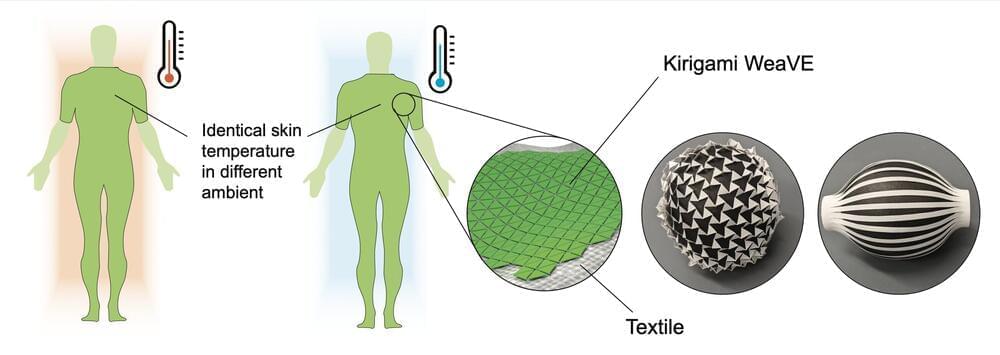

A study published in PNAS Nexus describes a fabric that can be switched between two states to stabilize radiative heat loss and keep the wearer comfortable across a range of temperatures.

Po-Chun Hsu, Jie Yin, and colleagues designed a fabric made of a layered semi-solid electrochemical cell deployed on nylon, which is cut in a kirigami pattern so that it can stretch and move with the wearer’s body. Modern clothes are made with a variety of insulating or breathable fabrics, but each fabric offers only one thermal mode, determined by the fabric’s emissivity: a measure of how effectively its surface emits thermal radiation.

The active material in the fabric can be electrically switched between two states—a transmissive dielectric state and a lossy metallic state—each with different emissivity. The fabric can thus keep the wearer comfortable by adjusting how much body heat is retained and how much is radiated away. A user would feel the same skin temperature whether the external temperature was 22.0°C (71.6°F) or 17.1°C (62.8°F). The authors call this fabric a “wearable variable-emittance device,” or WeaVE, and have configured it to be controlled with a smartphone app.
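To make the role of emissivity concrete, here is a minimal sketch based on the textbook gray-body form of the Stefan-Boltzmann law. It is not the authors' model. It ignores convection, conduction, and the fabric's layered structure, and it treats the radiating surface as held at a fixed temperature. The function names, the 33 °C surface temperature, and the 0.8 reference emissivity are illustrative assumptions, not values from the study. Only the two ambient temperatures come from the article.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def c_to_k(temp_c):
    """Convert degrees Celsius to kelvin."""
    return temp_c + 273.15


def net_radiative_flux(emissivity, surface_c, ambient_c):
    """Net radiated power per unit area (W/m^2) for a gray surface.

    Simplified model: q = emissivity * sigma * (T_surface^4 - T_ambient^4).
    """
    t_surface = c_to_k(surface_c)
    t_ambient = c_to_k(ambient_c)
    return emissivity * SIGMA * (t_surface**4 - t_ambient**4)


def matching_emissivity(eps_ref, surface_c, ambient_ref_c, ambient_new_c):
    """Emissivity that yields the same net flux at ambient_new_c
    as eps_ref yields at ambient_ref_c, for the same surface temperature."""
    target = net_radiative_flux(eps_ref, surface_c, ambient_ref_c)
    return target / net_radiative_flux(1.0, surface_c, ambient_new_c)


# Hypothetical inputs: 33 C surface and emissivity 0.8 are assumptions.
# 22.0 C and 17.1 C are the ambient temperatures quoted in the article.
eps_cool = matching_emissivity(0.8, 33.0, 22.0, 17.1)
print(f"Emissivity needed at 17.1 C: {eps_cool:.3f}")
```

In this simplified picture, a colder room calls for a lower emissivity to hold radiative heat loss constant. That is the kind of adjustment a fabric with two switchable emissivity states could provide.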