Neutrino energy offers 24/7 power without sun, wind, or fuel. Learn how this silent force may soon charge your phone and power entire homes wirelessly.

Sellers, Broad Institute of Harvard and MIT, 415 Main St., Cambridge, Massachusetts 02142, USA. Phone: 617.714.7110; Email: [email protected].


Broad Institute of MIT and Harvard, Cambridge, Massachusetts, USA…

Billions of heat exchangers are in use around the world. These devices, whose purpose is to transfer heat between fluids, are ubiquitous across many commonplace applications: they appear in HVAC systems, refrigerators, cars, ships, aircraft, wastewater treatment facilities, cell phones, data centers, and petroleum refining operations, among many other settings.

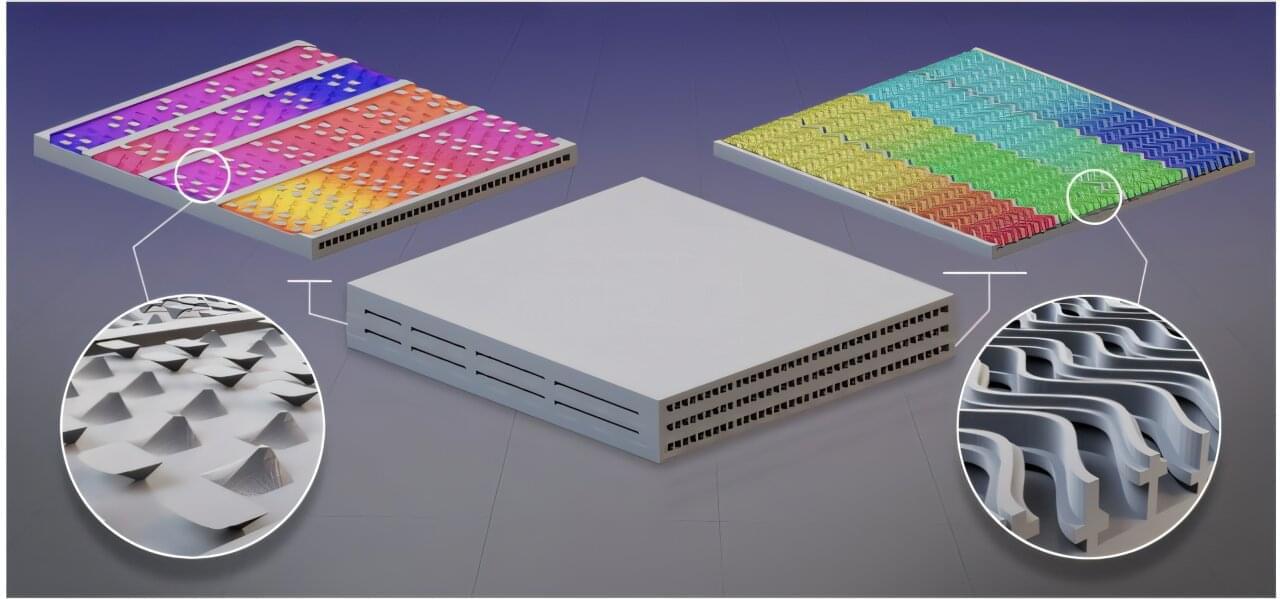

Researchers at the National University of Singapore (NUS) have shown that a single, standard silicon transistor, the core component of microchips found in computers, smartphones, and nearly all modern electronics, can mimic the functions of both a biological neuron and synapse.

A synapse is a specialized junction between nerve cells that allows electrical or chemical signals to pass from one neuron to the next. At a chemical synapse, the presynaptic neuron releases neurotransmitters that bind to receptors on the postsynaptic neuron. Synapses play a key role in communication between neurons and in physiological processes including perception, movement, and memory.
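The two roles described here, a neuron that fires and a synapse that sets how strongly one cell influences another, can be illustrated with the textbook leaky integrate-and-fire (LIF) model often used in neuromorphic computing. This is a minimal sketch under stated assumptions, not a model of the NUS transistor. The synapse is reduced to a single weight that scales each incoming spike. The neuron adds up the weighted input, leaks a fraction of its potential each time step, and fires (then resets) when the potential crosses a threshold. The function name `simulate_lif` and all parameter values are hypothetical.

```python
from typing import List


def simulate_lif(
    input_spikes: List[int],
    weight: float = 0.6,     # hypothetical synaptic weight (synapse "strength")
    leak: float = 0.9,       # fraction of membrane potential kept each step
    threshold: float = 1.0,  # potential at which the neuron fires
) -> List[int]:
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Each presynaptic spike (1) adds `weight` to the membrane potential.
    The potential decays by `leak` every step and resets to 0 after firing.
    Returns the output spike train (1 = fired at that step).
    """
    potential = 0.0
    output: List[int] = []
    for spike in input_spikes:
        potential = potential * leak + weight * spike  # leak, then integrate
        if potential >= threshold:
            output.append(1)
            potential = 0.0  # reset after firing
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    train = [1, 1, 0, 1, 1, 1, 0, 0]
    print(simulate_lif(train))              # [0, 1, 0, 0, 1, 0, 0, 0]
    print(simulate_lif(train, weight=0.3))  # [0, 0, 0, 0, 0, 1, 0, 0]
```

With the default weight, the example input makes the neuron fire twice. A weaker synapse (`weight=0.3`) delays firing until the sixth step. In neuromorphic systems, learning typically works by adjusting weights like this one.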


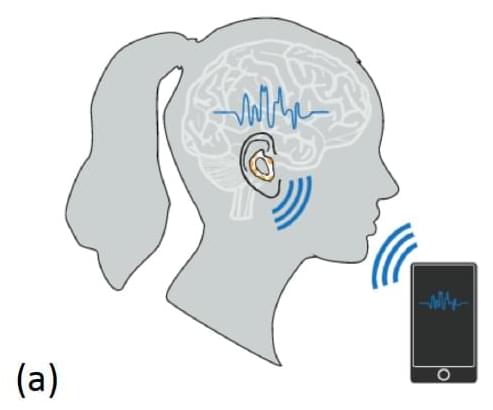

Communication between brain activity and computers, known as a brain-computer interface (BCI), has been used in clinical trials to monitor epilepsy and other brain disorders. BCI has also shown promise as a technology that lets a user move a prosthesis by neural commands alone. Tapping into the basic BCI concept could make smartphones smarter than ever.

Research has zeroed in on retrofitting wireless earbuds to detect neural signals. The data would then be transmitted to a smartphone via Bluetooth. Software at the smartphone end would translate different brain wave patterns into commands. The emerging technology is called Ear EEG.
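The last step in that pipeline, software turning brain-wave patterns into commands, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Ear EEG team's software. It assumes a 250 Hz sampling rate and compares power in the conventional alpha (8 to 12 Hz) and beta (13 to 30 Hz) EEG bands. "More alpha than beta power" maps to a hypothetical `pause` command, and everything else maps to `play`. The names `band_power` and `classify_window` are illustrative only.

```python
import math
from typing import Sequence


def band_power(samples: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Return the summed squared DFT magnitude over bins with f_lo <= freq <= f_hi.

    Uses a direct O(n^2) DFT so the sketch needs only the standard library.
    """
    n = len(samples)
    total = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        freq = k * fs / n
        if not (f_lo <= freq <= f_hi):
            continue
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        total += re * re + im * im
    return total


def classify_window(samples: Sequence[float], fs: float = 250.0) -> str:
    """Map one window of single-channel EEG to a hypothetical command."""
    alpha = band_power(samples, fs, 8.0, 12.0)  # alpha band (relaxed, eyes closed)
    beta = band_power(samples, fs, 13.0, 30.0)  # beta band (active, alert)
    return "pause" if alpha > beta else "play"


if __name__ == "__main__":
    fs = 250.0
    alpha_like = [math.sin(2 * math.pi * 10 * i / fs) for i in range(250)]  # 10 Hz, 1 s
    beta_like = [math.sin(2 * math.pi * 20 * i / fs) for i in range(250)]   # 20 Hz, 1 s
    print(classify_window(alpha_like, fs))  # pause
    print(classify_window(beta_like, fs))   # play
```

A practical system would likely remove artifacts such as eye blinks and jaw movement, use an FFT instead of this direct DFT, and calibrate the decision rule for each user. The direct DFT is used here only to keep the sketch dependency-free.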

Rikky Muller, Assistant Professor of Electrical Engineering and Computer Science, has refined the physical comfort of EEG earbuds and has demonstrated their ability to detect and record brain activity. With support from the Bakar Fellowship Program, she is building out several applications to establish Ear EEG as a new platform technology to support consumer and health monitoring apps.

Elon Musk’s Tesla is on the verge of launching a self-driving platform that could revolutionize transportation with millions of affordable robotaxis, positioning the company to outpace competitors like Uber.

## Questions to inspire discussion

### Tesla’s Autonomous Driving Revolution

🚗 Q: How is Tesla’s unsupervised FSD already at scale? A: Tesla’s unsupervised FSD is currently deployed in 7 million vehicles, with millions of units of hardware 4 dormant in older vehicles, available at a price point of $30,000 or less.

🏭 Q: What makes Tesla’s autonomous driving solution unique? A: Tesla’s solution is vertically integrated with end-to-end ownership of the entire system, including silicon design, software platform, and OEM, allowing them to keep costs low and push down utilization on ride-sharing networks.

### Impact on Ride-Sharing Industry

💼 Q: How will Tesla’s autonomous vehicles affect Uber drivers? A: Tesla’s unsupervised self-driving cars will likely replace Uber’s 1.2 million US drivers. Because they need no breaks and no human on board, they would be about 4x more useful and operate at a per-mile cost below 50% of current Uber rates.

💰 Q: What economic pressure will Tesla’s solution put on Uber? A: Tesla’s autonomous driving solution will create tremendous pressure on Uber. Its cost structure acts as a magnet for high utilization, and its fundamentally different design keeps Tesla’s costs low.

### Future Implications

🤝 Q: What potential strategy might Uber adopt to compete with Tesla? A: Uber may need to approach Tesla to pre-buy its first 2 million Cybercabs upfront, including production costs, as potentially the only path to compete with Tesla’s autonomous driving solution.

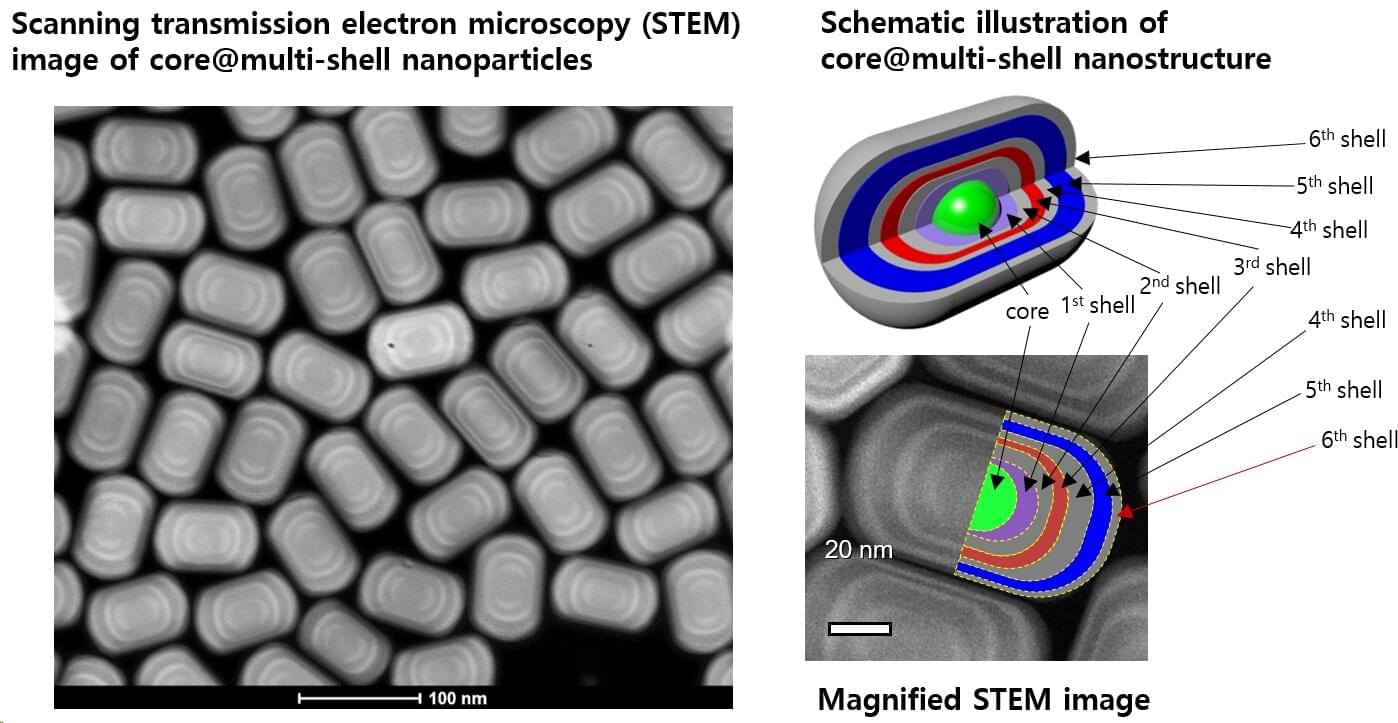

Dr. Ho Seong Jang and colleagues at the Extreme Materials Research Center at the Korea Institute of Science and Technology (KIST) have developed an upconversion nanoparticle technology based on a core@multi-shell nanostructure, a multilayer structure in which multiple shells surround a central core particle. By adjusting the wavelength of the infrared excitation light, a single nanoparticle can emit red, green, or blue (RGB) light with high color purity.

The work is published in the journal Advanced Functional Materials.
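"Upconversion" means the nanoparticle absorbs two or more lower-energy infrared photons and emits one higher-energy visible photon. The energy bookkeeping below is a general illustration and is not taken from the KIST paper. The 980 nm excitation wavelength is an assumed example, chosen because it is a common choice for upconversion nanoparticles. Real emission lines sit at longer wavelengths than this ideal limit because some energy is lost along the way.

$$
E = \frac{hc}{\lambda}, \qquad hc \approx 1239.84\ \text{eV}\cdot\text{nm}
$$

$$
E_{\text{out}} \le n\,E_{\text{in}} \;\Longrightarrow\; \lambda_{\text{out}} \ge \frac{\lambda_{\text{in}}}{n}
$$

For an assumed $\lambda_{\text{in}} = 980$ nm, each photon carries $E_{\text{in}} \approx 1.27$ eV. Two absorbed photons ($n = 2$) supply at most about 2.53 eV, so the emitted light can be no shorter than 490 nm. Three photons ($n = 3$) raise the ceiling to about 3.80 eV, or roughly 327 nm.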

Luminescent materials emit light and are used in a variety of display devices, including TVs, tablets, monitors, and smartphones, to let us view images and videos. However, conventional two-dimensional flat displays cannot fully convey the three-dimensional real world, which limits the sense of depth.

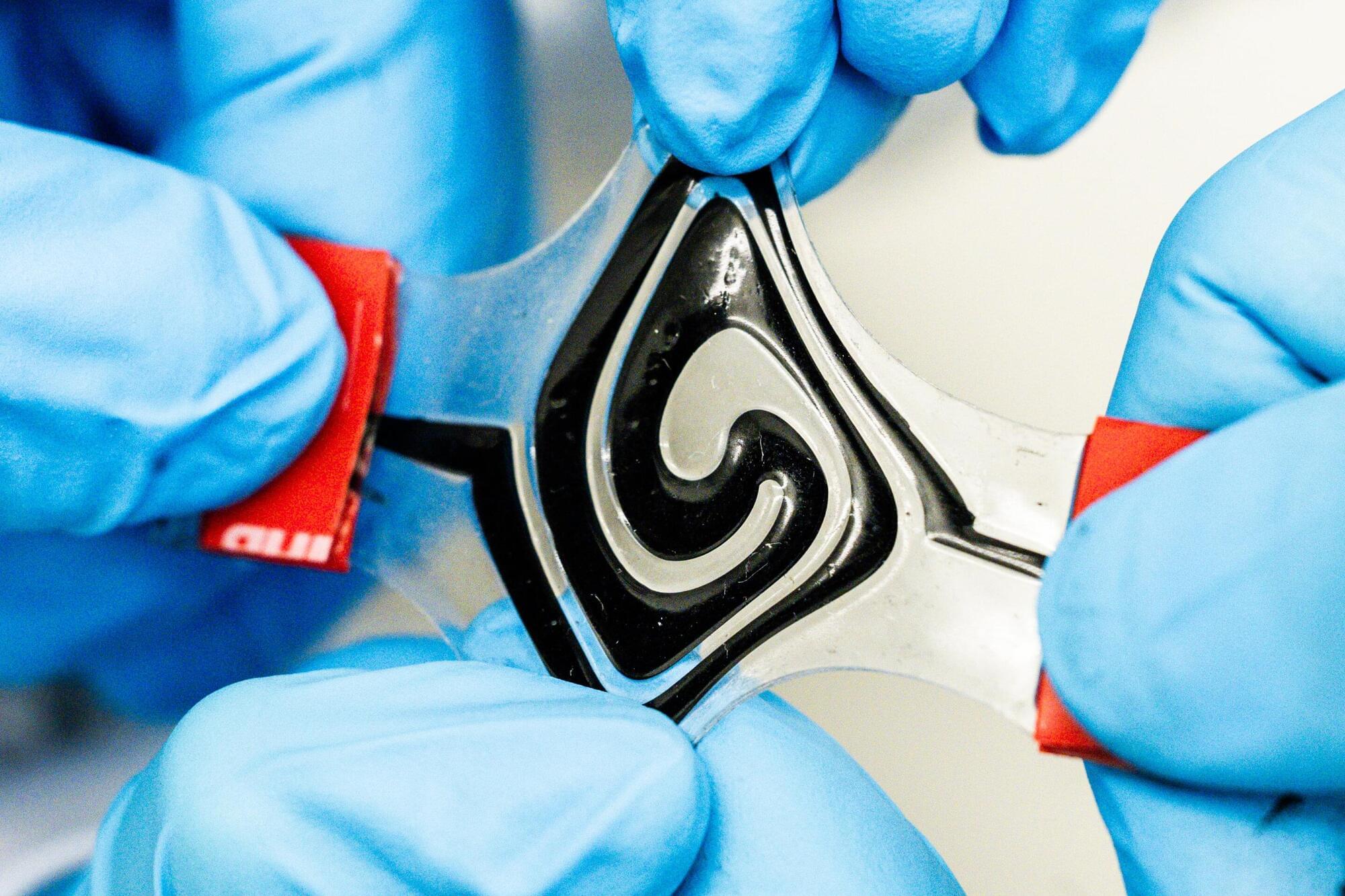

Using electrodes in a fluid form, researchers at Linköping University have developed a battery that can take any shape. This soft and conformable battery can be integrated into future technology in a completely new way. Their study has been published in the journal Science Advances.

“The texture is a bit like toothpaste. The material can, for instance, be used in a 3D printer to shape the battery as you please. This opens up for a new type of technology,” says Aiman Rahmanudin, assistant professor at Linköping University.

It is estimated that more than a trillion gadgets will be connected to the Internet in 10 years’ time. In addition to traditional technology such as mobile phones, smartwatches and computers, this could involve wearable medical devices such as insulin pumps, pacemakers, hearing aids and various health monitoring sensors, and in the long term also soft robotics, e-textiles and connected nerve implants.

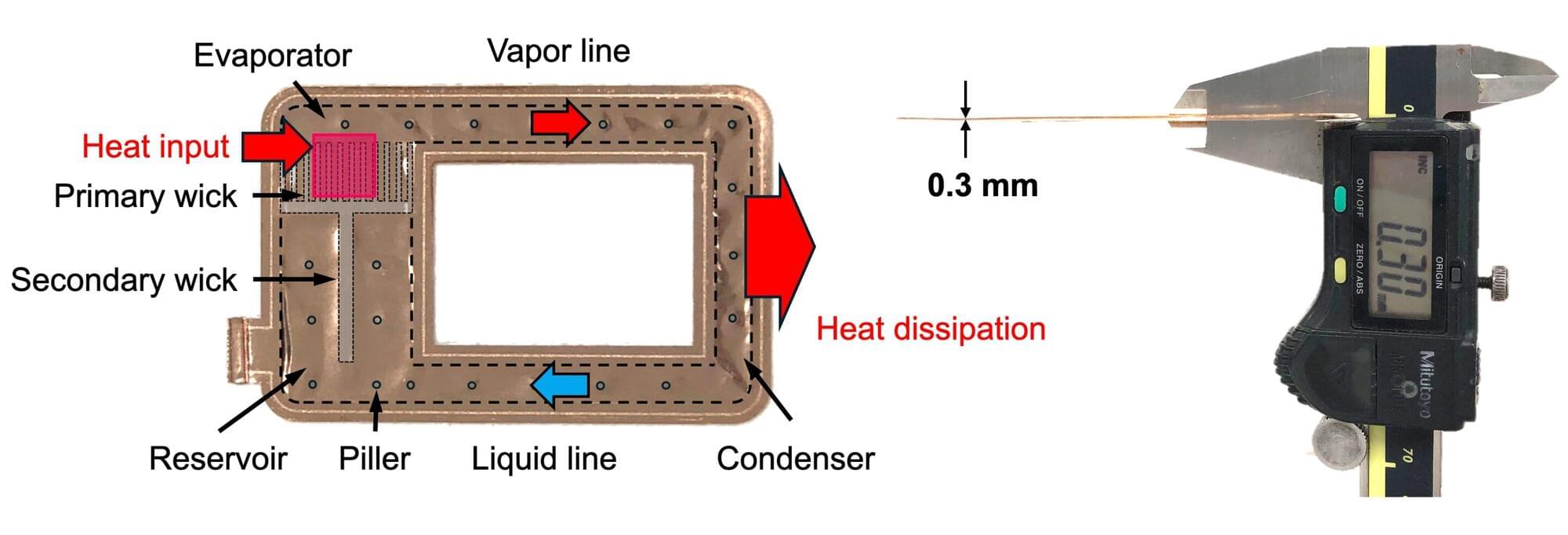

Scientists from Nagoya University in Japan have developed an innovative cooling device—an ultra-thin loop heat pipe—that significantly improves heat control for electronic components in smartphones and tablets. This breakthrough successfully manages heat levels generated during intensive smartphone usage, potentially enabling the development of even thinner mobile devices capable of running demanding applications without overheating or impeding performance.

The clone websites identified by DTI include a carousel of images that, when clicked, download a malicious APK file onto the user’s device. The package file acts as a dropper. It installs a second, embedded APK payload through the `DialogInterface.OnClickListener` interface, which allows the SpyNote malware to execute when an item in a dialog box is clicked.

“Upon installation, it aggressively requests numerous intrusive permissions, gaining extensive control over the compromised device,” DTI said.

“This control allows for the theft of sensitive data such as SMS messages, contacts, call logs, location information, and files. SpyNote also boasts significant remote access capabilities, including camera and microphone activation, call manipulation, and arbitrary command execution.”