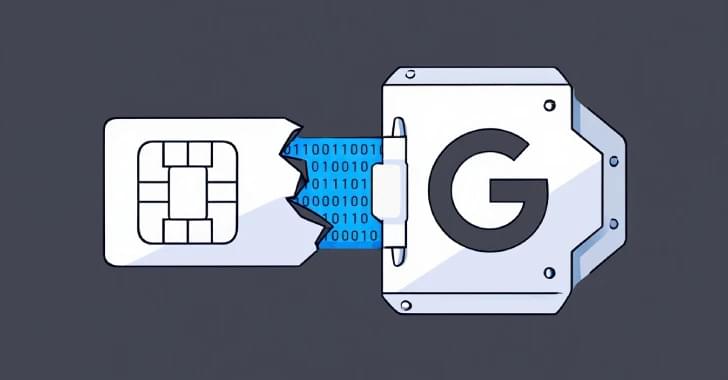

«Kyivstar» plans to launch new Starlink-based services this year and to expand their range and capabilities over time.

Oleksandr Komarov, CEO of «Kyivstar», described the company's plans to Reuters in Rome. According to him, satellite messaging will launch by the end of 2025, and mobile satellite broadband will follow in mid-2026.

Field tests of the new service began in late 2024 under an agreement with SpaceX. For its part, Elon Musk's space company will enable direct satellite communication with mobile phones in Ukraine.