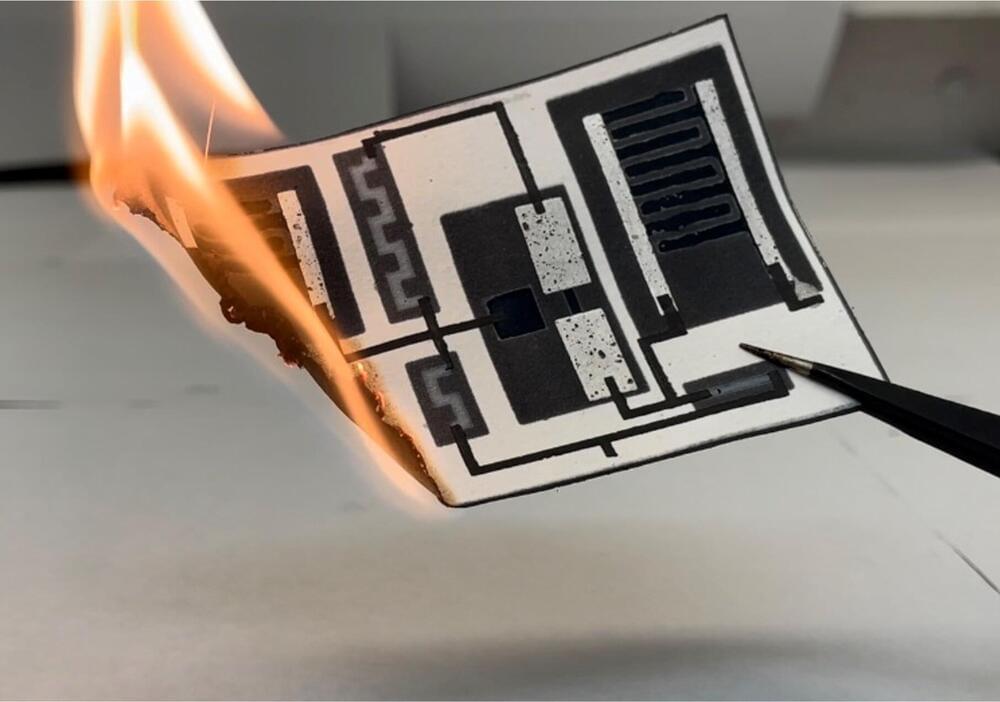

At 200 times stronger than steel, graphene has been hailed as a super material of the future since its discovery in 2004. The ultrathin carbon material is an excellent conductor of both electricity and heat, making it a promising ingredient for enhancing the semiconductor chips found in many electronic devices.

But while graphene-based research has been fast-tracked, the nanomaterial has hit roadblocks: in particular, manufacturers have not been able to create large, industrially relevant amounts of the material. New research from the laboratory of Nai-Chang Yeh, the Thomas W. Hogan Professor of Physics, is reinvigorating the graphene craze.

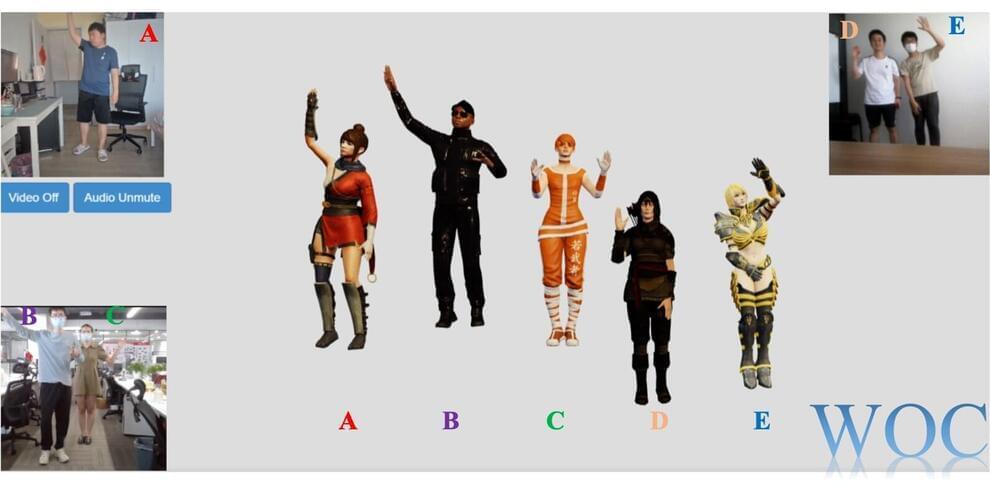

In two new studies, the researchers demonstrate that graphene can greatly improve the electrical circuits required for wearable and flexible electronics, such as smart health patches, bendable smartphones, helmets, and large folding display screens.