“Portrait (He Knew)” was released in 1977 on the album Point Of Know Return. It was clearly about Albert Einstein although not a lot of people seemed to be aware of the fact. It was a great song for a video, and one of my favorite on this particular album, the other’s being “Nobody Home”, “Closet Chronicles”, and “Dust In The Wind”. Point of Know Return was HUGE in 1978! I remember listening to it over and over and over…loved the many instrumental breaks and solos. The video is just layers and layers of masked images and masked video. Tried for some really cool effects and found some pretty neat ones…’specially fond of that tree recoil effect from the atom bomb at that cool little note drag! Anyway, as usual…I hope you find something to enjoy. [Lyrics] He had a thousand ideas, you might have heard his name He lived alone with his vision Not looking for fortune or fame Never said too much to speak of He was off on another plane The words that he said were a mystery Nobody’s sure he was sane But he knew, he knew more than me or you No one could see his view, Oh where was he going to He was in search of an answer The nature of what we are He was trying to do it a new way He was bright as a star But nobody understood him “His numbers are not the way” He’s lost in the deepest enigma Which no one’s unraveled today But he knew, he knew more than me or you No one could see his view, Oh where was he going to And he tried, but before he could tell us he died When he left us the people cried, Oh where was he going to? He had a different idea A glimpse of the master plan He could see into the future A true visionary man But there’s something he never told us It died when he went away If only he could have been with us No telling what he might say But he knew, he knew more than me or you No one could see his view Oh, where was he going to But he knew, you could tell by the picture he drew It was totally something new, Oh where was he going to?

Category: military

Diving Into 3 Key DARPA AI Programs

As one of the Department of Defense’s 14 critical technology areas, artificial intelligence has taken center stage in the organization’s research and development endeavors.

According to Matt Turek, deputy director of the Defense Advanced Research Projects Agency’s Information Innovation Office, approximately 70 percent of the agency’s programs now use AI and machine learning. Its priorities are not just to develop systems for U.S. warfighters, but to prevent “strategic surprise” from adversary AI systems.

Taming the Machine, with Nell Watson

Those who rush to leverage AI’s power without adequate preparation face difficult blowback, scandals, and could provoke harsh regulatory measures. However, those who have a balanced, informed view on the risks and benefits of AI, and who, with care and knowledge, avoid either complacent optimism or defeatist pessimism, can harness AI’s potential, and tap into an incredible variety of services of an ever-improving quality.

These are some words from the introduction of the new book, “Taming the Machine: Ethically Harness the Power of AI”, whose author, Nell Watson, joins us in this episode.

Nell’s many roles include: Chair of IEEE’s Transparency Experts Focus Group, Executive Consultant on philosophical matters for Apple, and President of the European Responsible Artificial Intelligence Office. She also leads several organizations such as EthicsNet.org, which aims to teach machines prosocial behaviours, and CulturalPeace.org, which crafts Geneva Conventions-style rules for cultural conflict.

Selected follow-ups:

• Nell Watson’s website (https://www.nellwatson.com/)

• Taming the Machine (https://tamingthemachine.com/) — book website.

• BodiData (https://www.bodidata.com/) (corporation)

• Post Office Horizon scandal: Why hundreds were wrongly prosecuted (https://www.bbc.co.uk/news/business-5…) — BBC News.

• Dutch scandal serves as a warning for Europe over risks of using algorithms (https://www.politico.eu/article/dutch…) — Politico.

• Robodebt: Illegal Australian welfare hunt drove people to despair (https://www.bbc.co.uk/news/world-aust…) — BBC News.

• What is the infected blood scandal and will victims get compensation? (https://www.bbc.co.uk/news/health-485…) — BBC News.

• MIRI 2024 Mission and Strategy Update (https://intelligence.org/2024/01/04/m…) — from the Machine Intelligence Research Institute (MIRI)

• British engineering giant Arup revealed as $25 million deepfake scam victim (https://edition.cnn.com/2024/05/16/te…) — CNN

• Zersetzung psychological warfare technique (https://en.wikipedia.org/wiki/Zersetzung) — Wikipedia.

Music: Spike Protein, by Koi Discovery, available under CC0 1.0 Public Domain Declaration.

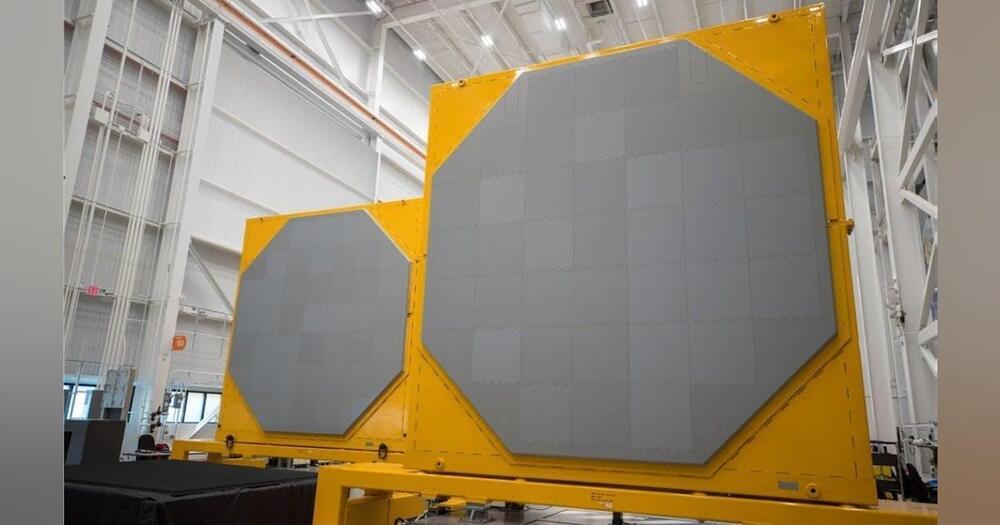

Navy taps RTX Raytheon for critical radar hardware for missile defense aboard Burke-class surface warships

WASHINGTON – Shipboard radar experts at RTX Corp. will build hardware for the new AN/SPY-6(V) Air and Missile Defense Radar (AMDR), which will be integrated into late-model Arleigh Burke-class (DDG 51) Aegis destroyer surface warships under terms of a $677.7 million U.S. Navy order announced Friday.

Officials of the Naval Sea Systems Command in Washington are asking the RTX Raytheon segment in Marlborough, Mass., for AN/SPY-6(V) shipboard radar hardware.

The Raytheon AN/SPY-6(V) AMDR will improve the Burke-class destroyer’s ability to detect hostile aircraft, surface ships, and ballistic missiles, Raytheon officials say. The AMDR will supersede the AN/SPY-1 radar, which has been standard equipment on Navy Aegis Burke-class destroyers and Ticonderoga-class cruisers.

Missile Defense Agency satellites track first hypersonic launch

The constellation will eventually include 100 satellites providing global coverage of advanced missile launches. For now, the handful of spacecraft offers limited coverage. Space Development Agency (SDA) Director Derek Tournear told reporters in April that coordinating tracking opportunities for the satellites is a challenge because they have to be positioned over the area where missile tests are being performed.

He noted that along with tracking routine Defense Department test flights, the satellites are also scanning global hot spots for missile activity as they orbit the Earth.

The flight the satellites tracked was the first for MDA’s Hypersonic Testbed, or HTB-1. The vehicle serves as a platform for various hypersonic experiments and advanced components and joins a growing inventory of high-speed flight test systems. That includes the Test Resource Management Center’s Multi-Service Advanced Capability Hypersonic Test Bed and the Defense Innovation Unit’s Hypersonic and High-Cadence Airborne Testing Capabilities program.

Blue Origin, SpaceX, ULA win $5.6 billion in Pentagon launch contracts

The three companies will compete for orders over a contract period running from fiscal year 2025 through 2029. Under the National Security Space Launch (NSSL) program, the Space Force orders individual launch missions up to two years in advance. At least 30 NSSL Lane 1 missions are expected to be competed over the five years.

Researchers ask industry for ways to guarantee the performance and accuracy of artificial intelligence (AI)

Officials of the U.S. Defense Advanced Research Projects Agency (DARPA) in Arlington, Va., issued a broad agency announcement (HR001124S0029) for the Artificial Intelligence Quantified (AIQ) project.

AIQ seeks to find ways of assessing and understanding the capabilities of AI to enable mathematical guarantees on performance. Successful use of military AI requires ensuring safe and responsible operation of autonomous and semi-autonomous technologies.