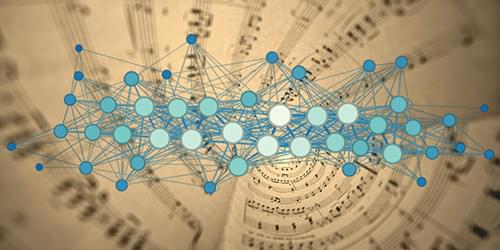

A network-theory model, tested on the work of Johann Sebastian Bach, offers tools for quantifying the amount of information delivered to a listener by a musical piece.

Great pieces of music transport the audience on emotional journeys and tell stories through their melodies, harmonies, and rhythms. But can the information contained in a piece, and the piece’s effectiveness at communicating it, be quantified? Researchers at the University of Pennsylvania have developed a framework, based on network theory, for making such quantitative assessments. Analyzing a large body of work by Johann Sebastian Bach, they show that the framework can be used to categorize different kinds of compositions on the basis of their information content [1]. The analysis also allowed them to pinpoint features of musical compositions that facilitate the communication of information to listeners. The researchers say that the framework could lead to new tools for the quantitative analysis of music and other forms of art.
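The article does not spell out how "information content" is measured. One standard information-theoretic choice, assumed here for illustration and not necessarily identical to the measure used in [1], treats a piece as a network whose nodes are notes and whose weighted edges are the probabilities of moving from one note to the next. The information is then the entropy of a random walk over that network: the average surprise, in bits, of each successive note. The function names below (`transition_matrix`, `stationary_distribution`, `entropy_rate`) are hypothetical.

```python
import math


def transition_matrix(counts):
    """Row-normalize a square matrix of note-to-note transition counts.

    Assumes every note has at least one outgoing transition (nonzero row sum).
    """
    return [[c / sum(row) for c in row] for row in counts]


def stationary_distribution(P, iterations=10_000, tol=1e-12):
    """Approximate the long-run share of time a random walk spends on each note.

    Uses power iteration on the "lazy" walk (stay put with probability 1/2),
    which has the same stationary distribution as P but cannot oscillate
    forever on periodic networks.
    """
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iterations):
        new = [0.5 * pi[j] + 0.5 * sum(pi[i] * P[i][j] for i in range(n))
               for j in range(n)]
        if max(abs(a - b) for a, b in zip(new, pi)) < tol:
            return new
        pi = new
    return pi


def entropy_rate(P):
    """Average information (bits per note transition) of a walk on network P."""
    pi = stationary_distribution(P)
    return -sum(
        pi[i] * p * math.log2(p)
        for i, row in enumerate(P)
        for p in row
        if p > 0  # zero-probability transitions contribute nothing
    )
```

For a toy three-note network in which each note moves to either of the other two with equal probability, `entropy_rate(transition_matrix([[0, 2, 2], [2, 0, 2], [2, 2, 0]]))` gives 1 bit per transition, while a strictly repeating cycle of three notes gives 0 bits, because every next note is fully predictable.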

To tackle complex systems such as musical pieces, the team turned to network theory, which offers powerful tools for understanding the behavior of discrete, interconnected units, such as individuals during a pandemic or nodes in an electrical power grid. Researchers have previously attempted to analyze the connections between musical notes using network-theory tools. Most of these studies, however, ignore an important aspect of communication: the flawed nature of perception. “Humans are imperfect learners,” says Suman Kulkarni, who led the study. The team’s model incorporates this imperfection by describing a fuzzy process through which a listener derives an “inferred” network of notes from the “true” network of the original piece.
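The article describes the listener's fuzzy process only qualitatively. One common way to formalize such imperfect learning, assumed here for illustration and not confirmed by this article as the exact model in [1], is to let the listener's memory blur each observed transition over longer paths, discounting a path that is k steps longer by a factor η^k. For a true transition matrix A and a hypothetical fuzziness parameter 0 ≤ η < 1, the inferred network is then (1 − η)·A·(I − ηA)⁻¹. The sketch below (with a hypothetical `inferred_network` function) evaluates the equivalent series in pure Python.

```python
def mat_mul(X, Y):
    """Multiply two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inferred_network(A, eta, tol=1e-12):
    """Blur a true transition matrix A into a listener's inferred one.

    Assumed model: (1 - eta) * sum_{k>=0} eta**k * A**(k+1), the series form
    of (1 - eta) * A @ inv(I - eta * A). eta = 0 means perfect learning.
    """
    if not 0 <= eta < 1:
        raise ValueError("eta must lie in [0, 1)")
    n = len(A)
    power = [row[:] for row in A]  # A**(k+1), starting at k = 0
    weight = 1.0 - eta             # (1 - eta) * eta**k, starting at k = 0
    result = [[0.0] * n for _ in range(n)]
    while weight > tol:  # stop once the remaining terms are negligible
        for i in range(n):
            for j in range(n):
                result[i][j] += weight * power[i][j]
        power = mat_mul(power, A)
        weight *= eta
    return result
```

With η = 0 the listener recovers A exactly. For a strict three-note cycle `[[0, 1, 0], [0, 0, 1], [1, 0, 0]]` and η = 0.5, the first row of the inferred network is approximately [1/7, 4/7, 2/7]: the listener still expects the true next note most strongly but also assigns weight to notes that actually arrive two or three steps later.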