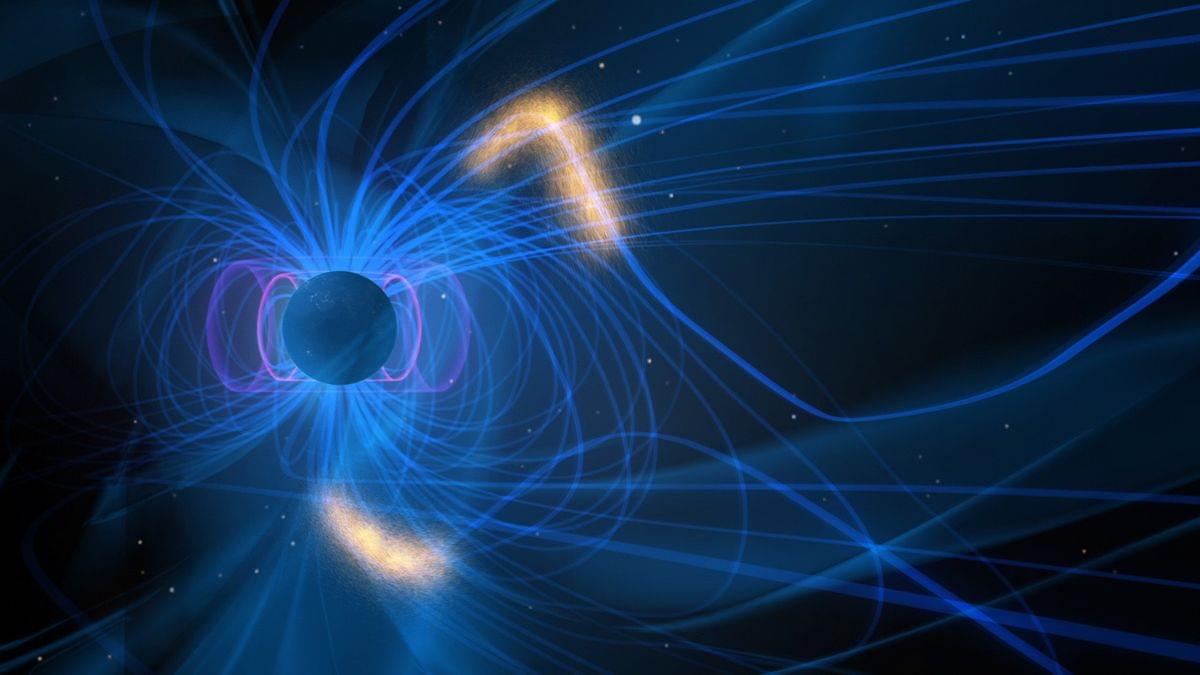

Could a planet orbiting a black hole sustain life? We dive into the challenges and wonders of living in such an extreme cosmic environment. Discover what it might be like to live near a black hole, where time slows, gravity warps, and the universe takes on a truly alien form.

Watch my exclusive video Big Alien Theory: https://nebula.tv/videos/isaacarthur–…

Get Nebula using my link for 40% off an annual subscription: https://go.nebula.tv/isaacarthur

Get a Lifetime Membership to Nebula for only $300: https://go.nebula.tv/lifetime?ref=isa…

Use the link gift.nebula.tv/isaacarthur to give a year of Nebula to a friend for just $30.

Visit our Website: http://www.isaacarthur.net

Join Nebula: https://go.nebula.tv/isaacarthur

Support us on Patreon: / isaacarthur

Support us on Subscribestar: https://www.subscribestar.com/isaac-a…

Facebook Group: / 1583992725237264

Reddit: / isaacarthur

Twitter: / isaac_a_arthur on Twitter and RT our future content.

SFIA Discord Server: / discord

Credits:

Black Sun Rising: Living On A Planet Around A Black Hole

Episode 487; February 20, 2025

Written, Produced & Narrated by: Isaac Arthur

Editor: Briana Brownell

Graphics: Jeremy Jozwik, Ken York YD Visual, Udo Scroeter

Select imagery/video supplied by Getty Images

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator

Phase Shift, “Forest Night”

Chris Zabriskie, “Unfoldment, Revealment”, “A New Day in a New Sector”, “Oxygen Garden”

Stellardrone, “Red Giant”, “Billions and Billions”
