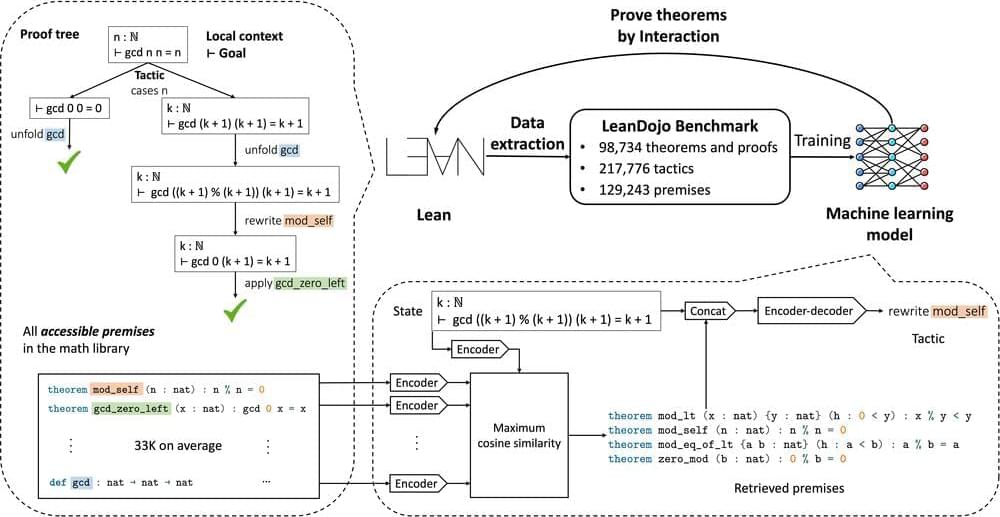

Lean Co-pilot for LLM-human collaboration to write formal mathematical proofs that are 100% accurate.

Top right: LeanDojo extracts proofs in Lean into datasets for training machine learning models. It also enables the trained model to prove theorems by interacting with Lean’s proof environment.

Top left: The proof tree of a Lean theorem ∀n∈N, gcd n n = n, where gcd is the greatest common divisor. When proving the theorem, we start from the original theorem as the initial state (the root) and repeatedly apply tactics (the edges) to decompose states into simpler sub-states, until all states are solved (the leaf nodes). Tactics may rely on premises such as mod_self and gcd_zero_left defined in a large math library. E.g., mod_self is an existing theorem ∀n∈N, n % n = 0 used in the proof to simplify the goal.