Scientists at the University of Bayreuth are investigating the structure and long-term behavior of galaxies using mathematical models based on Einstein’s general theory of relativity. Their approach uses a deep neural network to predict the stability of galaxy models quickly, so that astrophysical hypotheses can be verified or falsified within seconds.

Dr. Sebastian Wolfschmidt and Christopher Straub aim to understand the structure and long-term behavior of galaxies. “Since these cannot be fully analyzed by astronomical observations, we use mathematical models of galaxies,” explains Straub, a doctoral student at the Chair of Mathematics VI at the University of Bayreuth.

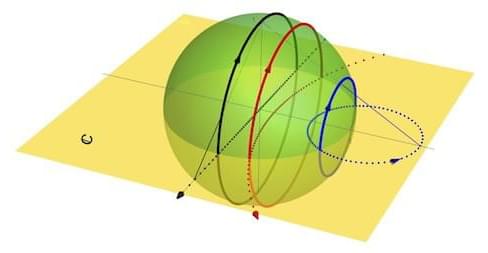

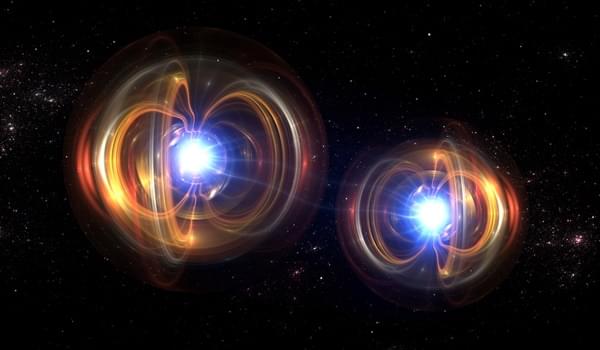

“In order to take into account that most galaxies contain a black hole at their center, our models are based on Albert Einstein’s general theory of relativity, which describes gravity as the curvature of four-dimensional spacetime.”