“Metaphysical Experiments: Physics and the Invention of the Universe” by Bjørn Ekeberg Book Link: https://amzn.to/4imNNk5

“Metaphysical Experiments: Physics and the Invention of the Universe” explores the intricate relationship between physics and metaphysics, arguing that fundamental metaphysical assumptions profoundly shape scientific inquiry, particularly in cosmology. The author traces historical developments from Galileo and Newton to modern cosmology and particle physics, showing how theoretical frameworks and experimental practices are intertwined with philosophical commitments about the nature of reality. The book critiques the uncritical acceptance of mathematical universality in contemporary physics, suggesting that cosmology’s reliance on hypological and metalogical reasoning reveals a deep-seated faith rather than purely empirical validation. Ultimately, it questions the limits and implications of a science that strives for universal mathematical truth while potentially overlooking its own inherent complexities and metaphysical underpinnings.

Chapter summaries:

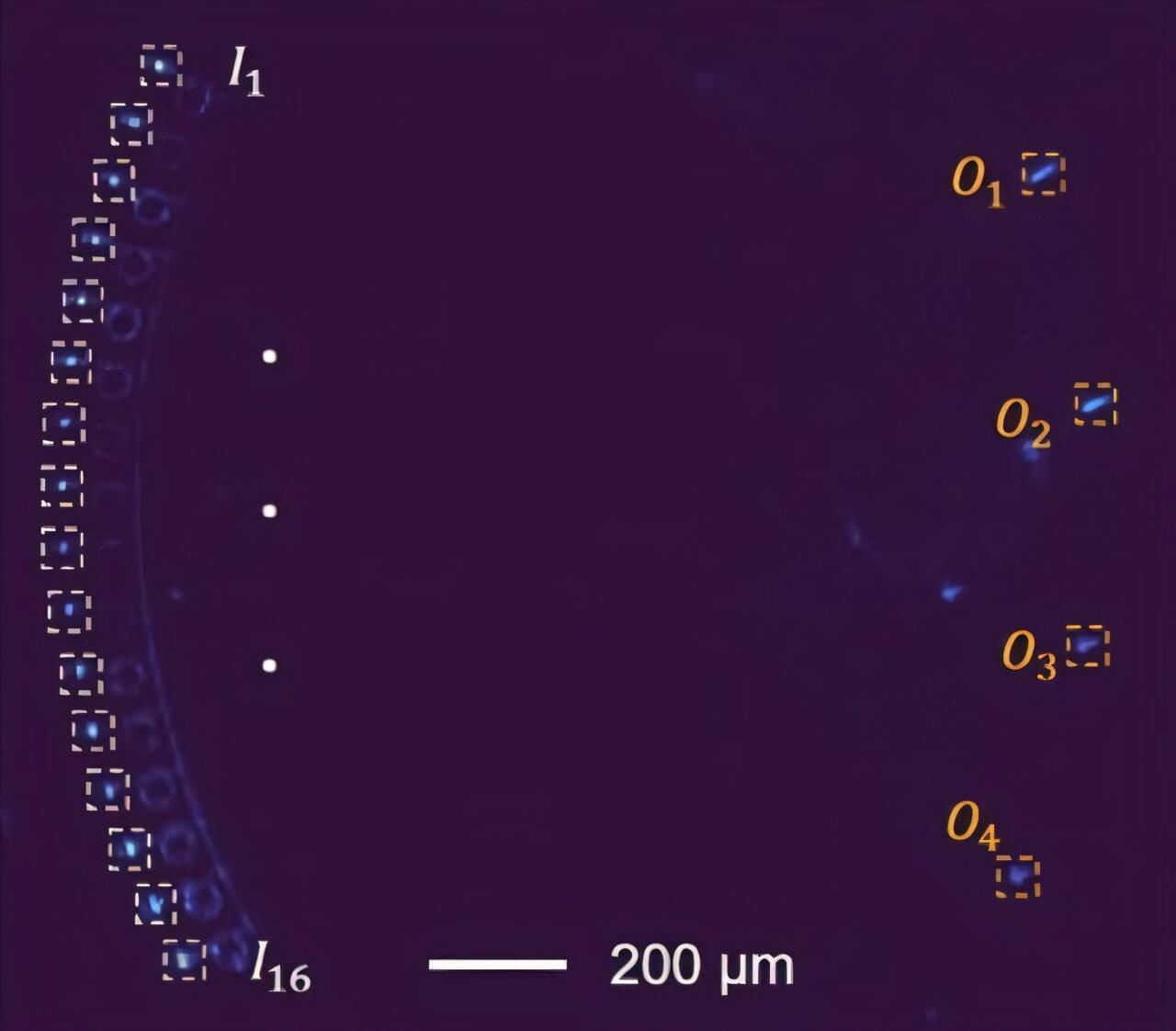

- Cosmology in the Cave: This chapter examines the Large Hadron Collider (LHC) at CERN near Geneva to explore the metaphysics underlying the pursuit of a “Theory of Everything” linking subatomic physics to cosmology.

- Of God and Nature: This chapter delves into the seventeenth century to analyze how the universe was invented as a concept alongside the first telescopes, considering the roles of Galileo, Descartes, and Spinoza.

- Probability and Proliferation: This chapter investigates the nineteenth-century shift in physics with the rise of probabilistic reasoning and the scientific invention of the particle, focusing on figures like Maxwell and Planck.

- Metaphysics with a Big Bang: This chapter discusses the twentieth-century emergence of scientific cosmology and the big bang theory, shaped by large-scale science projects and the ideas of Einstein and Hawking.

- Conclusion: This final section asks what large-scale experiments such as the James Webb Space Telescope (JWST) signify as metaphysical explorations and reflects on our contemporary scientific relationship with the cosmos.

#Physics

#Cosmology

#Universe

#Science

#Metaphysics

#PhilosophyofScience

#JWST

#LHC

#BigBangTheory

#DarkMatter

#DarkEnergy

#SpaceTelescope

#ParticlePhysics

#HistoryofPhysics

#ScientificInquiry #scienceandreligion #meaningoflife #consciousness #god #spirituality #faith #reason #creationtheory #finetuninguniverse #astrophysics #quantumphysics #intelligentdesign #cosmicconsciousness #reality #Nonlocality #QuantumEntanglement #CosmicInformation #ScienceOfMind #NatureOfReality #Spirit #BigLibraryHypothesis #NLSETI #QuantumBrain #Multiverse #InformationTheory #ExtrasensoryPerception #SciencePhilosophy #deepdive #skeptic #podcast #synopsis #books #bookreview #ai #artificialintelligence #booktube #aigenerated #history #alternativehistory #aideepdive #ancientmysteries #hiddenhistory #futurism #videoessay