The announcement highlights a partnership with Beijing after an international legal ruling underlined rifts between China and Southeast Asian nations over rival claims to the South China Sea.

Genie out of the bottle.

A new guide to 3D printing rights and responsibilities has been launched to explain what consumers need to know before printing in 3D, including the potential risks of creating and sharing 3D printable files and the safeguards that are in place.

The website “Everything you need to get started in 3D printing” was developed by staff at the University of Melbourne in response to the growing number of users keen to find, share, and create 3D printed goods online.

A team from the School of Culture and Communications at the University of Melbourne designed the website, which includes a scorecard for various 3D printing sites and some useful tips for those getting started in the 3D printing world.

New ink for printers to improve speed and conserve ink. I know a few legal and accounting firms that would love this.

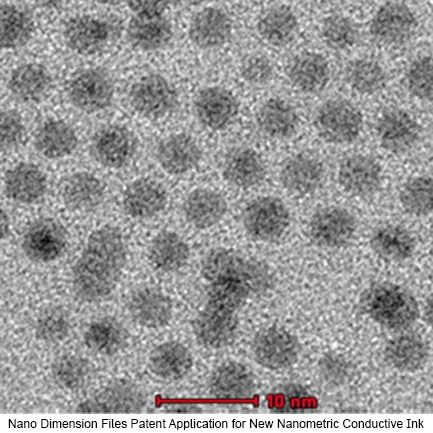

Nano Dimension Ltd has announced that its wholly owned subsidiary, Nano Dimension Technologies, has filed a patent application with the U.S. Patent and Trademark Office for the development of a new nanometric conductive ink, which is based on a unique synthesis.

The new nanoparticle synthesis further reduces the size of the silver nanoparticles in the company’s ink products, achieving particles as small as 4 nanometers.

Nano Dimension believes that accurate control of the nanoparticles’ size and surface properties will improve the performance of the company’s DragonFly 2020 3D printer, currently in development. The new ink enables lower melting temperatures and more complete sintering (the fusing of particles into a solid conductive trace), leading to even higher conductivity.

This can serve many uses, such as genealogy. However, the bigger advancement will be in criminal and legal investigations.

Rice University researchers have developed gas biosensors to “see” into soil and allow them to follow the behavior of the microbial communities within.

In a study in the American Chemical Society’s journal Environmental Science and Technology, the Rice team described using genetically engineered bacteria that release methyl halide gases to monitor microbial gene expression in soil samples in the lab.

The bacteria are programmed using synthetic biology to release gas to report when they exchange DNA through horizontal gene transfer, the process by which organisms share genetic traits without a parent-to-child relationship. The biosensors allow researchers to monitor such processes in real time without having to actually see into or disturb a lab soil sample.

Interesting.

The Science Council of Japan will make clear its position on military-linked research — possibly overturning a decades-long ban — by early next year, the academic group said Friday.

A committee of 15 academics from fields ranging from physics and political science to law held its first meeting to discuss whether to revise statements released by the council in 1950 and 1967 stating that the group will “never engage in military research.”

Over the next several months, the committee will hold five or six sessions to discuss how they should assess changes in the security and technology environment, how to define dual-use research, how studies tied to national security would impact academic transparency and how inflows of defense-related funding would alter the overall nature of research.

My new story for Vice Motherboard on the future of political campaigning:

Lest we think future elections are all about the candidates, perhaps the biggest change on the horizon could come from digital direct democracy: the concept of citizens providing real-time input into government. I gently advocate for a fourth branch of government, in which the people can vote on issues that matter to them and their decisions could have real legal consequences for Congress, the Supreme Court, and the Presidency.

Of course, that’s only if government even exists anymore. It’s possible the coming age of artificial intelligence and robots may replace the need for politicians, or at least human ones. Some experts think superintelligent AI might be here in 10 to 15 years, so why not have a robot president that is totally altruistic and not susceptible to lobbyists and personal desires? This machine leader would simply always calculate the greatest good for the greatest number of people, and go with that. No more Republicans, Democrats, Libertarians, Greens, or whatever else we are.

It’s a brave new future we face, but technology will make our lives easier, more democratic, and more interesting. It will also change the game show we go through every four years called the US presidential election. In fact, if we’re lucky, and given how crazy these elections have made America look, maybe technology will make future elections disappear altogether.

Zoltan Istvan is a futurist, author of The Transhumanist Wager, and presidential candidate for the Transhumanist Party. He writes an occasional column for Motherboard in which he ruminates on the future beyond natural human ability.


Years ago I was an expert witness for an IP case involving some very badly designed and coded software. The case involved a significant amount of money, and I had to review tons of documents, code, and diagrams to help prepare the case and give my deposition. It would have been nice to have a bot to assist, so I do like what I am reading in this article.

Rebecca Hawkes, head of marketing at RAVN Systems, discusses why artificial intelligence is showing promise in the legal sector.

There’s been a considerable amount of media hype around artificial intelligence recently, but this isn’t just the latest buzzword.

It has now become a practical reality, with the legal industry paving the way in innovation. According to a report from Tractica, the market for enterprise artificial intelligence systems will grow from $202.5 million in 2015 to $11.1 billion by 2024.

Wow, scary. Not scary because law-abiding citizens do this; scary because criminals and terrorists can. And we have already seen ammo and high-capacity gun clips produced as well.

The 28-year-old is the face of open-source 3D gun design, an online movement of enthusiasts who use 3D printers and machining tools to build their own homemade weapons – ones that can shoot very real, and very deadly bullets.

Wilson isn’t some gun loon on an online soapbox; he is a well-educated, well-spoken, very argumentative young man who’s as responsible for creating his press portrayal as the journalists who’ve written about him. “I’m now more and more of a self-caricature,” he tells me. “I’ve had to become a fanatic over the past three years just to move the ball another three yards.”