In a new study published in ACM Transactions on the Web, researchers from Queen Mary University of London have uncovered the mechanisms behind one of the most dramatic collapses in the cryptocurrency world: the downfall of the TerraUSD stablecoin and its associated currency, LUNA. Using advanced mathematical techniques and specialized software, the team identified suspicious trading patterns that suggest a coordinated attack on the ecosystem, which led to a loss of $3.5 billion in value virtually overnight.

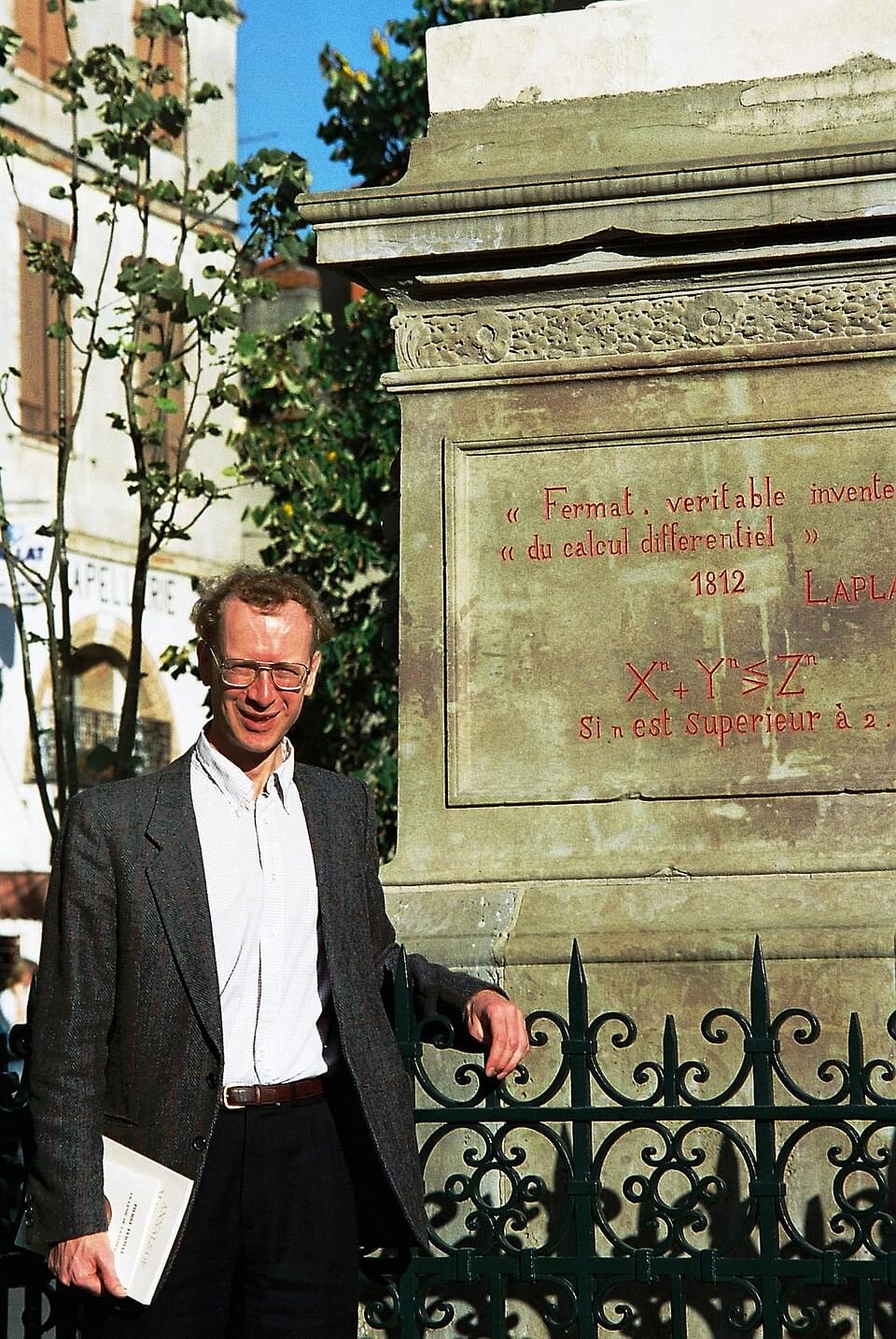

The study, led by Dr. Richard Clegg, uses temporal multilayer graph analysis, a method for examining complex, interconnected systems as they change over time. This approach allowed the researchers to map the relationships between different cryptocurrencies traded on the Ethereum blockchain and to show how a series of deliberate, large-scale trades destabilized the TerraUSD stablecoin.

Stablecoins like TerraUSD are designed to hold a steady value, typically pegged to a fiat currency such as the US dollar. In May 2022, however, TerraUSD and its sister currency, LUNA, collapsed. Dr. Clegg's research sheds light on how this happened, uncovering evidence of a coordinated attack by traders who were betting that the system's value would fall, a practice known as "shorting."