The juridical metaphor in physics has ancient roots. Anaximander, in the 6th century BCE, was perhaps the first to invoke the concept of cosmic justice, speaking of natural entities paying “penalty and retribution to each other for their injustice according to the assessment of Time” (Kirk et al., 2010, p. 118). This anthropomorphizing tendency persisted through history, finding its formal expression in Newton’s Principia Mathematica, where he articulated his famous “laws” of motion. Newton, deeply influenced by his theological views, conceived of these laws as divine edicts — mathematical expressions of God’s will imposed upon a compliant universe (Cohen & Smith, 2002, p. 47).

This legal metaphor has served science admirably for centuries, providing a framework for conceptualizing the universe’s apparent obedience to mathematical principles. Yet it carries implicit assumptions worth examining. Laws suggest a lawgiver, hinting at external agency. They imply prescription rather than description — a subtle distinction with profound philosophical implications. As physicist Paul Davies (2010) observes, “The very notion of physical law is a theological one in the first place, a fact that makes many scientists squirm” (p. 74).

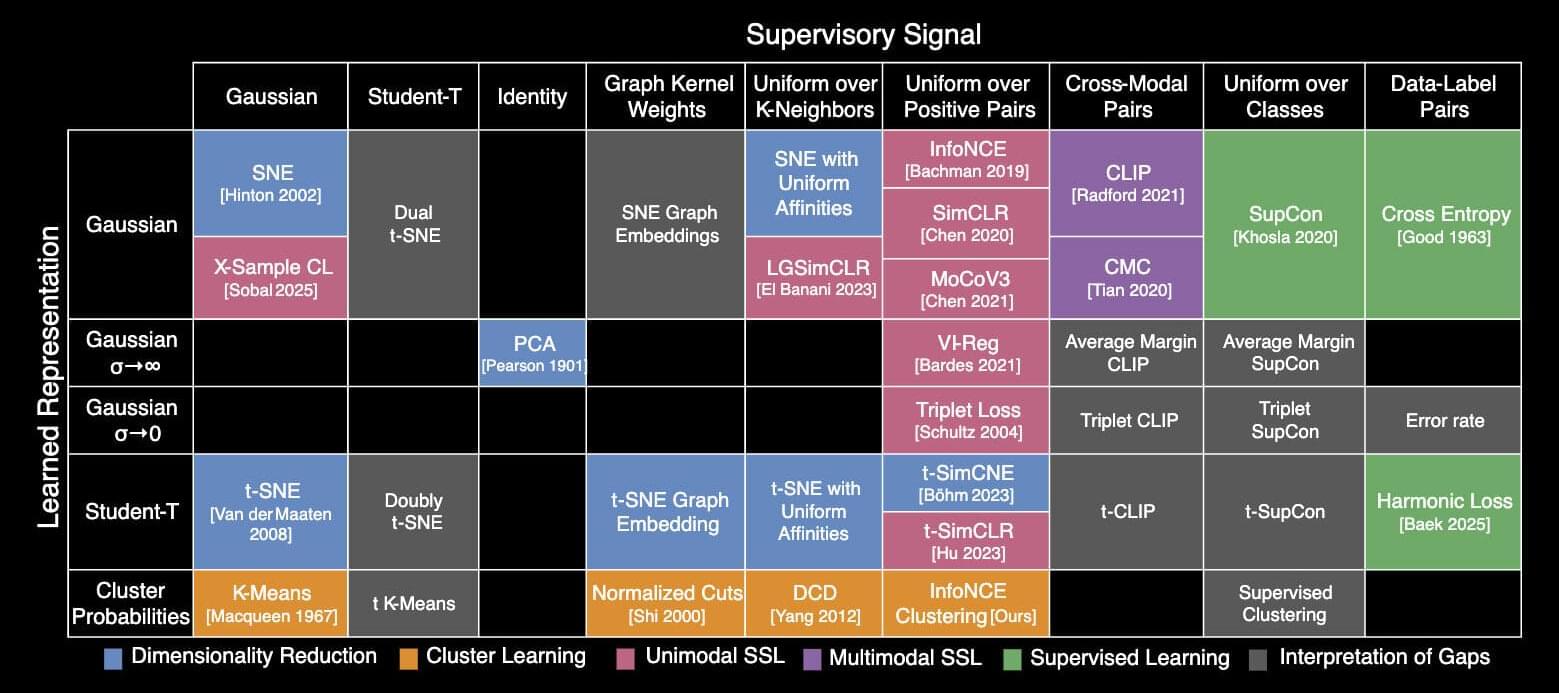

Enter the computational metaphor, a framework more resonant with our digital age. In this conceptualization, the universe executes algorithms rather than obeying laws, and space, time, energy, and matter constitute the data structure on which those algorithms operate. This shift is more than semantic: it is a fundamental reconceptualization of physical reality, one that aligns well with emerging work in theoretical physics and information science.