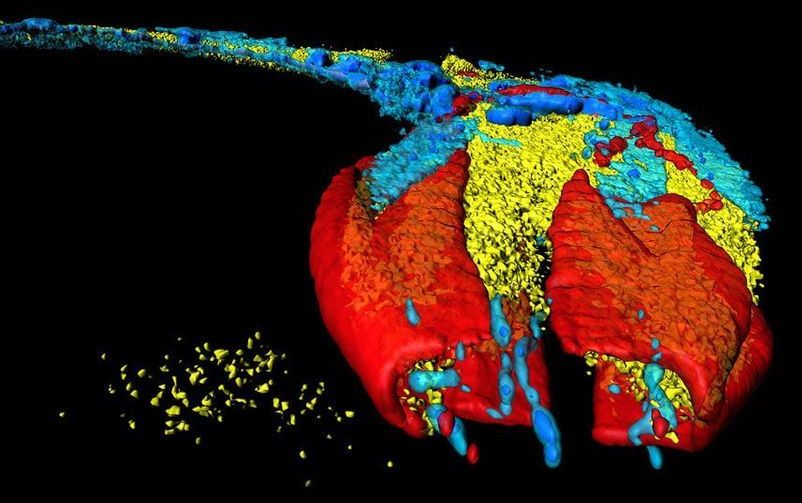

For a humble, microscopic worm with only 302 neurons, C. elegans has had a lot of firsts. It was the first multicellular animal to have its whole genome sequenced. It was also the spark that lit the connectome fire—the revolutionary idea that mapping the entirety of connections among neurons will unveil secrets of our minds, memory, and consciousness. And if the connectomists are to be believed, a map of individual brains may be the blueprint that will one day hurtle AI into human-level intelligence, or reconstruct an entire human mind in digital form.

More than 30 years ago, a pioneering group of scientists painstakingly traced and reconstructed the roundworm’s neural wiring by hand. The “heroic” effort, unaided by modern computers and brain-mapping algorithms, resulted in the first connectome in 1986.

Yet the “mind of the worm” map had significant shortcomings. For one, it focused on only one sex, the hermaphrodite, a “female” equivalent that can self-fertilize. This makes it hard to tell which connections are universal to the species and which depend on sex and reproduction. For another, because the effort relied entirely on human beings, who get tired, bored, and mess up, the map wasn’t entirely accurate. Even after multiple rounds of refinement, errors could linger, and those errors would royally screw up any interpretation of results based on these maps.