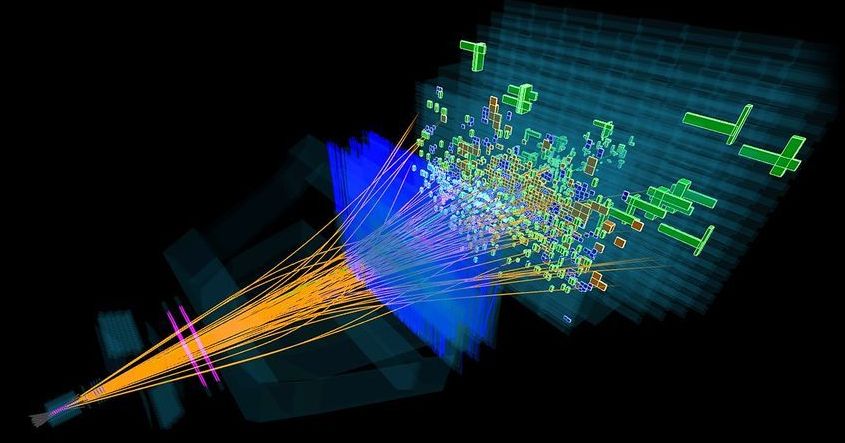

In their latest analysis, first presented at a seminar in March, the LHCb physicists found that several measurements involving the decay of B mesons conflict slightly with the predictions of the standard model of particle physics—the reigning set of equations describing the subatomic world. Taken alone, each oddity looks like a statistical fluctuation, and they may all evaporate with additional data, as has happened before. But their collective drift suggests that the aberrations may be breadcrumbs leading beyond the standard model to a more complete theory.

“For the first time in certainly my working life, there are a confluence of different decays that are showing anomalies that match up,” said Mitesh Patel, a particle physicist at Imperial College London who is part of LHCb.

The B meson is so named because it contains a bottom quark, one of six fundamental quark particles that account for most of the universe’s visible matter. For unknown reasons, the quarks break down into three generations: heavy, medium, and light, each containing a pair of quarks with electric charges of opposite sign (+2/3 and −1/3 of the proton’s charge). Heavier quarks decay into their lighter counterparts, almost always switching their charge, too. For instance, when the negatively charged heavy bottom quark in a B meson drops a generation, it usually becomes a middleweight, positively charged “charm” quark.