For the past six years, Los Alamos National Laboratory has led the world in trying to understand one of the most frustrating barriers facing variational quantum computing: the barren plateau.

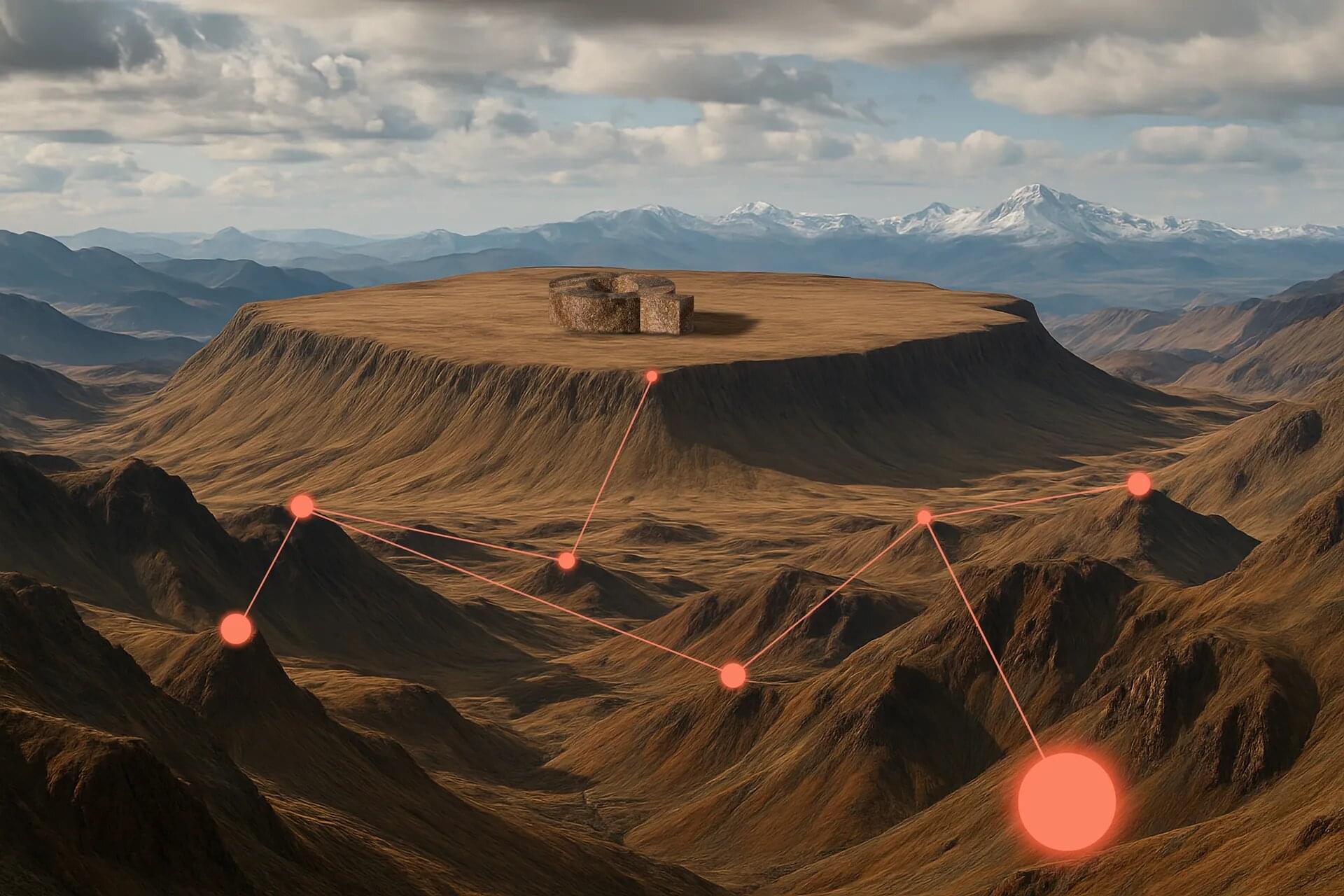

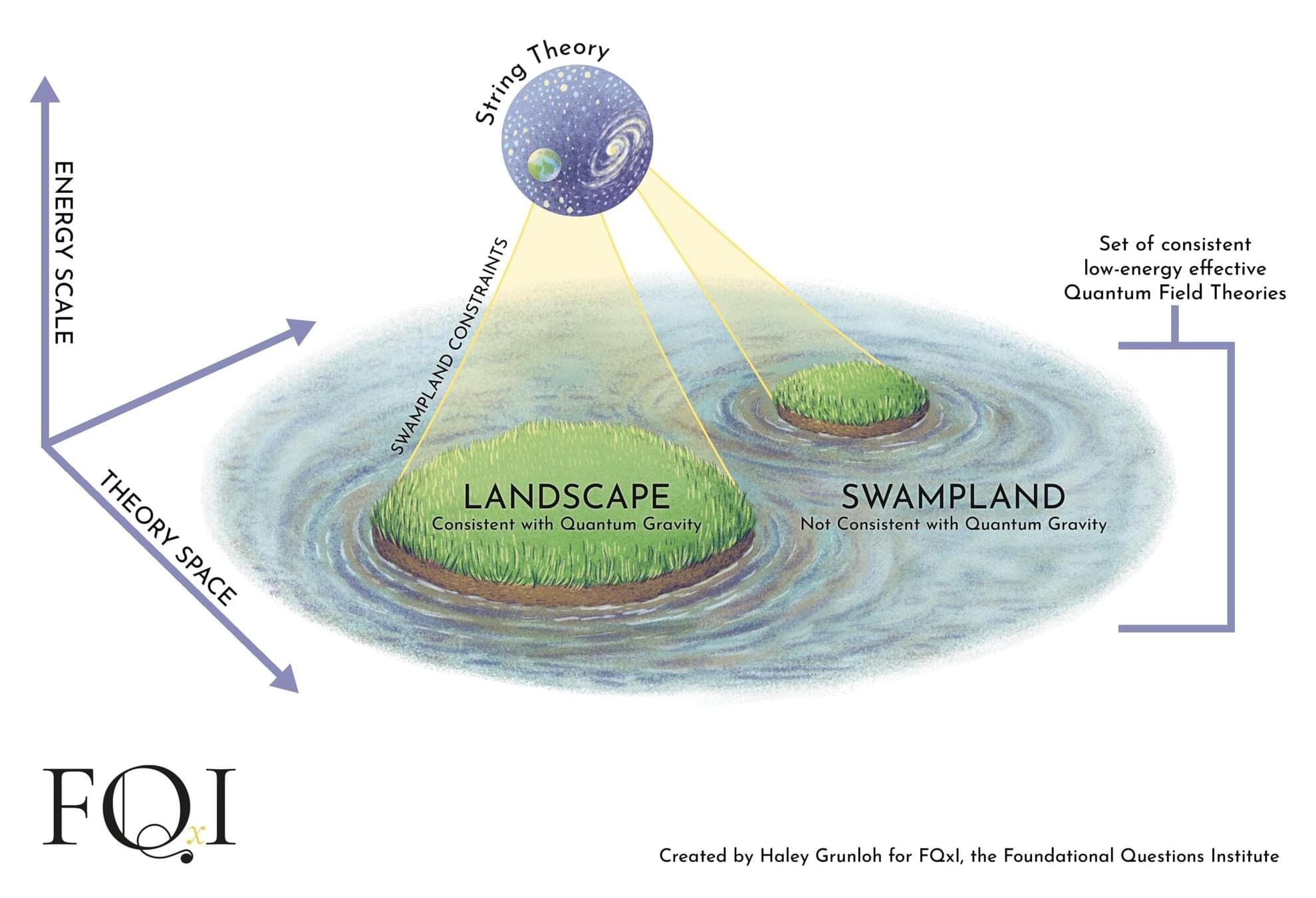

“Imagine a landscape of peaks and valleys,” said Marco Cerezo, the Los Alamos team’s lead scientist. “When optimizing a variational, or parameterized, quantum algorithm, one needs to tune a series of knobs that control the solution quality and move you in the landscape. Here, a peak represents a bad solution and a valley represents a good solution. But when researchers develop algorithms, they sometimes find their model has stalled and can neither climb nor descend. It’s stuck in this space we call a barren plateau.”

For these quantum computing methods, barren plateaus can be mathematical dead ends that prevent their use on large-scale, realistic problems. Scientists have spent considerable time and resources developing quantum algorithms, only to find that they sometimes inexplicably stall. Understanding when and why barren plateaus arise is a problem that has taken the community years to solve.