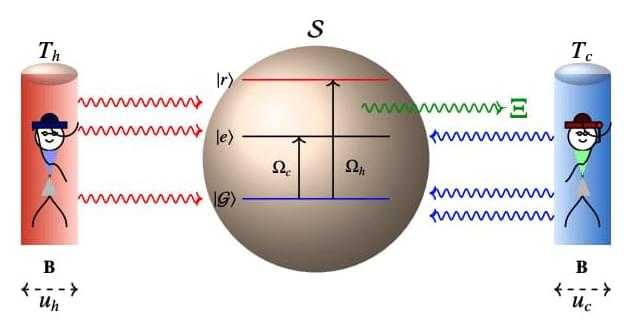

The pursuit of more efficient engines continually pushes the boundaries of thermodynamics, and recent work suggests that relativistic effects may offer a surprising way to surpass conventional limits. Tanmoy Pandit, affiliated with the Leibniz Institute of Hannover and TU Berlin, together with Pritam Chattopadhyay from the Weizmann Institute of Science and colleagues, investigates a thermal machine that exploits relativistic motion to reach efficiencies beyond the Carnot bound set by the reservoir temperatures. Their analysis shows that relativistic motion reshapes the energy spectra seen by the working medium through the Doppler effect. As a result, useful work can be extracted even when there is no temperature difference between the reservoirs, which makes relativistic motion a genuine resource for energy conversion. The result challenges the usual reading of thermodynamic bounds and opens the possibility of future technologies that use relativistic effects to improve performance.
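The Doppler reshaping described above can be made concrete with a small numerical sketch. The sketch rests on several assumptions: units with ħ = k_B = c = 1, a bath that is isotropic in its own rest frame, and a uniform average over the direction cosine μ of the Doppler-shifted Planck occupation. The angle averaging is an illustrative assumption and not necessarily the model used in the paper. All function names are hypothetical.

```python
import math


def planck_occupation(x):
    """Bose-Einstein mean occupation 1/(e^x - 1), with x = hbar*omega/(k_B*T) > 0."""
    return 1.0 / math.expm1(x)


def moving_bath_occupation(x, v):
    """Angle-averaged occupation seen by a system moving at speed v (units of c).

    Assumption (illustrative): the mode along direction cosine mu is shifted to
    x * gamma * (1 - v*mu), and all mu in [-1, 1] are weighted uniformly.
    """
    if v == 0.0:
        return planck_occupation(x)
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    a = x * gamma
    # Closed form of (1/2) * integral_{-1}^{1} dmu / (exp(a*(1 - v*mu)) - 1)
    num = -math.expm1(-a * (1.0 + v))  # 1 - e^{-a(1+v)}
    den = -math.expm1(-a * (1.0 - v))  # 1 - e^{-a(1-v)}
    return math.log(num / den) / (2.0 * a * v)


def moving_bath_occupation_quad(x, v, n=2000):
    """Same angular average via composite Simpson's rule (n even), as a cross-check."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    h = 2.0 / n
    total = 0.0
    for i in range(n + 1):
        mu = -1.0 + i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * planck_occupation(x * gamma * (1.0 - v * mu))
    return 0.5 * total * h / 3.0  # divide the integral by the interval length 2


def effective_temperature_ratio(x, v):
    """T_eff / T such that a rest-frame Planck law at T_eff gives the moving occupation."""
    n = moving_bath_occupation(x, v)
    return x / math.log1p(1.0 / n)


for x in (0.5, 5.0):
    print(x, planck_occupation(x), moving_bath_occupation(x, 0.5),
          effective_temperature_ratio(x, 0.5))
```

Under these assumptions, a moving bath looks hotter than its rest temperature at large x = ħω/(k_B T) and colder at small x. The effective temperature therefore depends on the transition frequency. That frequency dependence is what allows two baths at the same rest temperature to act, at a given pair of frequencies, as if their temperatures differed.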

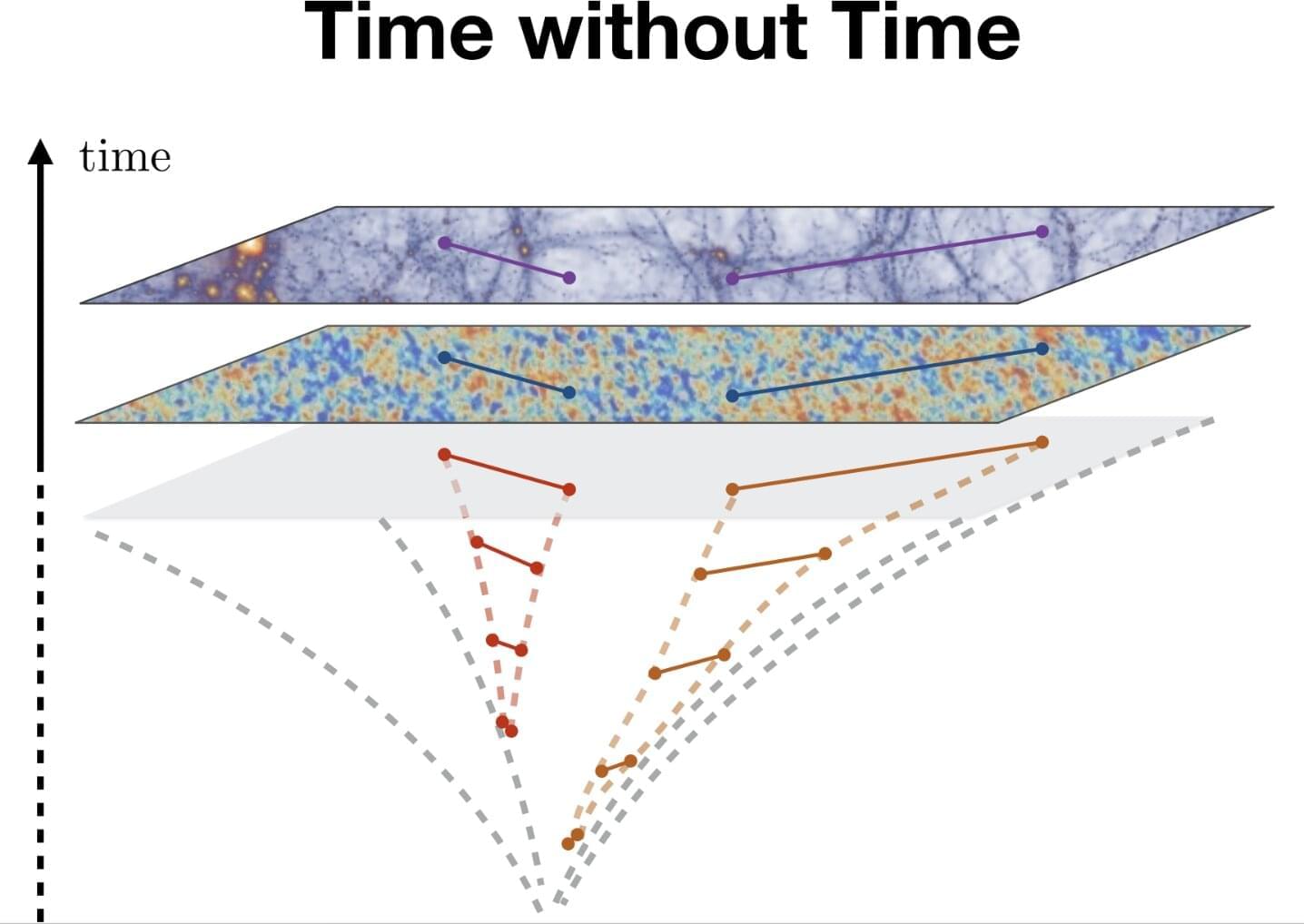

The appendices detail the Lindblad superoperator that describes the system's dynamics and the transformation to a rotating frame that simplifies the analysis. They show how relativistic motion modifies the average number of quanta in the reservoirs and the associated superoperators. They also derive the steady-state density-matrix elements of the three-level heat engine, from which the expressions for power output and efficiency follow. The supplementary material further describes the Monte Carlo method used to estimate a generalized Carnot-like efficiency bound for relativistic quantum thermal machines. It gives pseudocode for the implementation and explains how the bound is extracted from pairs of efficiency and power values. Overall, the supplement is clearly organized, with detailed derivations and well-explained physical concepts, which makes it a valuable resource for anyone interested in relativistic quantum thermal machines.
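The supplement's pseudocode is not reproduced in this summary. The following is therefore only a hypothetical sketch of how an efficiency bound can be read off from sampled (efficiency, power) pairs.

The sketch makes these assumptions:

- The working medium is the textbook Scovil and Schulz-DuBois three-level maser, so η = 1 - ω_c/ω_h.
- Only the sign of the power is used. Power is treated as positive when the signal transition is population-inverted.
- Reservoir occupations use the same illustrative angle-averaged Doppler model as above.
- Units are ħ = k_B = c = 1.
- The sampling ranges are arbitrary, and the function names are hypothetical.

```python
import math
import random


def planck(x):
    """Bose-Einstein occupation 1/(e^x - 1), x = hbar*omega/(k_B*T) > 0."""
    return 1.0 / math.expm1(x)


def moving_occupation(x, v):
    """Angle-averaged Doppler-shifted occupation (illustrative assumption, c = 1)."""
    if v == 0.0:
        return planck(x)
    a = x / math.sqrt(1.0 - v * v)  # x * gamma
    ratio = math.expm1(-a * (1.0 + v)) / math.expm1(-a * (1.0 - v))
    return math.log(ratio) / (2.0 * a * v)


def estimate_efficiency_bound(t_hot, t_cold, v_hot=0.0, v_cold=0.0,
                              samples=20000, seed=1):
    """Largest sampled efficiency among draws whose power is positive.

    Hypothetical model: hot bath on the 0-2 transition (omega_h), cold bath on
    the 0-1 transition (omega_c), work on 1-2, so eta = 1 - omega_c/omega_h.
    Power is taken as positive when level 2 is more populated than level 1,
    which for bath-only populations means n_hot(omega_h) > n_cold(omega_c).
    """
    rng = random.Random(seed)
    best = 0.0
    for _ in range(samples):
        omega_h = rng.uniform(0.1, 10.0)   # hot transition frequency
        ratio = rng.uniform(0.01, 0.999)   # omega_c / omega_h
        n_hot = moving_occupation(omega_h / t_hot, v_hot)
        n_cold = moving_occupation(ratio * omega_h / t_cold, v_cold)
        if n_hot > n_cold:                 # keep only (efficiency, power > 0) pairs
            best = max(best, 1.0 - ratio)
    return best


print("rest baths:", estimate_efficiency_bound(2.0, 1.0), "Carnot:", 1.0 - 1.0 / 2.0)
print("equal T, moving hot bath:", estimate_efficiency_bound(1.0, 1.0, v_hot=0.5))
```

With stationary baths, the estimate approaches the Carnot value 1 - T_c/T_h from below. With equal temperatures and a moving hot bath, it becomes positive in this toy model. This mirrors the claim that work can be extracted without a temperature difference.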

Relativistic Motion Boosts Heat Engine Efficiency

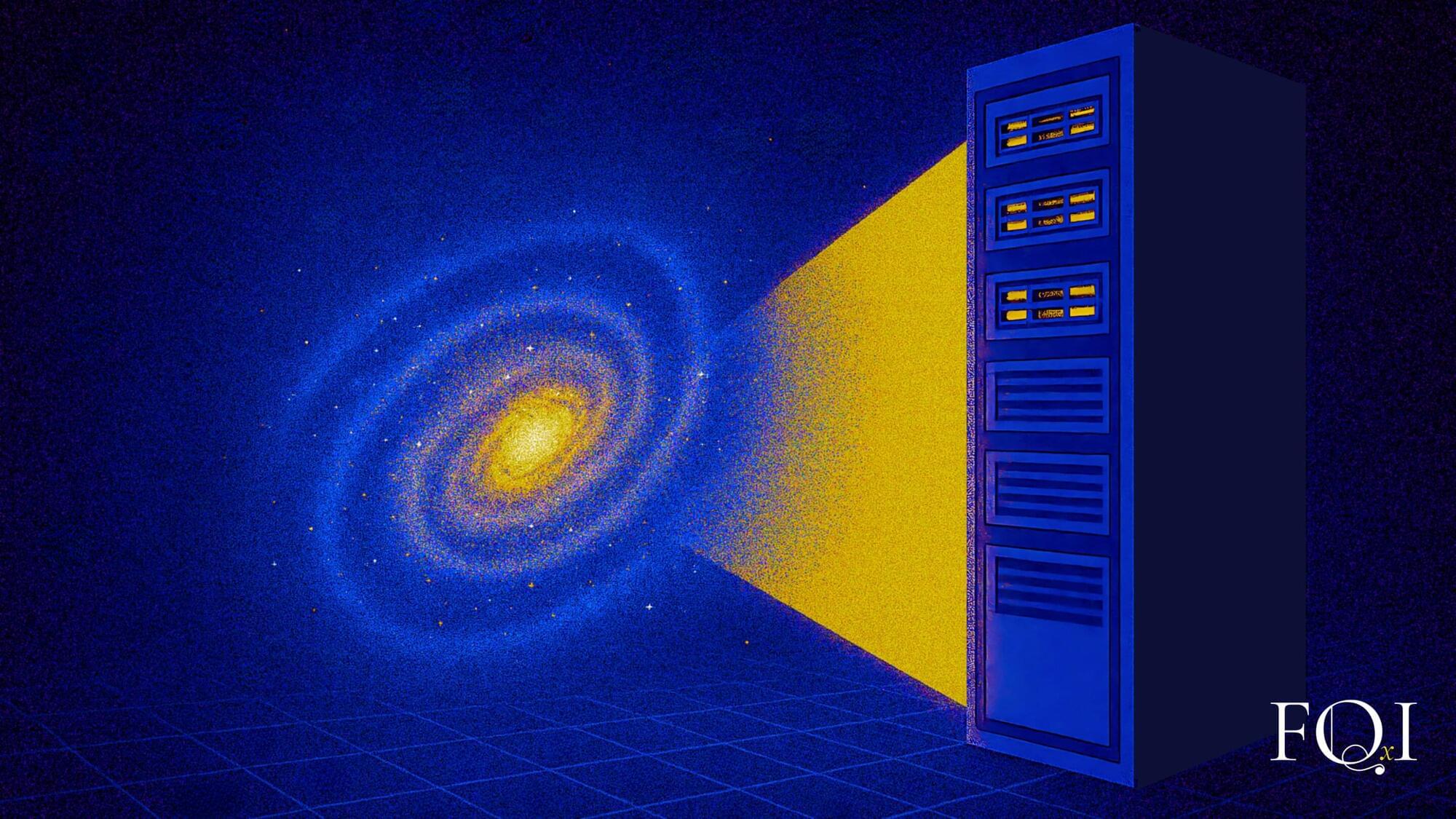

Researchers have shown that relativistic motion can act as a genuine thermodynamic resource, allowing a heat engine to exceed the conventional efficiency limit. The team studied a three-level maser whose thermal reservoirs are in constant relativistic motion relative to the working medium. Their model captures how this motion alters the exchange of energy between the reservoirs and the medium. The results show that the engine's performance depends not only on the temperature difference but also, significantly, on the velocity of the reservoirs. In particular, because relativistic motion reshapes the energy spectrum seen by the working medium, the engine can operate with an efficiency above the Carnot limit.
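The inversion condition of the standard three-level maser shows why stationary reservoirs reproduce the Carnot limit and why motion can change that. The derivation below assumes the following:

- The textbook Scovil and Schulz-DuBois level scheme: hot bath on |0⟩↔|2⟩ at ω_h, cold bath on |0⟩↔|1⟩ at ω_c, and work extracted on |1⟩↔|2⟩.
- Bath-only steady-state populations, which corresponds to the weak-signal limit.
- Positive power requires population inversion.

The paper's full model may contain additional detail.

```latex
\begin{align}
  \frac{p_2}{p_0} &= \frac{\bar n_h}{\bar n_h + 1}, \qquad
  \frac{p_1}{p_0} = \frac{\bar n_c}{\bar n_c + 1}, \\
  p_2 > p_1 \;&\Longleftrightarrow\; \bar n_h(\omega_h) > \bar n_c(\omega_c), \\
  \bar n_j(\omega) = \frac{1}{e^{\hbar\omega/k_B T_j} - 1}
  \;&\Rightarrow\; \frac{\omega_h}{T_h} < \frac{\omega_c}{T_c}
  \;\Longleftrightarrow\; \eta = 1 - \frac{\omega_c}{\omega_h} < 1 - \frac{T_c}{T_h}.
\end{align}
```

For stationary reservoirs the occupations are Planck functions of ω/T_j, so the inversion condition reduces to the Carnot bound. When a reservoir moves, its occupation is reshaped by the Doppler effect and is no longer a Planck function of ω/T_j at the rest-frame temperature. The second line then no longer reduces to the third, and the bound 1 - T_c/T_h need not hold.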