Quantum computing and machine learning are two of the most exciting technologies for transforming businesses, and combining them could be more powerful still. Using quantum algorithms inside machine learning programs is called quantum machine learning. The field has become a major focus for tech firms, which have released tools and platforms for deploying such algorithms effectively. These include TensorFlow Quantum from Google, the Quantum Machine Learning (QML) library from Microsoft, and QC Ware Forge, built on Amazon Braket, among others.

Because the field holds so many opportunities, students skilled in quantum machine learning algorithms are likely to be in high demand. Let us look at a few online courses for learning quantum machine learning.

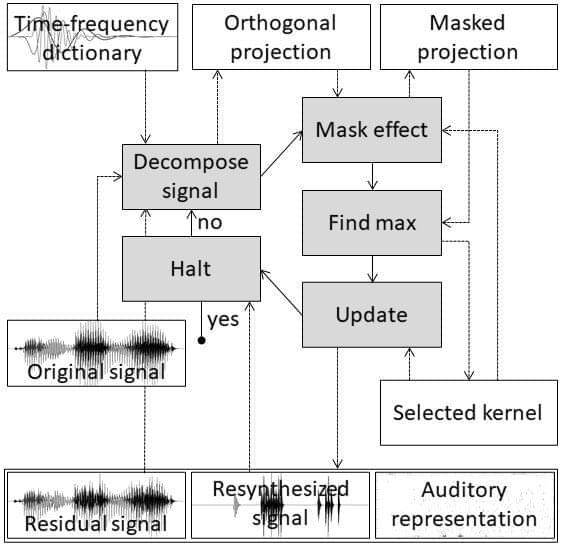

In this course, students start with the basics of quantum computing and quantum machine learning. The course also covers building QNodes and custom templates. It teaches students to compute gradients with Autograd, define loss functions for quantum circuits using PennyLane, and develop with the PennyLane API. Students also learn how to build their own PennyLane plugin and turn quantum nodes (QNodes) into TensorFlow Keras layers.
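To give a feel for the ideas in this course description, here is a minimal sketch. It is not PennyLane code and does not use the PennyLane API. To stay dependency-free, it hand-simulates in plain Python what a simple QNode computes: a one-qubit circuit that applies RX(θ) and then RY(φ) to |0⟩ and returns the expectation value of Pauli-Z, which works out to cos θ cos φ. It then computes gradients with the parameter-shift rule, one differentiation method PennyLane supports, used here in place of Autograd. Finally, it runs plain gradient descent with the expectation value itself as the loss. The function names, the step size of 0.4 and the starting angles are illustrative assumptions.

```python
import math

# A single-qubit state is a list [amplitude of |0>, amplitude of |1>] of complex numbers.


def rx(theta, state):
    """Apply the rotation RX(theta) = exp(-i * theta * X / 2) to a one-qubit state."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    a0, a1 = state
    return [c * a0 - 1j * s * a1, -1j * s * a0 + c * a1]


def ry(phi, state):
    """Apply the rotation RY(phi) = exp(-i * phi * Y / 2) to a one-qubit state."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    a0, a1 = state
    return [c * a0 - s * a1, s * a0 + c * a1]


def circuit(params):
    """Hypothetical stand-in for a QNode: RX then RY on |0>, returning <Z>."""
    state = [1 + 0j, 0j]
    state = rx(params[0], state)
    state = ry(params[1], state)
    # Expectation value of Pauli-Z: probability of |0> minus probability of |1>.
    return abs(state[0]) ** 2 - abs(state[1]) ** 2


def parameter_shift_grad(f, params, shift=math.pi / 2):
    """Gradient of f via the parameter-shift rule (exact for RX/RY-style gates)."""
    grad = []
    for i in range(len(params)):
        plus = list(params)
        plus[i] += shift
        minus = list(params)
        minus[i] -= shift
        grad.append((f(plus) - f(minus)) / 2)
    return grad


def train(params, stepsize=0.4, steps=100):
    """Plain gradient descent, using the circuit's <Z> value as the loss."""
    params = list(params)
    for _ in range(steps):
        g = parameter_shift_grad(circuit, params)
        params = [p - stepsize * gi for p, gi in zip(params, g)]
    return params


if __name__ == "__main__":
    start = [0.011, 0.012]
    print("initial loss:", circuit(start))
    final = train(start)
    print("final params:", final)
    print("final loss:", circuit(final))
```

Minimizing ⟨Z⟩ drives the qubit towards |1⟩, so the loss should approach -1. In PennyLane itself, the circuit would be a real QNode, and the gradient step would come from the library's own differentiation and optimizers. The hand-written version only illustrates what happens underneath.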