Nvidia, the $2 trillion AI giant, is moving to lap the market once again.

Anime Production Inspired Real-World Anime Super-Resolution https://huggingface.co/papers/2403.

While real-world anime super-resolution (SR) has gained increasing attention in the SR community, existing methods still adopt techniques…

The new decking board not only stores more carbon than is emitted in its production but is also 18 percent cheaper than market prices.

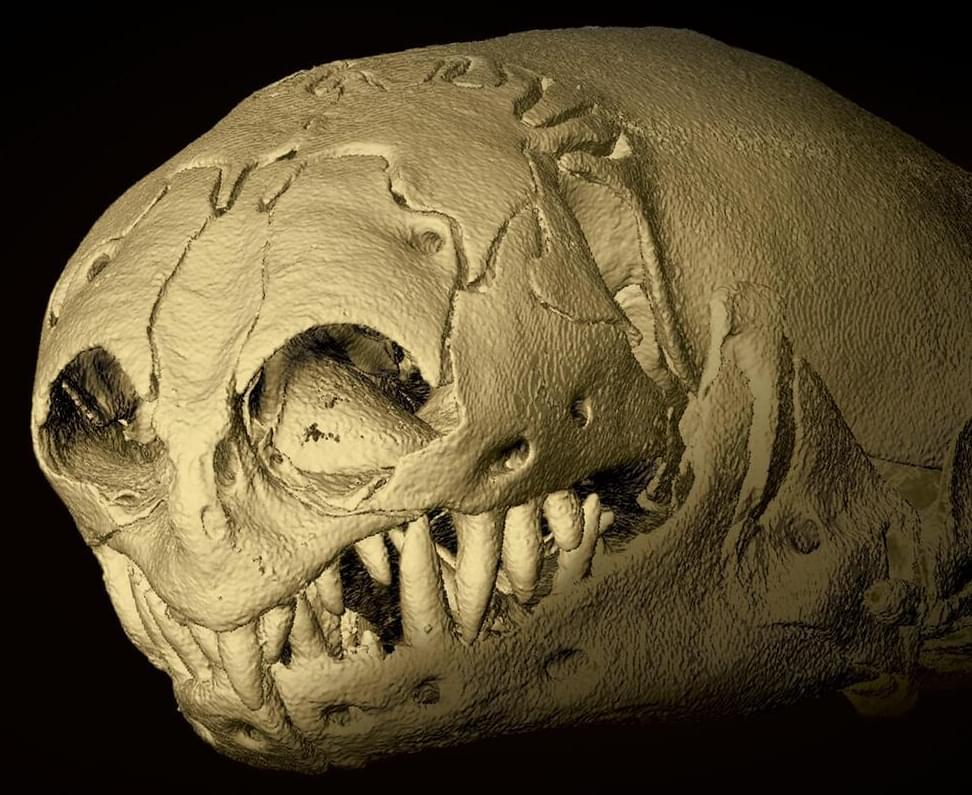

With skull parts that click together like puzzle pieces and a large central tooth, the real-life sandworm is stranger than fiction.

Amphisbaenians are strange creatures. Like worms with vertebrae, scales, a large central tooth, and sometimes small forearms, these reptiles live underground, burrowing tunnels and preying on just about anything they encounter, not unlike a miniature version of the monstrous sandworms from “Dune.”

Even though they’re found around much of the world, little is known about how amphisbaenians behave in the wild because they cannot be observed while in their natural habitat under sand and soil. But thanks to two papers published in the March issue of The Anatomical Record, new light is being shed on these animals and their specialized anatomy.

As data traffic grows, there is an urgent demand for smaller optical transmitters and receivers capable of handling complex multi-level modulation formats and achieving higher data transmission speeds.

In an important step toward fulfilling this requirement, researchers have developed a new compact indium phosphide (InP)-based coherent driver modulator (CDM) and shown that it can achieve a record-high baud rate and transmission capacity per wavelength compared to other CDMs.

CDMs are optical transmitters used in optical communication systems. They encode information onto light by modulating its amplitude and phase before the light is transmitted through an optical fiber.