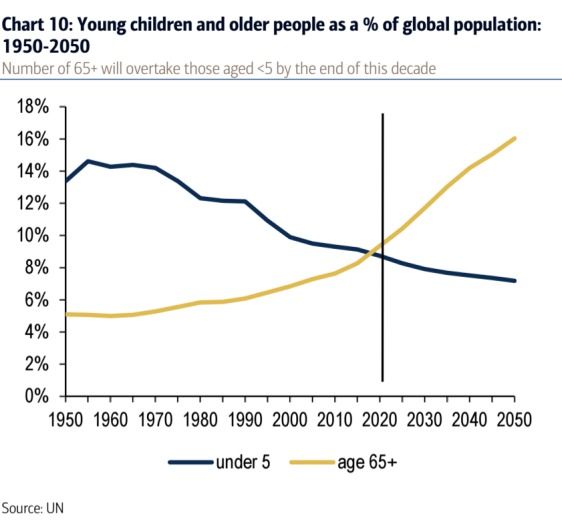

The world is about to see a mind-blowing demographic shift that will be a first in human history: There will soon be more elderly people than young children.

For some time now, demographers and economists have observed that the proportion of elderly adults around the world is rising, while the proportion of young children is falling.

But within a few years, just before 2020, adults aged 65 and over will begin to outnumber children under the age of 5 among the global population, according to a chart shared by a Bank of America Merrill Lynch team led by Beijia Ma, citing an earlier report from the US Census Bureau.