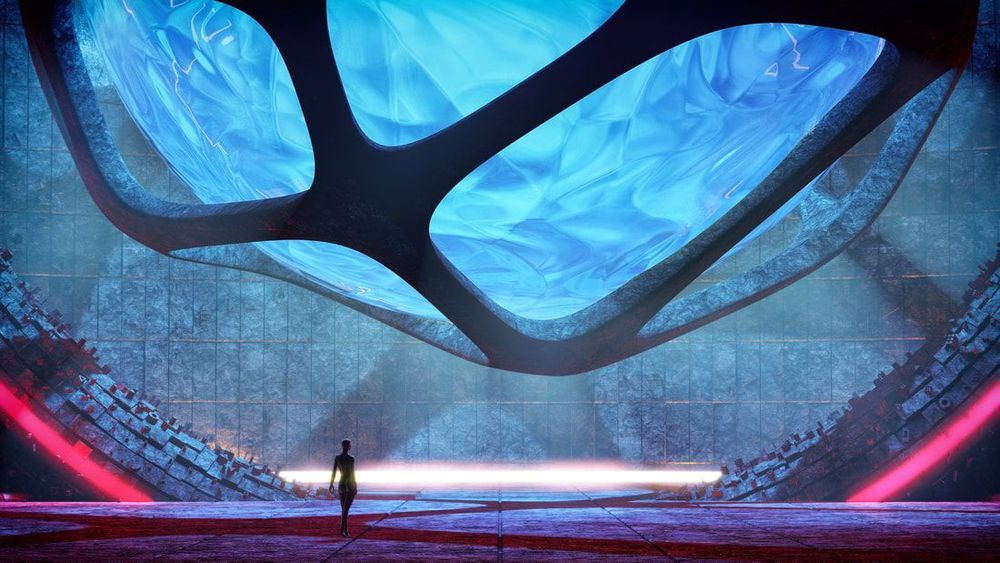

I used to think that we live in some sort of “cosmic jungle”, so the Zoo Hypothesis (akin to Star Trek’s Prime Directive) should be the correct explanation for the Fermi Paradox, right? I wouldn’t completely rule out this hypothesis, insofar as theorist Michio Kaku allegorically compares our earthly civilization to an “anthill” next to the “ten-lane superhighway” of a galactic-type civilization. Over time, however, I’ve come to realize that the physics of information holds the key to solving the Fermi Paradox: indications are that we most likely live in a “syntellect chrysalis” rather than a “cosmic jungle.”

Just like a tiny mustard seed in the soil, we’ll get to grow out of the ground, see “the light of day” and network by roots and pollen with others at the cosmic level of emergent complexity, as a civilizational superorganism endowed with its own advanced extradimensional consciousness. So, one day our Syntellect might “wake up” as some kind of newborn baby of the intergalactic family (or multiversal family, for that matter; that remains to be seen) within the newly perceived reality framework. Call it the Chrysalis Conjecture, if you’d like.*