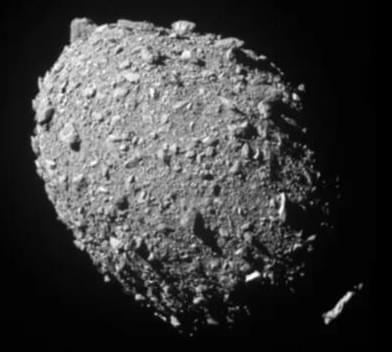

Dr. Shantanu Naidu: “When DART made impact, things got very interesting…the entire shape of the asteroid has changed, from a relatively symmetrical object to a ‘triaxial ellipsoid’ – something more like an oblong watermelon.”

On November 24, 2021, NASA launched the Double Asteroid Redirection Test (DART) mission to demonstrate that striking an incoming asteroid could deflect it and prevent it from hitting Earth. Just over ten months later, on September 26, 2022, the demonstration was successfully carried out when DART, acting as a kinetic impactor, intentionally struck the asteroid Dimorphos, which measures 560 feet (170 meters) in diameter.

But while the impact successfully altered Dimorphos’ orbit around Didymos, its companion in the binary near-Earth asteroid system, could it have altered other aspects of Dimorphos as well? This is the question addressed by a recent study published in The Planetary Science Journal, in which an international team of researchers led by the NASA Jet Propulsion Laboratory (JPL) found that the impact also altered the shape of Dimorphos.