Viral stowaways could be enhancing survival of algae, and even their evolution.

“We were in the middle of observing one night, with a new spectrograph that we had recently built, when we received a message from our colleagues about a peculiar object composed of a nebulous gas expanding rapidly away from a central star,” said Princeton University astronomer Guðmundur Stefánsson, a member of the team that discovered a mysterious object in 2004 using NASA’s space-based Galaxy Evolution Explorer (GALEX). “How did it form? What are the properties of the central star? We were immediately excited to help solve the mystery!”

A 16-Year-Old Mystery

NASA announced today that it has solved the 16-year-old mystery of the object, similar in size to a supernova remnant and unlike any they’d seen before in our Milky Way galaxy: a large, faint blue cloud of gas in space with a living star at its center. Subsequent observations revealed a thick ring structure within it, leading to the object being named the Blue Ring Nebula.

Viruses are tiny invaders that cause a wide range of diseases, from rabies to tomato spotted wilt and, most recently, COVID-19 in humans. But viruses can do more than elicit sickness, and not all viruses are tiny.

Large viruses, especially those in the nucleo-cytoplasmic large DNA virus family, can integrate their genome into that of their host — dramatically changing the genetic makeup of that organism. This family of DNA viruses, otherwise known as “giant” viruses, has been known within scientific circles for quite some time, but the extent to which they affect eukaryotic organisms has been shrouded in mystery — until now.

“Viruses play a central role in the evolution of life on Earth. One way that they shape the evolution of cellular life is through a process called endogenization, where they introduce new genomic material into their hosts. When a giant virus endogenizes into the genome of a host algae, it creates an enormous amount of raw material for evolution to work with,” said Frank Aylward, an assistant professor in the Department of Biological Sciences in the Virginia Tech College of Science and an affiliate of the Global Change Center housed in the Fralin Life Sciences Institute.

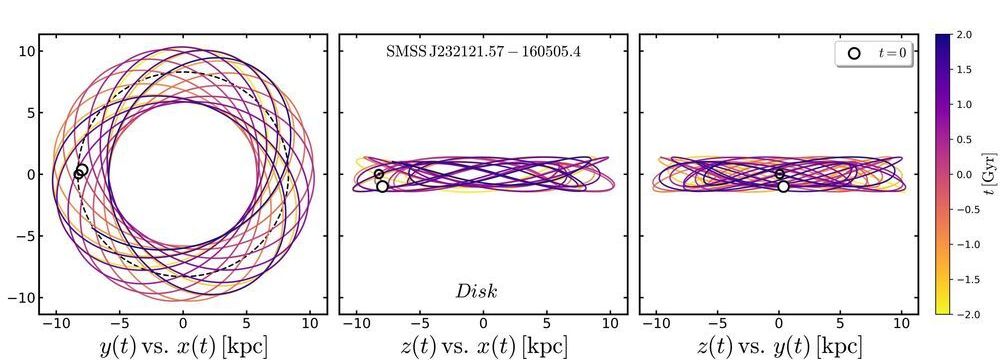

Theories on how the Milky Way formed are set to be rewritten following discoveries about the behavior of some of its oldest stars.

An investigation into the orbits of the Galaxy’s metal-poor stars—assumed to be among the most ancient in existence—has found that some of them travel in previously unpredicted patterns.

“Metal-poor stars—containing less than one-thousandth the amount of iron found in the Sun—are some of the rarest objects in the galaxy,” said Professor Gary Da Costa from Australia’s ARC Center of Excellence in All Sky Astrophysics in 3 Dimensions (ASTRO 3D) and the Australian National University.
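The iron threshold in the quote maps onto astronomers' standard bracket notation for metallicity. A short worked equation, assuming that "the amount of iron" means the iron-to-hydrogen abundance ratio measured relative to the Sun's (the usual convention, though the quote does not say so explicitly):

```latex
[\mathrm{Fe/H}] \equiv \log_{10}\!\left(\frac{N_{\mathrm{Fe}}}{N_{\mathrm{H}}}\right)_{\star} - \log_{10}\!\left(\frac{N_{\mathrm{Fe}}}{N_{\mathrm{H}}}\right)_{\odot},
\qquad
\frac{(N_{\mathrm{Fe}}/N_{\mathrm{H}})_{\star}}{(N_{\mathrm{Fe}}/N_{\mathrm{H}})_{\odot}} < 10^{-3}
\;\Longrightarrow\;
[\mathrm{Fe/H}] < \log_{10}\!\left(10^{-3}\right) = -3
```

Under that reading, a star with one-thousandth of the solar iron ratio has [Fe/H] = -3, and the stars described here sit below that value.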

When we apprehend reality as the entirety of everything that exists, including all dimensionality and all events and entities in their respective timelines, then by definition nothing exists outside of reality, not even “nothing.” It follows that the first cause of reality’s existence must lie within ontological reality itself, since there is nothing outside of it. This self-causation of reality is perhaps best understood in relation to the existence of your own mind. Self-simulated reality becomes self-evident when you consider that a phenomenal mind, which is a web of patterns, conceives a novel pattern and perceives it at the same time. Furthermore, the immanent natural God of Spinoza, or Absolute Consciousness, becomes intelligible by applying the scientific tool of extrapolation to the meta-systemic phenomenon of radical emergence and by treating consciousness as the primary ontological mover, the Source if you will, rather than a by-product of material interactions.


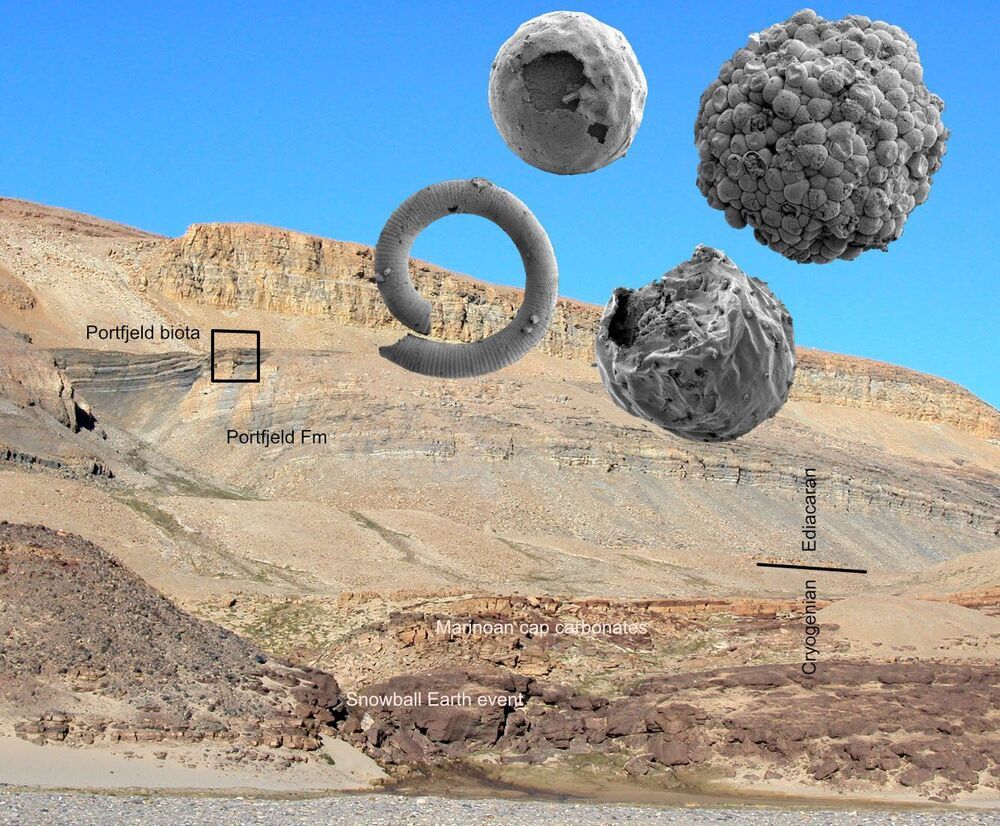

When and how did the first animals appear? Science has long sought an answer to this question. Uppsala University researchers and colleagues in Denmark have now jointly found, in Greenland, embryo-like microfossils up to 570 million years old, revealing that organisms of this type were dispersed throughout the world. The study is published in Communications Biology.

“We believe this discovery of ours improves our scope for understanding the period in Earth’s history when animals first appeared—and is likely to prompt many interesting discussions,” says Sebastian Willman, the study’s first author and a palaeontologist at Uppsala University.

The existence of animals on Earth around 540 million years ago (mya) is well substantiated: this was when the evolutionary event known as the “Cambrian Explosion” took place, and fossils of a huge number of Cambrian creatures, many of them shelled, survive. The first animals must have evolved earlier still, but there are divergent views in the research community on whether the extant fossils dating back to the Precambrian Era are genuinely classifiable as animals.

Some scientists believe that the ability of animals to store memory came from a virus that infected a common ancestor hundreds of millions of years ago. 😃

This study is radically changing how we view the process of evolution.

Dr. Frank Marks, Director of the Hurricane Research Division, National Oceanic and Atmospheric Administration (NOAA), discussing improved forecasting technologies.

Ira Pastor, ideaXme life sciences ambassador and founder of Bioquark, interviews Dr. Frank D. Marks, MS, ScD, Director of the Hurricane Research Division at NOAA.

Ira Pastor comments:

Weather and climate disasters affect the world’s population.

The approximate total cost of damages from weather and climate disasters in the U.S. alone from 1980 to 2019 is over $1.75 trillion, and a major component of that damage results from hurricanes.

The great, powerful guppy can essentially evolve up to 10 million times faster than the average rates seen in the fossil record, which could lead to humans evolving faster too, and ultimately to a biological singularity.

Although natural selection is often viewed as a slow pruning process, a dramatic new field study suggests it can sometimes shape a population as fast as a chain saw can rip through a sapling. Scientists have found that guppies moved to a predator-free environment adapted to it in a mere 4 years, a rate of change some 10,000 to 10 million times faster than the average rates gleaned from the fossil record. Some experts argue that the 11-year study, described in today’s issue of Science, may even shed light on evolutionary patterns that occur over eons.

A team led by evolutionary biologist David Reznick of the University of California, Riverside, scooped guppies from a waterfall pool brimming with predators in Trinidad’s Aripo River, then released them in a tributary where only one enemy species lurked. In as little as 4 years, male guppies in the predator-free tributary were already detectably larger and older at maturity when compared with the control population; 7 years later females were too. Guppies in the safer waters also lived longer and had fewer and bigger offspring.

The team next determined the rate of evolution for these genetic changes using a unit called the darwin, which expresses proportional change over time (one darwin corresponds to a change by a factor of e per million years). The guppies evolved at a rate between 3,700 and 45,000 darwins. For comparison, artificial-selection experiments on mice show rates of up to 200,000 darwins, while most rates measured in the fossil record are only 0.1 to 1.0 darwin. “It’s further proof that evolution can be very, very fast and dynamic,” says Philip Gingerich, a paleontologist at the University of Michigan, Ann Arbor. “It can happen on a time scale that’s as short as one generation, from us to our kids.”
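To make the darwin concrete: it is J. B. S. Haldane's unit of evolutionary rate, defined as a change in a trait's mean by a factor of e per million years, so a rate in darwins is the difference between the natural logs of the trait mean divided by the elapsed time in millions of years. The sketch below is a minimal illustration, assuming trait means measured on a positive ratio scale (such as body length); the function name `rate_in_darwins` and the example numbers are hypothetical and are not the study's data or its exact method.

```python
import math


def rate_in_darwins(initial_mean: float, final_mean: float, elapsed_years: float) -> float:
    """Return the magnitude of an evolutionary rate in darwins.

    One darwin is a change in a trait mean by a factor of e per million
    years, so the rate is |ln(final) - ln(initial)| divided by the elapsed
    time expressed in millions of years. (Hypothetical helper for illustration.)
    """
    if initial_mean <= 0 or final_mean <= 0:
        raise ValueError("trait means must be positive to take logarithms")
    if elapsed_years <= 0:
        raise ValueError("elapsed time must be positive")
    elapsed_myr = elapsed_years / 1_000_000  # convert years to millions of years
    return abs(math.log(final_mean) - math.log(initial_mean)) / elapsed_myr


# Hypothetical example: a trait mean grows from 10 mm to 12 mm in 4 years.
print(round(rate_in_darwins(10.0, 12.0, 4.0)))
```

With these made-up numbers, a 20% change over 4 years works out to roughly 45,600 darwins. Because the denominator is measured in millions of years, even modest changes seen over a few years translate into very large darwin values, which is why field studies like this one dwarf the 0.1 to 1.0 darwin typical of fossil sequences.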

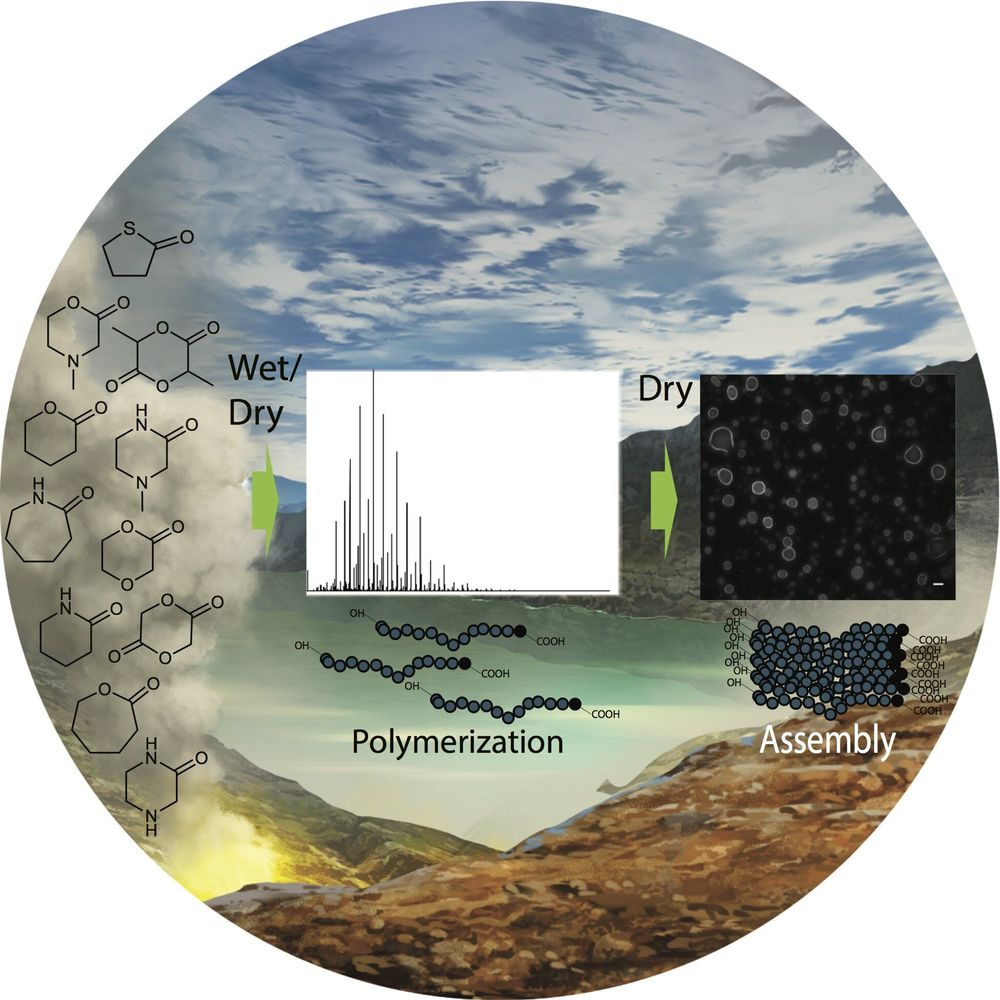

Chemists studying how life started often focus on how modern biopolymers like peptides and nucleic acids contributed, but modern biopolymers don’t form easily without help from living organisms. A possible solution to this paradox is that life started using different components, and many non-biological chemicals were likely abundant in the environment. A new survey conducted by an international team of chemists from the Earth-Life Science Institute (ELSI) at Tokyo Institute of Technology and other institutes from Malaysia, the Czech Republic, the U.S. and India, has found that a diverse set of such compounds easily form polymers under primitive environmental conditions, and some even spontaneously form cell-like structures.

Understanding how life started on Earth is one of the most challenging questions modern science seeks to explain. Scientists presently study modern organisms and try to see what aspects of their biochemistry are universal, and thus were probably present in the organisms from which they descended. The best guess is that life has thrived on Earth for at least 3.5 billion of Earth’s 4.5-billion-year history since the planet formed, and most scientists would say life likely began before there is good evidence for its existence. Problematically, since Earth’s surface is dynamic, the earliest traces of life on Earth have not been preserved in the geological record. However, the earliest evidence for life on Earth tells us little about what the earliest organisms were made of, or what was going on inside their cells. “There is clearly a lot left to learn from prebiotic chemistry about how life may have arisen,” says the study’s co-author Jim Cleaves.

A hallmark of life is evolution, and the mechanisms of evolution suggest that common traits can suddenly be displaced by rare and novel mutations that allow mutant organisms to survive better and proliferate, often replacing previously common organisms very rapidly. Paleontological, ecological and laboratory evidence suggests this occurs commonly and quickly. One example is an invasive organism such as the dandelion, which was introduced to the Americas from Europe and is now a common weed that lawn-conscious homeowners spend countless hours and dollars trying to eradicate.
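How quickly a rare, advantageous variant can displace a common one can be illustrated with the textbook deterministic model of haploid selection. This is a minimal sketch under stated assumptions: a single mutant type with a constant relative fitness advantage `s`, non-overlapping generations, and a population large enough that random drift can be ignored. The function names and parameter values are hypothetical and are not taken from the study.

```python
def next_frequency(p: float, s: float) -> float:
    """Advance a mutant's frequency by one generation of haploid selection.

    The mutant has relative fitness 1 + s and the resident type has fitness 1;
    each type's share is weighted by its fitness and renormalised by the
    population's mean fitness.
    """
    mean_fitness = p * (1.0 + s) + (1.0 - p)
    return p * (1.0 + s) / mean_fitness


def generations_to_reach(p0: float, s: float, target: float,
                         max_generations: int = 1_000_000) -> int:
    """Count generations until the mutant frequency first reaches `target`."""
    p = p0
    for generation in range(max_generations + 1):
        if p >= target:
            return generation
        p = next_frequency(p, s)
    raise ValueError("target not reached; check that s > 0 and 0 < p0 < target < 1")


# Hypothetical example: a mutant starting at 0.1% of the population with a
# 10% fitness advantage.
print(generations_to_reach(0.001, 0.1, 0.99))
```

In this model the odds p / (1 - p) grow by a factor of 1 + s every generation, so the mutant goes from 1 in 1,000 to 99% of the population in about 120 generations. The climb is slow while the mutant is rare and then accelerates sharply, which matches the "sudden" replacement described above.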