It is a long-standing theory in evolutionary ecology: animal species on islands tend to become either giants or dwarfs compared with their mainland relatives. Since its formulation in the 1960s, however, the ‘island rule’ has been hotly debated by scientists. In a new publication in Nature Ecology and Evolution on April 15, 2021, researchers resolved this debate by analyzing thousands of vertebrate species. They show that island rule effects are widespread in mammals, birds, and reptiles, but less evident in amphibians.

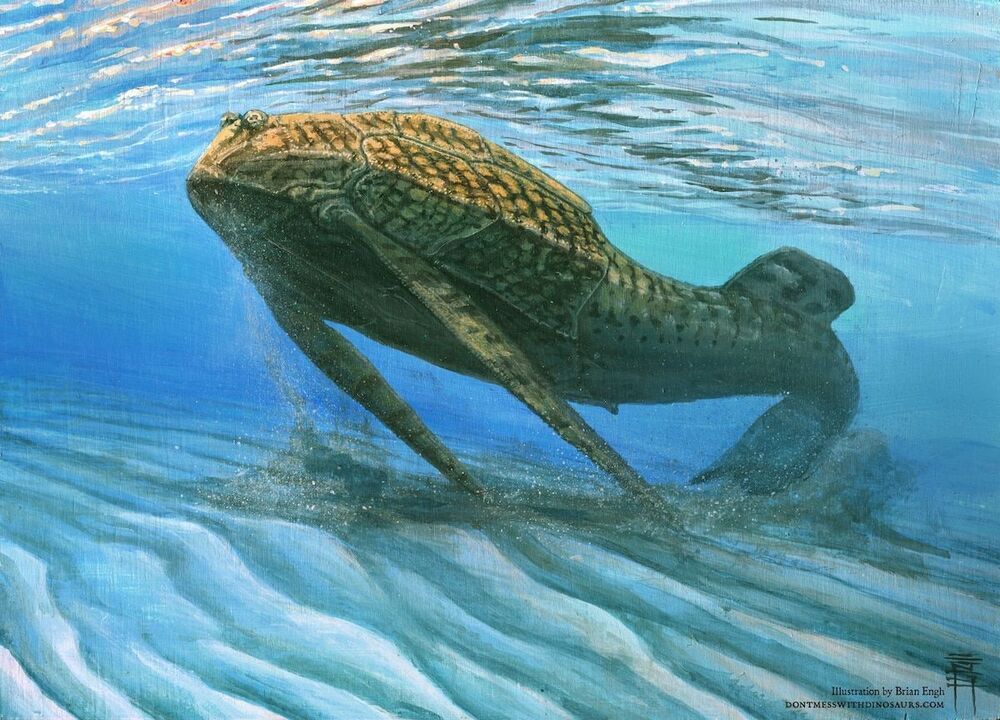

Dwarf hippos and elephants on Mediterranean islands are examples of large species that evolved toward dwarfism. Conversely, small mainland species may have evolved into giants after colonizing islands, giving rise to such oddities as the St Kilda field mouse (twice the size of its mainland ancestor), the famous dodo of Mauritius (a giant pigeon), and the Komodo dragon.

In 1973, Leigh Van Valen was the first to formulate the theory, building on a 1964 study by mammalogist J. Bristol Foster, that animal species follow an evolutionary pattern in their body size: species on islands tend to become either giants or dwarfs compared with their mainland relatives. “Species are limited to the environment on an island. The level of threat from predatory animals is much lower or non-existent,” says Ana Benítez-Lopez, who carried out the research at Radboud University and is now a researcher at Doñana Biological Station (EBD-CSIC, Spain). “But also limited resources are available.” Until now, however, studies had produced conflicting results, which fueled a heated debate about the theory: is it a real pattern, or just an evolutionary coincidence?