The original article is in Portuguese, so unless you speak it you will need Chrome or another browser that translates to English. It is a fascinating read about artificial uteruses in a possible future, brought in to bring peace to the abortion debate (or not) and as a safety measure for an apocalyptic event. This was shared by Zoltan (I think that’s his name), a transhumanist who at one time was hoping to be the first transhumanist elected president and to base decisions on science, or something like that. It’s been a while, but he wanted equality and ethics through science and transhumanist goals.

The artificial womb is coming, for better and for worse. Radical feminists are already fighting for the right to kill their fetuses.

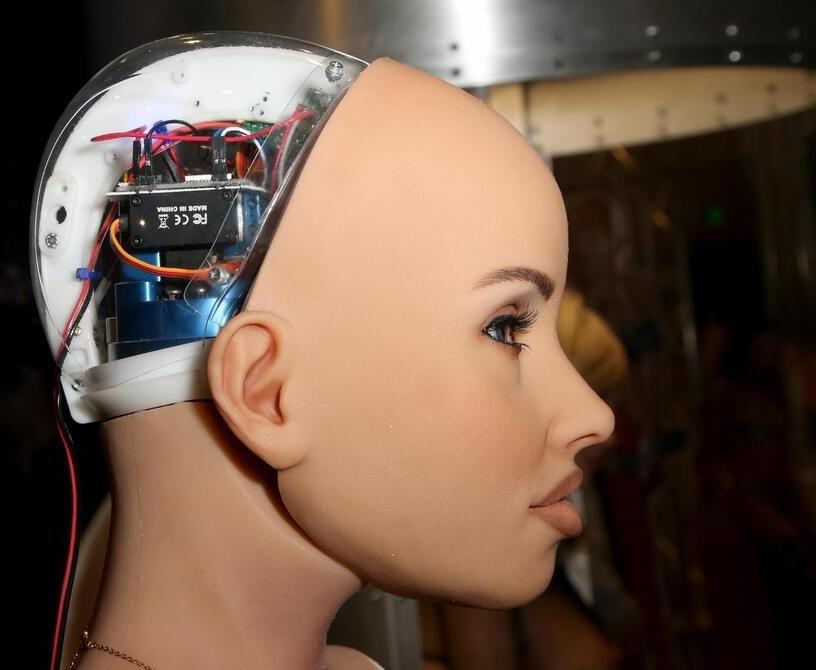

“Ectogenesis” comes from Greek and means “birth on the outside.” It refers to the possibility of gestating a child outside a woman’s body. The term was coined by the British scientist J. B. S. Haldane and will turn one hundred next year. But the possibility was already imagined by alchemists in the 16th century.

The writer Aldous Huxley had foreseen, in 1931, in his book Brave New World, a society in which ovaries would be surgically removed from women and cultivated in artificial receptacles. That new world, brave or not, is becoming reality. The possibility of ectogenesis has accelerated in recent months with the creation of autonomous artificial wombs, already in the final phase of testing at several institutes and universities at once. It is a matter of time (and money) before the technology reaches the market.