New research demonstrates that quantum dots solve a key issue with current 3D printing materials. I spoke with Keroles Riad, a PhD student at Concordia University in Montreal, Quebec, Canada, about his thesis on the photostability of materials used for stereolithography 3D printing. The research was supervised by Prof. Paula Wood-Adams and Prof. Rolf Wuthrich of the Mechanical and Industrial Engineering department at Concordia, and Prof. Jerome Claverie of the Chemistry department at the University of Quebec in Montreal.

While quantum dots have been shown to cure acrylics, Riad says this work is the first demonstration of the process in epoxy resin.

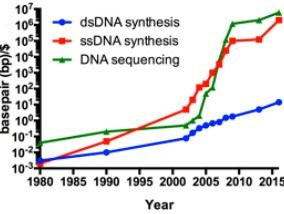

3D printing is often richly rewarding because it spans multiple disciplines. Here we look at a new thesis that advances the critical area of materials. The approach draws on engineering, chemistry and physics to overcome the stability problem found in current stereolithography processes. The results could form the basis of superior materials and lead to wider use of 3D printing.