With the potential to image fast-moving objects.

When asked what superpower they would most like to have, many people say the ability to see through things. Now, a new camera may give people that gift.

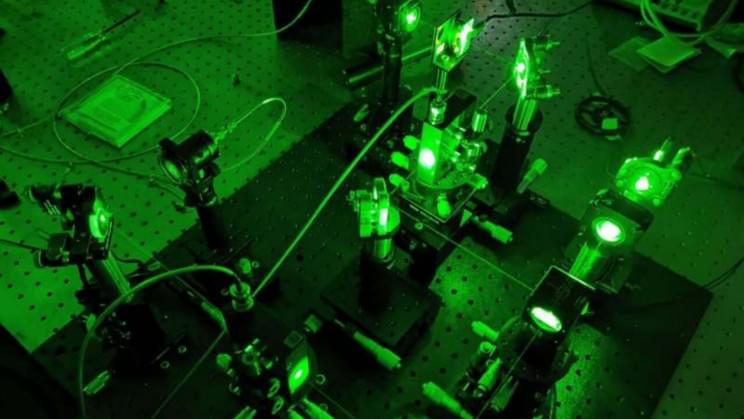

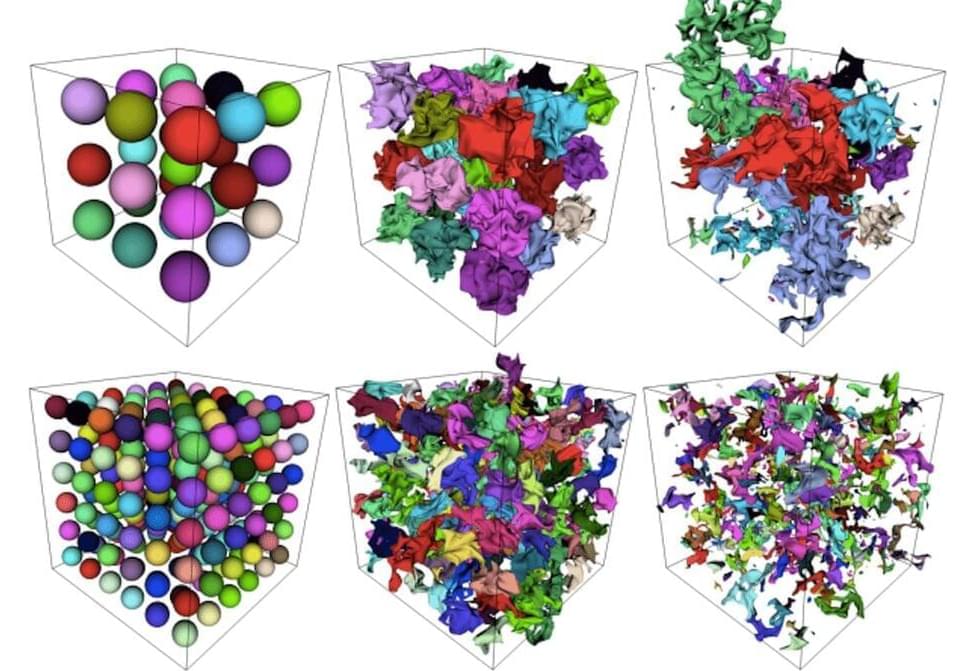

Developed by Northwestern Engineering researchers, the new high-resolution camera can see around corners and through human skin and even bones. It also has the potential to image fast-moving objects such as speeding cars or even a beating heart.

The camera comes out of a relatively new research field called non-line-of-sight (NLoS) imaging, and its resolution is high enough that it could even capture the tiniest capillaries at work.

“Our technology will usher in a new wave of imaging capabilities,” said Florian Willomitzer of Northwestern's McCormick School of Engineering, first author of the study, in a statement.

“Our current sensor prototypes use visible or infrared light, but the principle is universal and could be extended to other wavelengths. For example, the same method could be applied to radio waves for space exploration or underwater acoustic imaging. It can be applied to many areas, and we have only scratched the surface.”
