We now make computers small enough to inject into our bodies.

Smart Dust Is Coming: New Camera Is the Size of a Grain of Salt

Miniaturization is one of the most world-shaking trends of the last several decades. Computer chips now have features measured in billionths of a meter. Sensors that once weighed kilograms fit inside your smartphone. But it doesn’t end there.

Researchers are aiming to take sensors smaller—much smaller.

In a new University of Stuttgart paper published in Nature Photonics, scientists describe tiny 3D-printed lenses and show how they can take super sharp images. Each lens is 120 millionths of a meter in diameter, roughly the size of a grain of table salt, and because the lenses are 3D printed in one piece, complexity is no barrier. Any lens configuration that can be designed on a computer can be printed and used.

How Amrita University advanced neurological disorders’ prediction using GPUs

Excellent start in using GPUs for mapping and predictive analysis of brain functioning and reactions; medical and tech researchers and engineers across the board should find this interesting.

MIS Asia offers Information Technology strategy insight for senior IT management — resources to understand and leverage information technology from a business leadership perspective.

Research may lead to more durable electronic devices such as cellphones

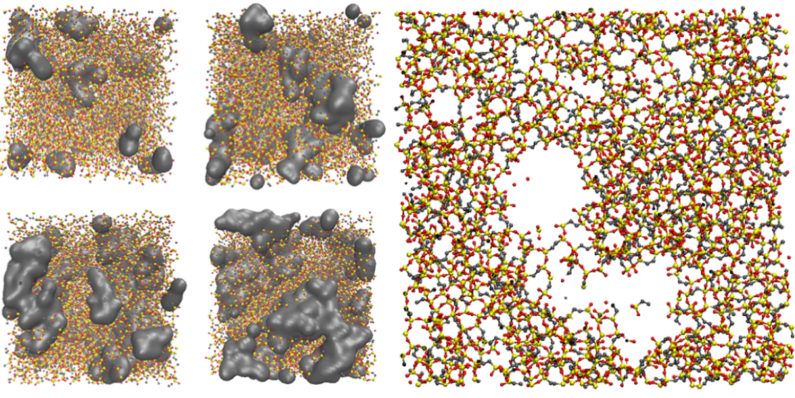

Deep inside the electronic devices that proliferate in our world, from cell phones to solar cells, layer upon layer of almost unimaginably small transistors and delicate circuitry shuttle all-important electrons back and forth.

It is now possible to cram 6 million or more transistors into a single layer of these chips. Designers include layers of glassy materials between the electronics to insulate and protect these delicate components against the continual push and pull of heating and cooling that often causes them to fail.

A paper published today in the journal Nature Materials reshapes our understanding of the materials in those important protective layers. In the study, Stanford’s Reinhold Dauskardt, a professor of materials science and engineering, and doctoral candidate Joseph Burg reveal that those glassy materials respond very differently to compression than they do to the tension of bending and stretching. The findings overturn conventional understanding and could have a lasting impact on the structure and reliability of the myriad devices that people depend upon every day.

DARPA approaches industry for new battlefield network algorithms and network protocols

Very nice.

ARLINGTON, Va., 27 June 2016. U.S. military researchers are asking industry for new algorithms and protocols for large, mission-aware computer, communications, and battlefield network systems that are physically dispersed over large forward-deployed areas.

Officials of the U.S. Defense Advanced Research Projects Agency (DARPA) in Arlington, Va., issued a broad agency announcement on Friday (DARPA-BAA-16-41) for the Dispersed Computing project, which seeks to boost application and network performance of dispersed computing architectures by orders of magnitude with new algorithms and protocol stacks.

Examples of such architectures include network elements, radios, smartphones, or sensors with programmable execution environments, as well as portable micro-clouds of different form factors.
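The announcement does not spell out which algorithms DARPA wants. The sketch below is a rough illustration of the kind of decision a dispersed-computing scheduler faces. It is a minimal greedy placement in Python. Each task goes to whichever node (a radio near the data, say, or a portable micro-cloud) is estimated to finish it first, counting both the time to ship the task's input and the time to compute it. The `Node` fields, the cost model, and all numbers are hypothetical assumptions, not anything taken from DARPA-BAA-16-41.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical model of one dispersed compute element."""
    name: str
    ops_per_sec: float          # assumed compute rate of the node
    link_bytes_per_sec: float   # assumed bandwidth from the data source to this node
    queued_ops: float = 0.0     # work already assigned to the node


def completion_time(node: Node, input_bytes: float, ops: float) -> float:
    """Estimated finish time: ship the input, then run queued work plus this task."""
    transfer = input_bytes / node.link_bytes_per_sec
    compute = (node.queued_ops + ops) / node.ops_per_sec
    return transfer + compute


def place(tasks, nodes):
    """Greedily assign each (name, input_bytes, ops) task to the earliest-finishing node."""
    plan = {}
    for task_name, input_bytes, ops in tasks:
        best = min(nodes, key=lambda n: completion_time(n, input_bytes, ops))
        best.queued_ops += ops  # later tasks see this node as busier
        plan[task_name] = best.name
    return plan


nodes = [
    Node("radio", ops_per_sec=1e8, link_bytes_per_sec=1e9),         # near the data, slow CPU
    Node("micro-cloud", ops_per_sec=1e10, link_bytes_per_sec=1e6),  # fast CPU, thin link
]
tasks = [("filter-video", 5e7, 1e7), ("classify", 1e3, 1e9)]
plan = place(tasks, nodes)
print(plan)  # {'filter-video': 'radio', 'classify': 'micro-cloud'}
```

The data-heavy task stays on the radio next to the data, and the compute-heavy task travels to the micro-cloud. A real mission-aware system would also have to weigh link failures, priorities, and changing topology, which this greedy rule ignores.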

The future of storage may be in DNA

I have definitely been seeing great research and success in biocomputing, which is why I have been looking more and more at this area of the industry. Bio/medical technology is our ultimate future state on the way to the singularity. It is the key to the enhancements we need to defeat cancer and aging, enhance intelligence, and more; we have already seen early hints of what it can do for people, machines and data, the environment, and resources. A word of caution, however: DNA ownership and security. We will need proper governance and oversight in this space.

How much storage do you have around the house? A few terabyte hard drives? What about USB sticks and old SATA drives? Humanity uses a staggering amount of storage, and our needs are only expanding as we build data centers, better cameras, and all sorts of other data-heavy gizmos. It’s a problem scientists from companies like IBM, Intel, and Microsoft are trying to solve, and the solution might be in our DNA.

A recent Spectrum article takes a look at the quest to unlock the storage potential of DNA. DNA molecules carry the genetic information that builds living things, written in four chemical bases: A, C, G, and T. The theory is that we can convert digital files into biological material by translating them from binary code into genetic code. That’s right: the future of storage could be test tubes.
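The simplest way to picture "binary code into genetic code" is to note that four bases can hold two bits each. The Python sketch below uses a hypothetical fixed mapping (00→A, 01→C, 10→G, 11→T), chosen only for illustration and not the scheme used by any of the companies mentioned. With it, every byte becomes four bases and the process can be reversed exactly.

```python
# Hypothetical 2-bits-per-base code, for illustration only.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a DNA base string, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Invert encode(): every four bases become one byte again."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

Practical DNA storage schemes typically go further than this naive mapping. They avoid long runs of a single base, which are hard to synthesize and sequence reliably, and they add error-correcting redundancy.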

In April, representatives from IBM, Intel, Microsoft, and Twist Bioscience met with computer scientists and geneticists for a closed-door session to discuss the issue. The event was cosponsored by the U.S. Intelligence Advanced Research Projects Activity (IARPA), which reportedly may be interested in helping fund a “DNA hard drive.”

New, better way to build circuits for world’s first useful quantum computers

We’re on a roll with QC.

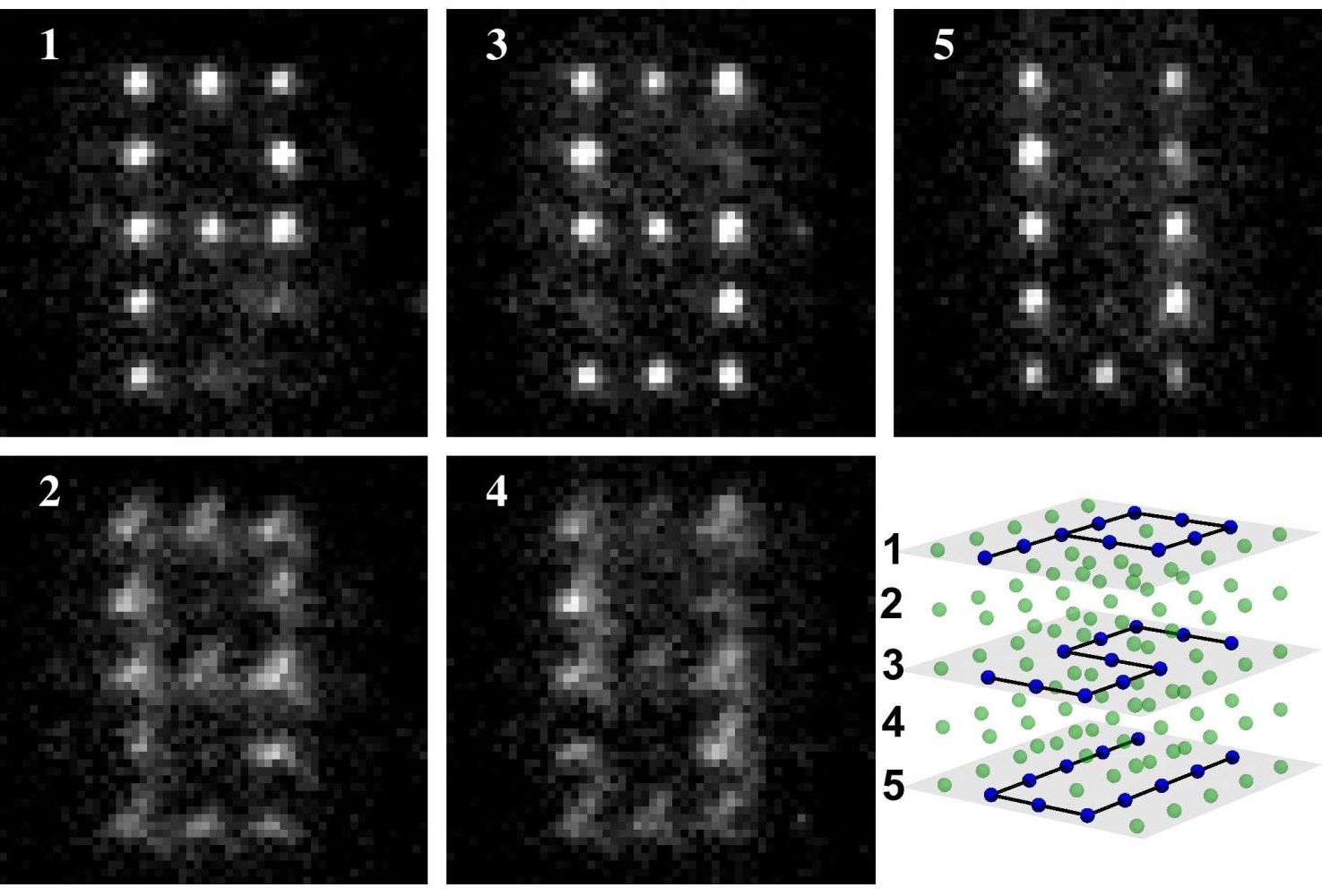

The era of quantum computers is one step closer as a result of research published in the current issue of the journal Science. The research team has devised and demonstrated a new way to pack a lot more quantum computing power into a much smaller space and with much greater control than ever before. The advance, which uses a three-dimensional array of atoms in quantum states called quantum bits (qubits), was made by David S. Weiss, professor of physics at Penn State University, and three students on his lab team. He said, “Our result is one of the many important developments that still are needed on the way to achieving quantum computers that will be useful for doing computations that are impossible to do today, with applications in cryptography for electronic data security and other computing-intensive fields.”

The new technique uses both laser light and microwaves to precisely control the switching of selected individual qubits from one quantum state to another without altering the states of the other atoms in the cubic array. It also demonstrates the potential use of atoms as the building blocks of circuits in future quantum computers.
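To see what "switching a selected qubit without altering the others" means mathematically, here is a minimal state-vector sketch in Python. It assumes an idealized model: the lasers single out one atom, the microwave pulse rotates only that atom's qubit, and every other atom is treated as perfectly unaffected. Real experiments only approximate this. The cube is shrunk to 2 × 2 × 2 atoms so the full state fits in 2⁸ amplitudes. The function names and the site-to-index mapping are hypothetical, and this is not the Penn State group's own code.

```python
import math


def rx(theta):
    """Single-qubit rotation about x; theta = pi fully flips |0> to |1> (a 'pi pulse')."""
    c = math.cos(theta / 2)
    s = -1j * math.sin(theta / 2)
    return ((c, s), (s, c))


def apply_to_qubit(state, gate, k):
    """Apply a 2x2 gate to qubit k of a state vector; all other qubits are untouched."""
    new = list(state)
    mask = 1 << k
    for i in range(len(state)):
        if i & mask == 0:  # visit each (bit k = 0, bit k = 1) amplitude pair once
            j = i | mask
            a, b = state[i], state[j]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[j] = gate[1][0] * a + gate[1][1] * b
    return new


def site_index(x, y, z, side):
    """Hypothetical mapping from a lattice site in a side^3 cube to a qubit index."""
    return x + side * (y + side * z)


def address(state, site, side, theta=math.pi):
    """Idealized addressing: rotate only the atom at `site` (assumption: no crosstalk)."""
    return apply_to_qubit(state, rx(theta), site_index(*site, side))


side = 2                      # 2 x 2 x 2 = 8 atoms
n = side ** 3
state = [0j] * (2 ** n)
state[0] = 1 + 0j             # every atom starts in |0>
state = address(state, (1, 0, 1), side)  # flip only the atom at (1, 0, 1)
probs = [abs(a) ** 2 for a in state]
target = 1 << site_index(1, 0, 1, side)
print(round(probs[target], 6))  # 1.0
```

After the pulse, all of the probability sits in the basis state where only the chosen atom has flipped. That is the "selected individual qubits" behavior the article describes, here reduced to its simplest form.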

The scientists invented an innovative way to arrange and precisely control the qubits, which are necessary for doing calculations in a quantum computer. “Our paper demonstrates that this novel approach is a precise, accurate, and efficient way to control large ensembles of qubits for quantum computing,” Weiss said.
