Fujitsu retrofitted one of its clean rooms into a vertical farm. The project was so successful that the company discovered it could enter a new market segment and sell the systems themselves. I definitely want one.

Like the giant monolith in Stanley Kubrick’s 2001, this new head of lettuce is simultaneously a product of this factory’s past and its future. Fujitsu is a space-age R&D innovator with sprawling, specialized factories. But several of its facilities, including this one, went dark when the company tightened its belt and reorganized its product lines after the 2008 global financial crisis. Now, in the aftermath, it has retrofitted this facility to serve tomorrow’s vegetable consumers, who will pay for a better-than-organic product and who enjoy a bowl of iceberg more if they know it was monitored by thousands of little sensors.

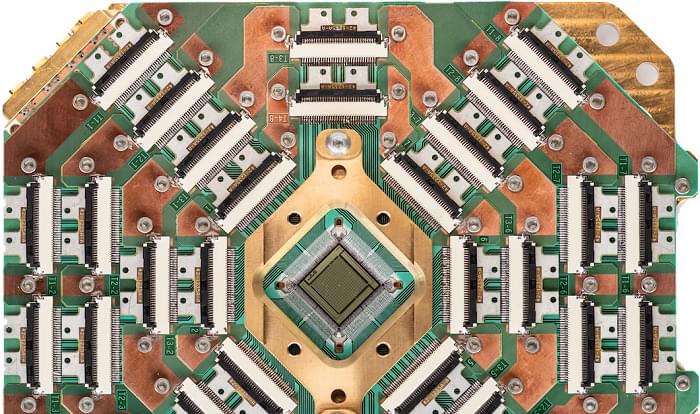

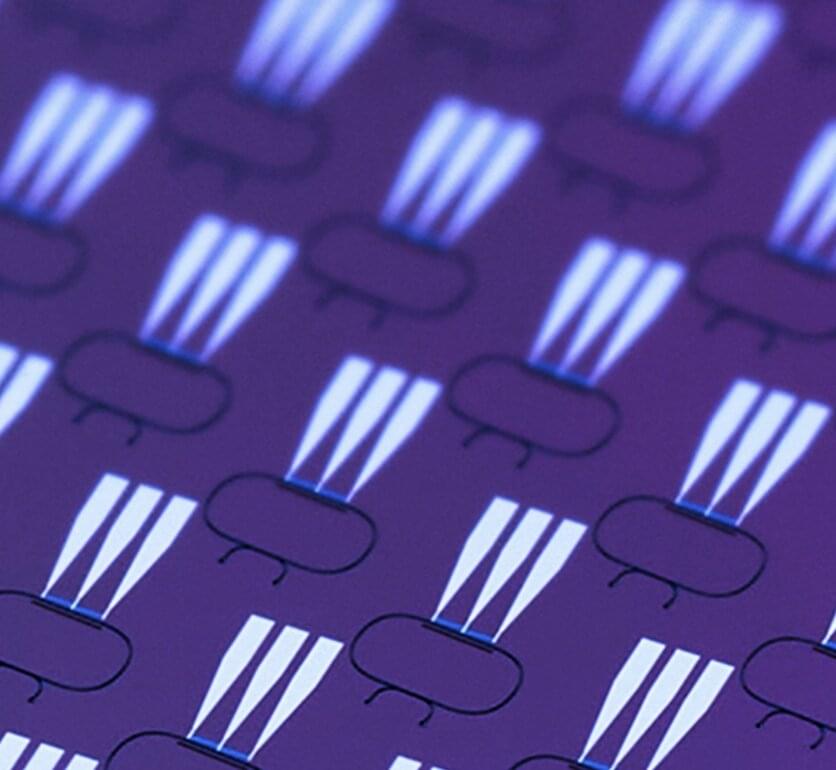

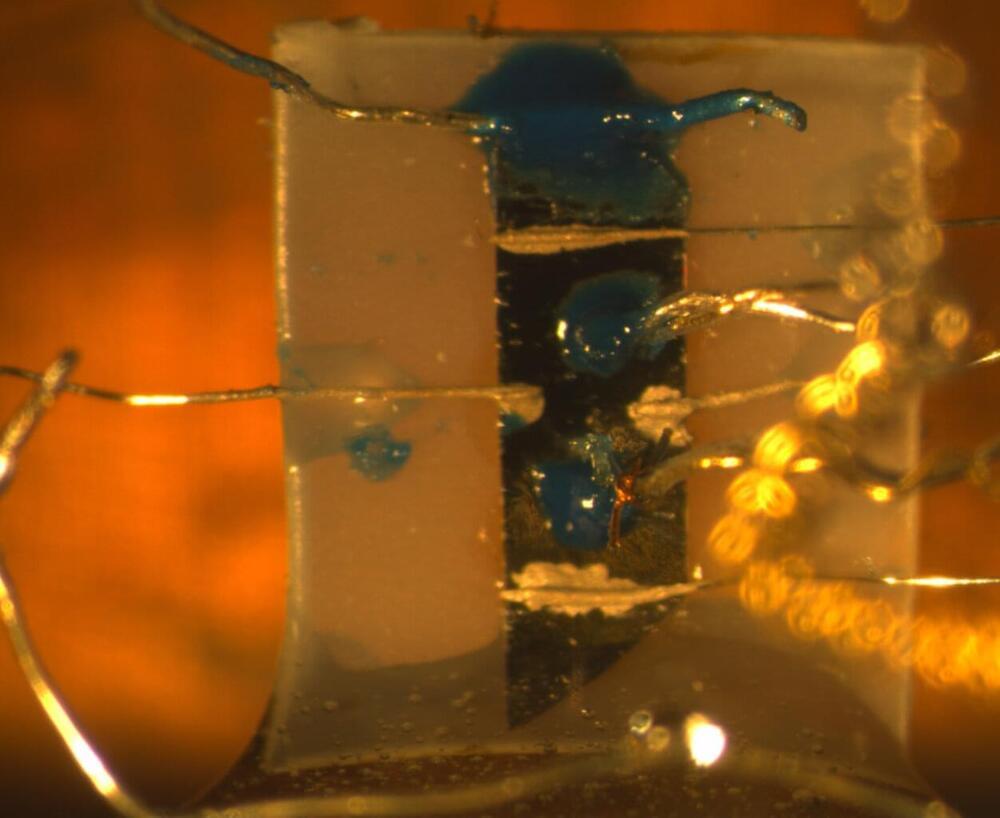

A year into the project, Fujitsu is now producing between 2,500 and 3,000 heads of lettuce a day that sell for three times the normal price. The company is using its hydroponic lettuce farm to showcase its “smart” farming technologies, in the hope of nurturing a new agribusiness.

The project is the outgrowth of a company-wide reorganization following the 2008 financial crisis, after which Fujitsu decreased its number of product lines from nine to six. Originally built in 1967, the building where the company is now growing lettuce was once the largest transistor factory in the world. Over the years, Fujitsu expanded, buying up three other buildings and the remainder of the industrial park, bringing its total footprint in the area to roughly 260,000 square meters.