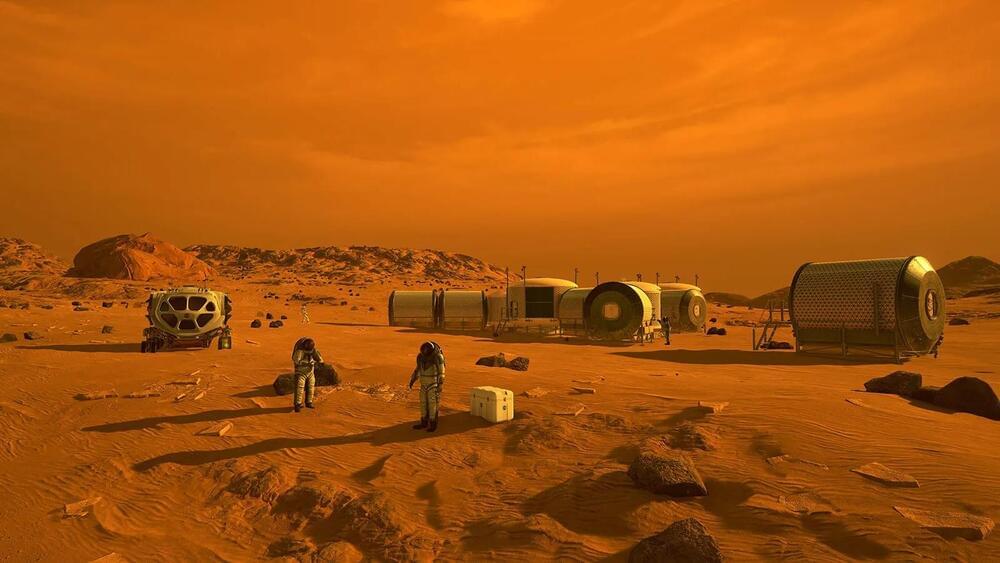

“This breakthrough enhances astronaut safety and makes long-term Mars missions a more realistic possibility,” said Dr. Dimitra Atri.

How will future Mars astronauts shield themselves from harmful space radiation? A recent study published in The European Physical Journal Plus takes up this question, as a pair of international researchers investigated which materials could provide the necessary shielding against the solar and cosmic rays that could harm future Mars astronauts. The study could help scientists and engineers better understand the mitigation measures needed to protect astronauts during long-term space missions.

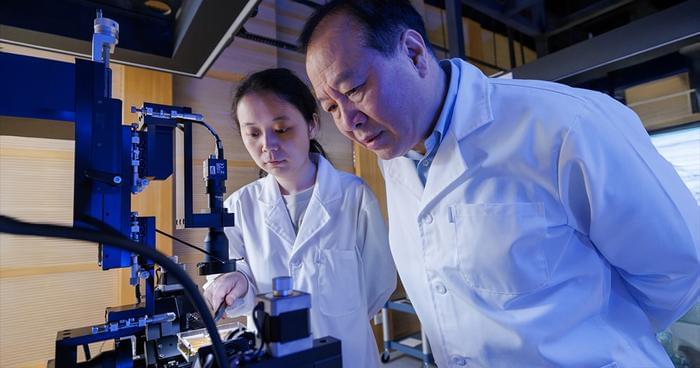

For the study, the researchers used computer simulations to recreate conditions on Mars, where surface temperatures and pressures are much lower than Earth’s and where there is no protective magnetic field like the one that shields our planet from space radiation. Under these simulated conditions, the researchers tested a variety of materials to determine how effectively each would shield astronauts from space radiation.

In the end, they found that synthetic fibers, rubber, and plastics provided the best shielding. The team also found that Martian regolith (commonly called Martian “soil”) and aluminum, when combined with other materials, could be effective for shielding as well.