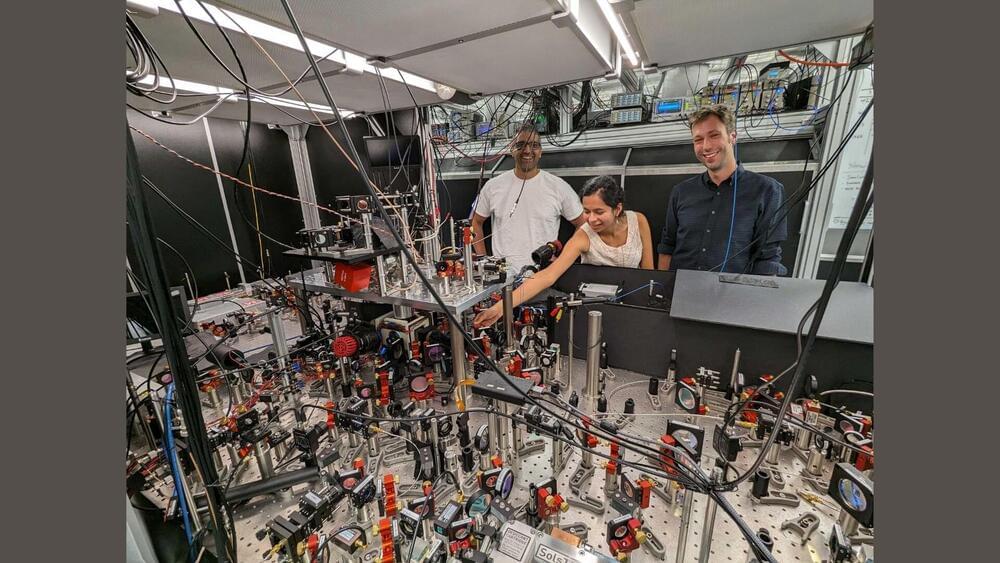

Engineers, physicists, computer scientists and more are needed for the second quantum revolution.

7 Linux distributions that feel just like Windows.

I often think of Windows 10 as “Windows 8.1 done right”, and Windows 11 as a natural evolution of that refinement, with plenty of improvements under the hood.

However, Windows is still a closed, commercial platform, so many users who are concerned about privacy or dissatisfied with Windows 11 may keep looking for alternative operating systems that offer more control while providing an experience similar to the Windows GUI.

In this article, we’ve picked the Linux distributions that offer the best possible Windows-like desktop experience. Whether you’re transitioning from Windows or just prefer a similar look and feel, these distros are designed to make the switch easy and seamless.

Biological components are less reliable than electrical ones, and incoming signals do not arrive instantaneously but with a variety of delays. The brain copes with these delays by having each neuron integrate its incoming signals over time and fire only afterwards, and by relying on a population of neurons, rather than a single one, to compensate for cells that temporarily fail to fire.
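The two coping strategies described above can be illustrated with a toy model. The sketch below is not a biophysical neuron model; the function names, the fixed integration window, the spike-count threshold, and the quorum rule are all assumptions chosen only to make the idea concrete.

```python
def integrate_and_fire(arrival_times, window, threshold):
    """Toy neuron: integrate delayed input spikes over a time window.

    Counts the spikes that arrive within `window` time units of the
    first arrival. If the count reaches `threshold`, the neuron fires
    at the end of the window; otherwise it stays silent (returns None).
    """
    if not arrival_times:
        return None  # no input at all: this neuron does not fire
    start = min(arrival_times)
    count = sum(1 for t in arrival_times if t - start <= window)
    return start + window if count >= threshold else None


def population_fires(per_neuron_arrivals, window, threshold, quorum):
    """Toy population readout: True if at least `quorum` neurons fire.

    Using several neurons lets the population respond even when some
    individual cells temporarily fail to fire.
    """
    fired = [
        integrate_and_fire(arrivals, window, threshold) is not None
        for arrivals in per_neuron_arrivals
    ]
    return sum(fired) >= quorum


if __name__ == "__main__":
    # Three neurons receive the same signal with different delays;
    # the second neuron receives nothing and stays silent.
    inputs = [[1.0, 1.5], [], [2.0, 2.2]]
    print(population_fires(inputs, window=1.0, threshold=2, quorum=2))
```

In this toy setup the population still responds although one of its three neurons is silent, which is the point of using a population rather than a single cell.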

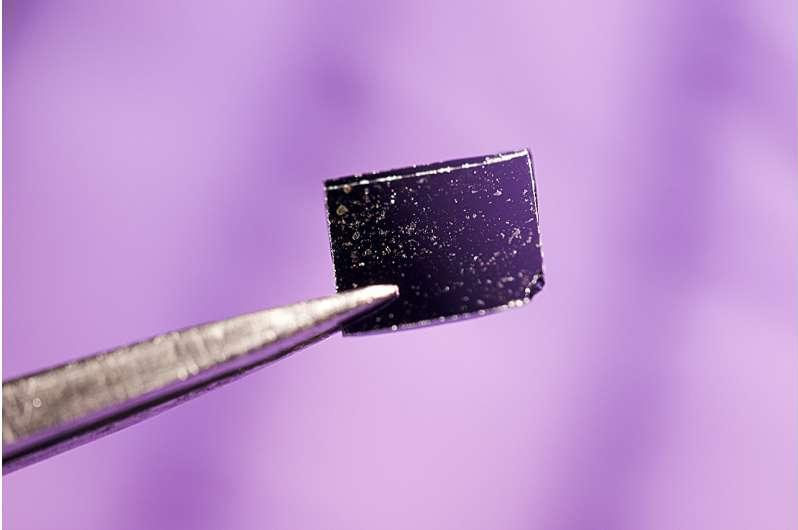

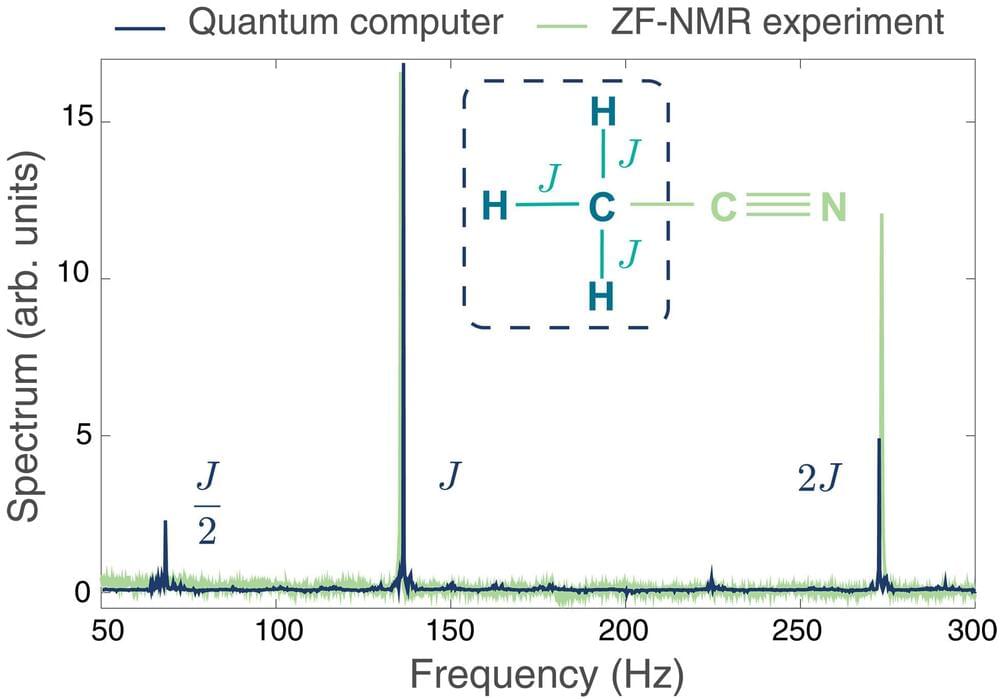

Programmable quantum computers have the potential to efficiently simulate increasingly complex molecular structures, electronic structures, chemical reactions, and quantum mechanical states in chemistry that classical computers cannot. As the molecule’s size and complexity increase, so do the computational resources required to model it.
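To give a rough sense of how quickly the classical cost grows, the sketch below estimates the memory needed to store the full state vector of a system of n two-level quantum systems (qubits) by brute force. It assumes one 16-byte complex number per amplitude; the function name is illustrative, and practical classical chemistry methods use approximations that avoid storing the full state.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n complex amplitudes of n qubits.

    Assumes double-precision complex numbers (16 bytes per amplitude).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (10, 30, 50):
        gib = statevector_bytes(n) / 2**30
        print(f"{n} qubits: {gib:,.6f} GiB")
```

Each additional qubit doubles the storage requirement, so 30 qubits already need 16 GiB under these assumptions.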

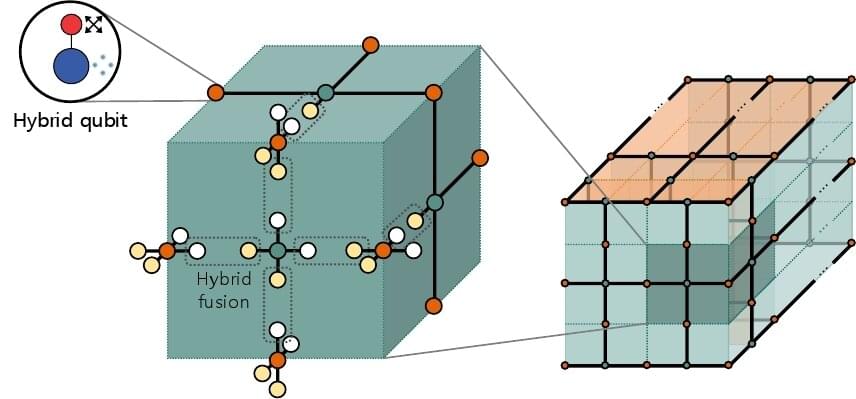

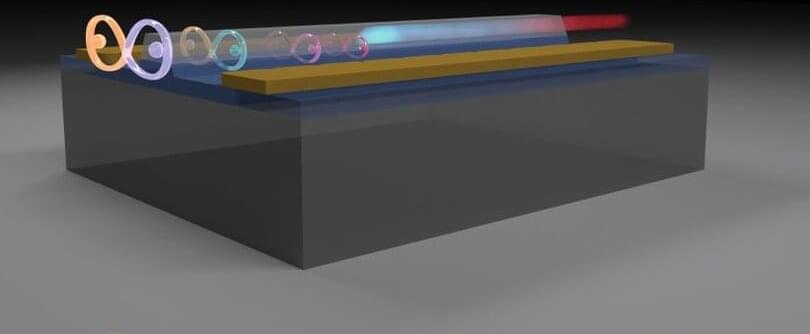

To achieve high performance, quantum computing systems based on multiple qubits must attain high-fidelity entanglement between their underlying qubits. Past studies have shown that solid-state quantum platforms (quantum computing systems based on solid materials) are highly prone to errors, which can degrade the coherence between qubits and their overall performance.