Scientists have vastly reduced the temperatures and conditions needed to grow special diamonds for computing, making faster and more efficient computing chips a more realistic proposition.

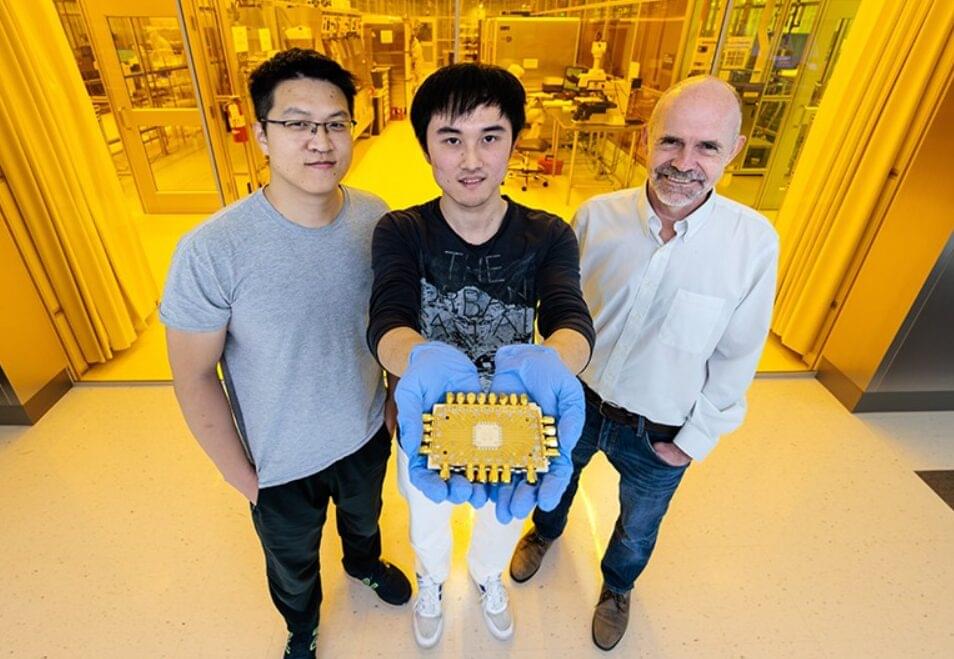

New research demonstrates a brand-new architecture for scaling up superconducting quantum devices. Researchers at the UChicago Pritzker School of Molecular Engineering (UChicago PME) have realized a new design for a superconducting quantum processor, aiming at a potential architecture for the large-scale, durable devices the quantum revolution demands.

Unlike the typical quantum chip design that lays the information-processing qubits onto a 2-D grid, the team from the Cleland Lab has designed a modular quantum processor built around a reconfigurable router that acts as a central hub. This enables any two qubits to connect and entangle, whereas in the older design, qubits can talk only to the qubits physically nearest to them.

“A quantum computer won’t necessarily compete with a classical computer in things like memory size or CPU size,” said UChicago PME Prof. Andrew Cleland. “Instead, they take advantage of a fundamentally different scaling: Doubling a classical computer’s computational power requires twice as big a CPU, or twice the clock speed. Doubling a quantum computer only requires one additional qubit.”
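One way to read the scaling Cleland describes: a general state of n qubits is described by 2^n complex amplitudes, so each added qubit doubles the size of the state space. The sketch below only counts amplitudes; the function name is illustrative, and state-space size is not the same thing as usable computational power.

```python
def state_space_dimension(num_qubits: int) -> int:
    """Number of complex amplitudes in a general pure state of num_qubits qubits."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return 2 ** num_qubits


if __name__ == "__main__":
    # Each extra qubit doubles the number of amplitudes.
    for n in range(1, 6):
        print(f"{n} qubit(s): {state_space_dimension(n)} amplitudes")
```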

MicroAlgo Inc. has announced the development of a quantum algorithm it claims significantly enhances the efficiency and accuracy of quantum computing operations. According to a company press release, this advance focuses on implementing a full adder operation, an essential arithmetic unit, using CPU registers in quantum gate computers.

The company says this achievement could open new pathways for the design and practical application of quantum gate computing systems. However, the company did not cite supporting research papers or third-party validations in the announcement.

Quantum gate computers operate by applying quantum gates to qubits, which are the basic units of quantum information. Unlike classical bits, which represent data as either “0” or “1,” qubits can exist in a superposition of both states, theoretically enabling quantum systems to process specific tasks more efficiently than classical computers. According to the press release, MicroAlgo’s innovation leverages quantum gates and the properties of qubits, including superposition and entanglement, to simulate and perform full adder operations.
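For readers unfamiliar with the idea, here is a minimal sketch of a textbook reversible full adder built from CNOT and Toffoli gates, simulated in plain Python. It is not MicroAlgo's algorithm, whose details the press release does not give. The qubit layout `(a, b, cin, cout)`, the gate order, and all function names are assumptions made for illustration.

```python
from math import sqrt

# Qubit positions (illustrative layout). After the circuit runs, the sum bit
# sits in the CIN position and the carry bit in the COUT position.
A, B, CIN, COUT = 0, 1, 2, 3


def cnot(state, control, target):
    """Flip `target` on every basis state where `control` is 1."""
    out = {}
    for bits, amp in state.items():
        new = list(bits)
        if new[control]:
            new[target] ^= 1
        out[tuple(new)] = out.get(tuple(new), 0) + amp
    return out


def toffoli(state, c1, c2, target):
    """Flip `target` on every basis state where both controls are 1."""
    out = {}
    for bits, amp in state.items():
        new = list(bits)
        if new[c1] and new[c2]:
            new[target] ^= 1
        out[tuple(new)] = out.get(tuple(new), 0) + amp
    return out


def full_adder(state):
    """Apply the adder to a state given as {(a, b, cin, cout): amplitude}."""
    state = toffoli(state, A, B, COUT)    # cout ^= a AND b
    state = cnot(state, A, B)             # b becomes a XOR b
    state = toffoli(state, B, CIN, COUT)  # cout ^= (a XOR b) AND cin
    state = cnot(state, B, CIN)           # cin becomes a XOR b XOR cin (the sum)
    state = cnot(state, A, B)             # restore b
    return state


if __name__ == "__main__":
    # Input a is in an equal superposition of 0 and 1, with b = 1 and cin = 1.
    amp = 1 / sqrt(2)
    superposed = {(0, 1, 1, 0): amp, (1, 1, 1, 0): amp}
    for bits, a in sorted(full_adder(superposed).items()):
        print(bits, round(a, 3))
```

Because the gates act linearly on each basis state, a superposed input yields a superposition of the corresponding sums, with the output bits correlated with the input `a`.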

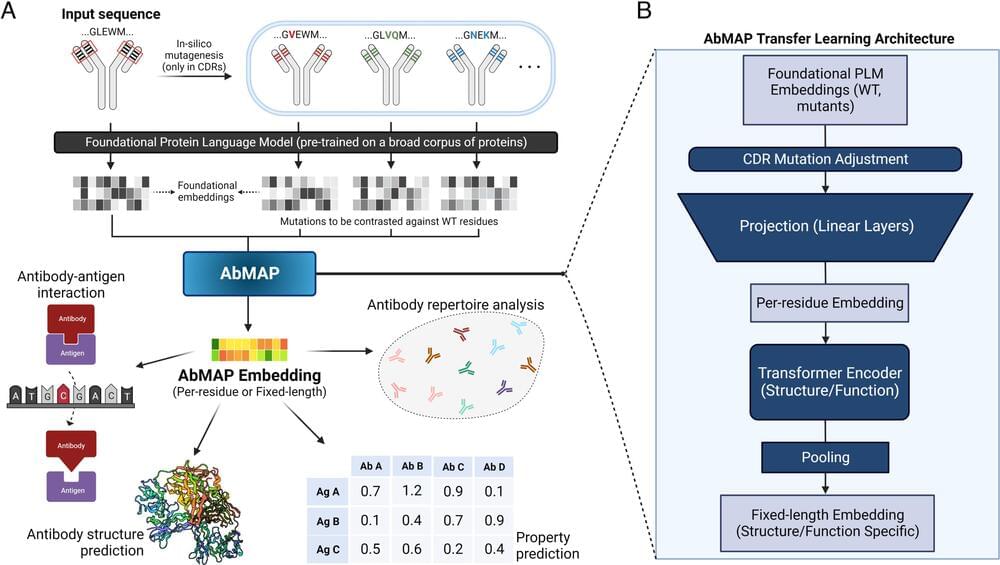

To overcome that limitation, MIT researchers have developed a computational technique that allows large language models to predict antibody structures more accurately. Their work could enable researchers to sift through millions of possible antibodies to identify those that could be used to treat SARS-CoV-2 and other infectious diseases.

The findings are published in the journal Proceedings of the National Academy of Sciences.

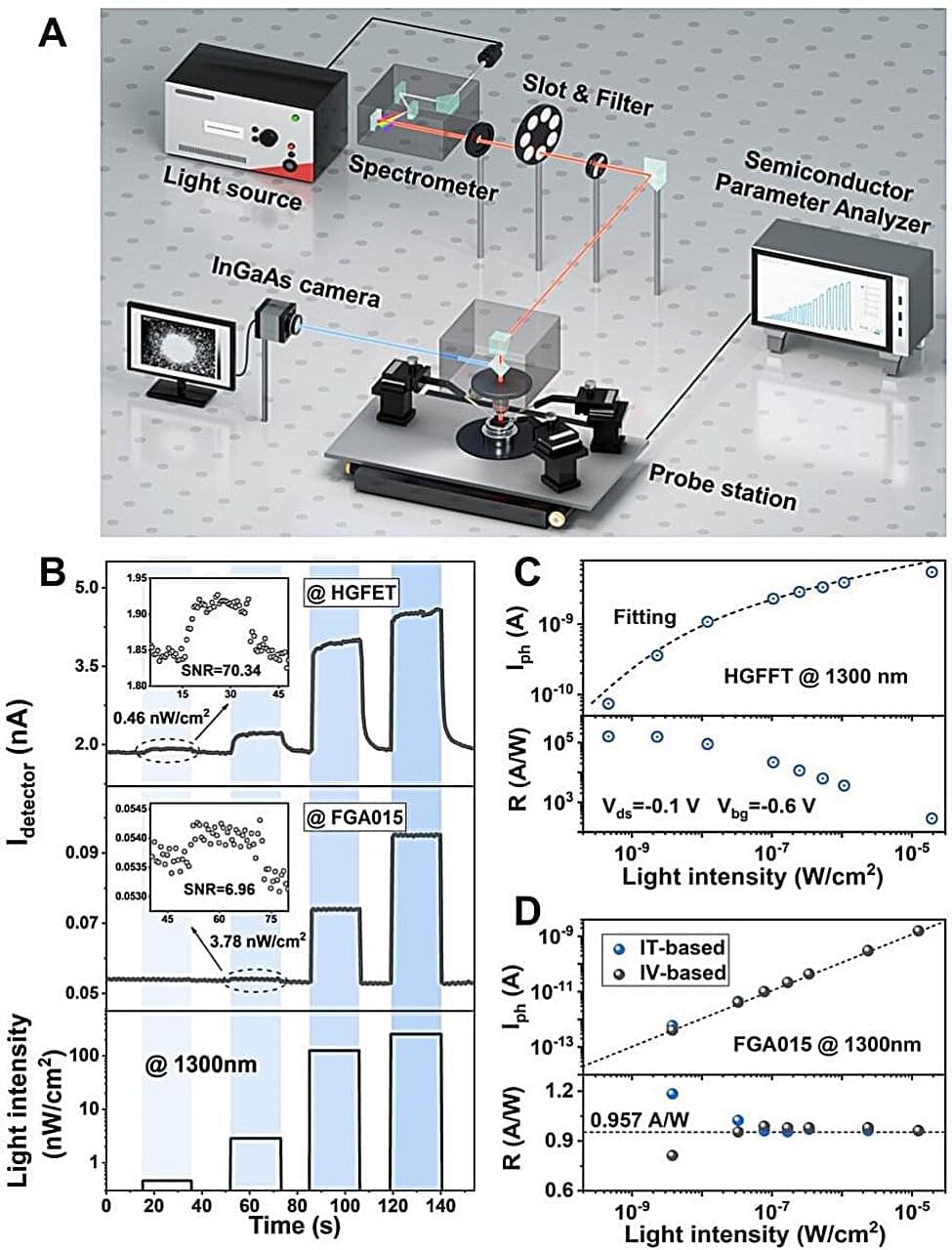

Prof. Zhang Zhiyong’s team at Peking University developed a heterojunction-gated field-effect transistor (HGFET) that achieves high sensitivity in short-wave infrared detection, with a recorded specific detectivity above 10¹⁴ Jones at 1,300 nm, making it capable of starlight detection. Their research was recently published in the journal Advanced Materials, titled “Opto-Electrical Decoupled Phototransistor for Starlight Detection.”

Highly sensitive shortwave infrared (SWIR) detectors are essential for detecting weak radiation (typically below 10⁻⁸ W·sr⁻¹·cm⁻²·µm⁻¹) with high-end passive image sensors. However, mainstream SWIR detection based on epitaxial photodiodes cannot effectively detect ultraweak infrared radiation due to the lack of inherent gain.

Filling this gap, researchers at the Peking University School of Electronics and collaborators have presented a heterojunction-gated field-effect transistor (HGFET) that achieves ultra-high photogain and exceptionally low noise in the short-wavelength infrared (SWIR) region, benefiting from a design that incorporates a comprehensive opto-electric decoupling mechanism.

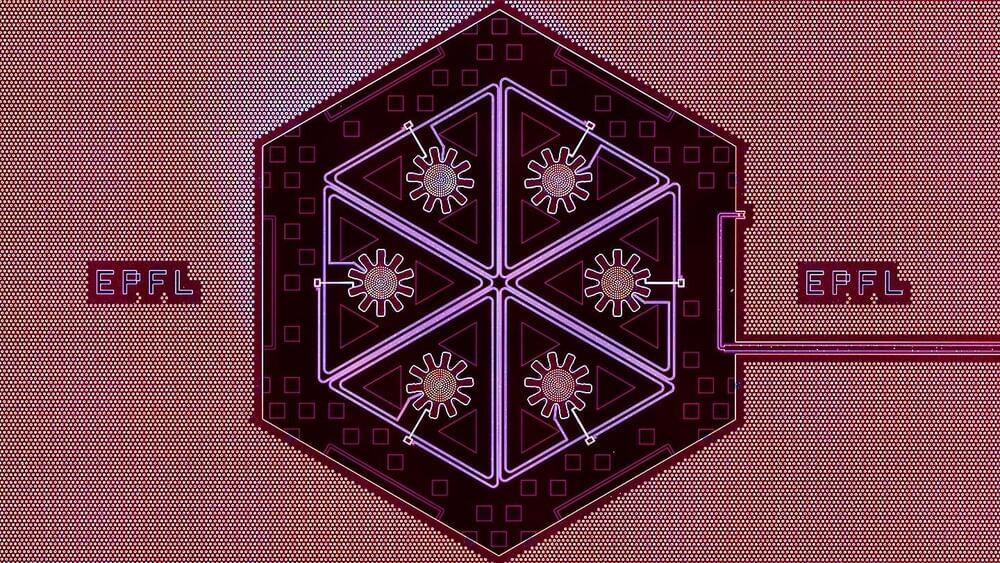

Scientists at EPFL achieved a breakthrough by synchronizing six mechanical oscillators into a collective quantum state, enabling observations of unique phenomena like quantum sideband asymmetry. This advance paves the way for innovations in quantum computing and sensing.

Quantum technologies are revolutionizing our understanding of the universe, and one promising area involves macroscopic mechanical oscillators. These devices, already integral to quartz watches, mobile phones, and telecommunications lasers, could play a transformative role in the quantum realm. At the quantum scale, macroscopic oscillators have the potential to enable ultra-sensitive sensors and advanced components for quantum computing, unlocking groundbreaking innovations across multiple industries.

Achieving control over mechanical oscillators at the quantum level is a critical step toward realizing these future technologies. However, managing them collectively poses significant challenges, as it demands nearly identical units with exceptional precision.

Quantum walks, leveraging quantum phenomena such as superposition and entanglement, offer remarkable computational capabilities beyond classical methods.

These versatile models excel in diverse tasks, from database searches to simulating complex quantum systems. With implementations spanning analog and digital methods, they promise innovations in fields like quantum computing, simulation, and graph theory.
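As a concrete illustration of the digital (discrete-time) flavor, the sketch below simulates a coined Hadamard walk on a line in plain Python and measures how far the walker spreads. The symmetric initial coin state and all function names are choices made here for illustration, not details taken from the article.

```python
from math import sqrt


def hadamard_walk(steps):
    """Return the position probabilities after `steps` steps.

    Index `steps` in the returned list is the starting position. The coin
    has two states: one moves the walker left, the other moves it right.
    """
    size = 2 * steps + 1
    left = [0j] * size
    right = [0j] * size
    # Symmetric initial coin state (|left> + i|right>) / sqrt(2) at the origin.
    left[steps] = 1 / sqrt(2)
    right[steps] = 1j / sqrt(2)
    for _ in range(steps):
        new_left = [0j] * size
        new_right = [0j] * size
        for x in range(size):
            # Hadamard coin flip, then shift conditioned on the coin state.
            l = (left[x] + right[x]) / sqrt(2)
            r = (left[x] - right[x]) / sqrt(2)
            if l:
                new_left[x - 1] += l
            if r:
                new_right[x + 1] += r
        left, right = new_left, new_right
    return [abs(l) ** 2 + abs(r) ** 2 for l, r in zip(left, right)]


def spread(probabilities):
    """Standard deviation of position, measured from the center index."""
    center = len(probabilities) // 2
    mean = sum((x - center) * p for x, p in enumerate(probabilities))
    second = sum((x - center) ** 2 * p for x, p in enumerate(probabilities))
    return sqrt(second - mean ** 2)


if __name__ == "__main__":
    steps = 50
    probs = hadamard_walk(steps)
    print("quantum walk spread:", round(spread(probs), 2))
    print("classical random walk spread:", round(sqrt(steps), 2))
```

The quantum walker's spread grows roughly linearly with the number of steps, whereas an unbiased classical random walk spreads as the square root of the step count. That faster spreading is one source of the speedups associated with quantum walks.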

Harnessing Quantum Phenomena for Computation.

It’s time to stop doubting quantum information technology.

Are we there yet? No. Not by a long shot. But the progress on a number of key challenges, the sheer number of organizations fighting to succeed (and make a buck), the no-turning-back public investment, and nasty international rivalry are all good guarantors.

It feels like quantum computing is turning an important corner, maybe not the corner leading to the home stretch, but likely the corner past the point of no return. We now have quantum computers able to perform tasks beyond the reach of classical systems. Google’s latest breakthrough benchmark demonstrated that. These aren’t error-corrected machines yet, but progress in error correction is one of 2024’s highlights.

The “expansion protocol” would be a lot more invasive than you’d enjoy.

Background and objectives: Aging clocks are computational models designed to measure biological age and aging rate based on age-related markers including epigenetic, proteomic, and immunomic changes, gut and skin microbiota, among others. In this narrative review, we aim to discuss the currently available aging clocks, ranging from epigenetic aging clocks to visual skin aging clocks.

Methods: We performed a literature search on PubMed/MEDLINE databases with keywords including: “aging clock,” “aging,” “biological age,” “chronological age,” “epigenetic,” “proteomic,” “microbiome,” “telomere,” “metabolic,” “inflammation,” “glycomic,” “lifestyle,” “nutrition,” “diet,” “exercise,” “psychosocial,” and “technology.”

Results: Notably, several CpG regions, plasma proteins, inflammatory and immune biomarkers, microbiome shifts, neuroimaging changes, and visual skin aging parameters demonstrated roles in aging and aging clock predictions. Further analysis of the most predictive CpGs and biomarkers is warranted. Limitations of aging clocks include technical noise, which may be corrected with additional statistical techniques, and the limited diversity and applicability of the samples used.