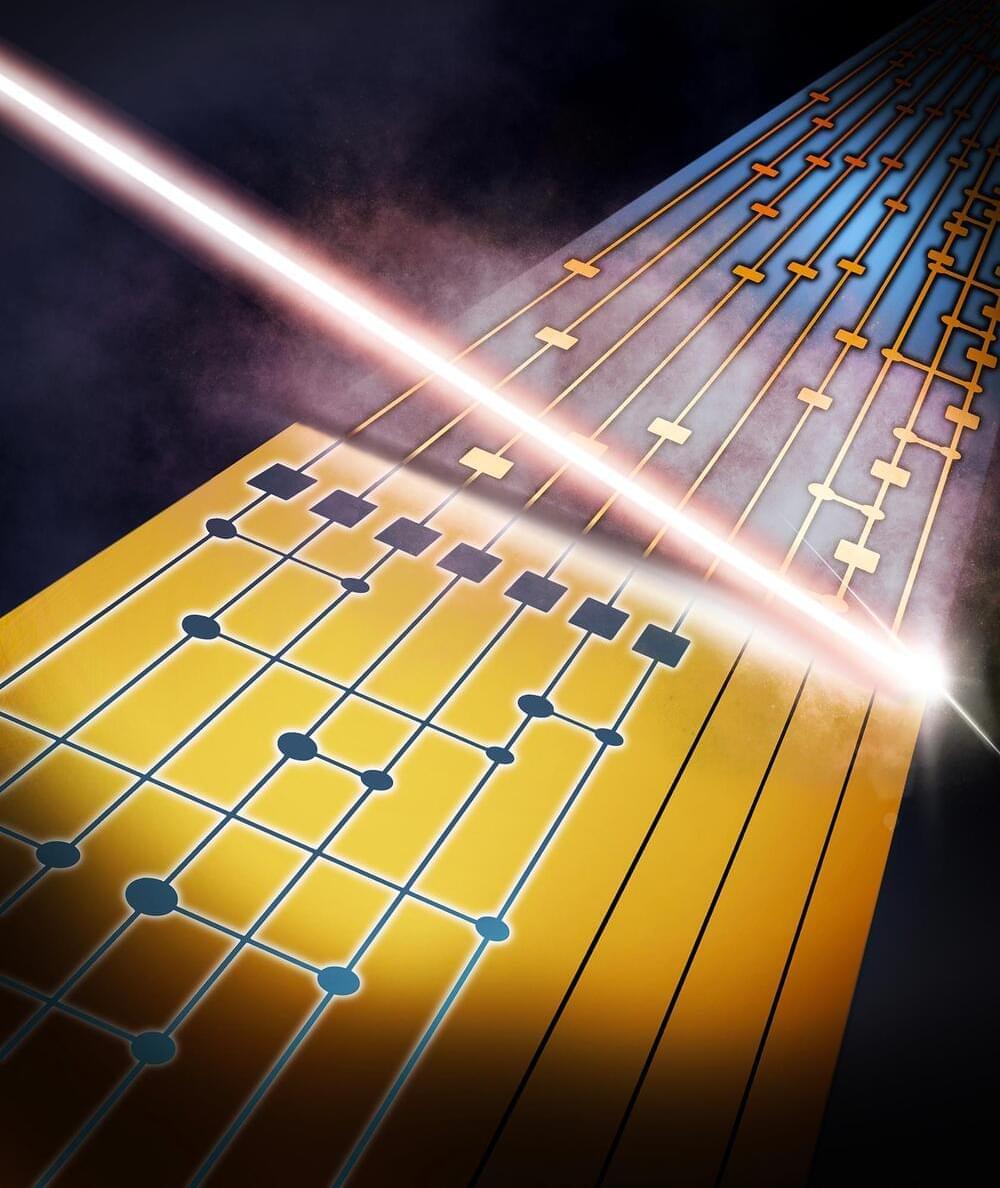

A team of physicists has introduced an error-correction method for quantum computers that lets them switch between error correction codes on the fly, so that complex computations can be carried out more effectively and with fewer errors.

Error Correction in Quantum Computing

Computers can make mistakes, but in classical systems these errors are usually detected and corrected, typically by storing redundant copies of the data and comparing them. Quantum computers face a unique challenge: quantum states cannot be copied, a restriction known as the no-cloning theorem. As a result, errors cannot be identified by comparing multiple saved copies, as is done in classical computing.
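
To make the classical approach concrete, here is a minimal sketch of comparing saved copies: a three-copy repetition code decoded by majority vote. It only illustrates the classical idea that quantum computers cannot use directly, and it is not the method developed by the physicists. The function names `encode`, `flip`, and `decode` are hypothetical and chosen for this example.

```python
from collections import Counter


def encode(bit, copies=3):
    """Store the same classical bit several times (a repetition code)."""
    return [bit] * copies


def flip(codeword, position):
    """Simulate an error by flipping the bit at one position."""
    corrupted = list(codeword)
    corrupted[position] ^= 1
    return corrupted


def decode(codeword):
    """Compare the copies and return the majority value."""
    return Counter(codeword).most_common(1)[0][0]


stored = encode(1)          # three identical copies of the bit 1
damaged = flip(stored, 0)   # one copy is corrupted
recovered = decode(damaged) # the two intact copies outvote the bad one
print(stored, damaged, recovered)
```

With three copies, any single flipped bit is outvoted by the two intact ones. This scheme depends on being able to duplicate the stored value freely, which is exactly what is not possible for an unknown quantum state.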