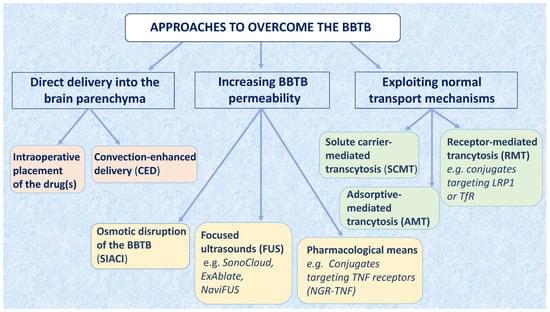

Tumors affecting the central nervous system (CNS), whether primary or secondary, are highly prevalent and represent an unmet medical need. Their prognosis remains poor, mostly because of the low intrinsic chemo- and radiosensitivity of tumor cells, the poorly understood role of the tumor microenvironment, and the poor CNS bioavailability of most commonly used anticancer agents. The blood-brain tumor barrier (BBTB) is the main obstacle preventing anticancer drugs from reaching therapeutic concentrations in tumor tissue. Over the last decades, considerable effort has been devoted to identifying strategies that increase drug delivery into brain tumors. Until recently, success has been modest: few of these approaches reached clinical testing, and even fewer gained regulatory approval.