Restoring And Extending The Capabilities Of The Human Brain — Dr. Behnaam Aazhang, Ph.D. — Director, Rice Neuroengineering Initiative, Rice University

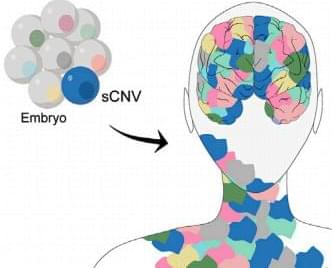

Dr. Behnaam Aazhang, Ph.D. (https://aaz.rice.edu/) is the J.S. Abercrombie Professor of Electrical and Computer Engineering and Director of the Rice Neuroengineering Initiative (NEI, https://neuroengineering.rice.edu/) at Rice University. His research interests include signal and data processing, information theory, dynamical systems, and their applications to neuroengineering, with focus areas in (i) understanding neuronal circuit connectivity and the impact of learning on connectivity, (ii) developing minimally invasive and non-invasive real-time closed-loop stimulation of neuronal systems to mitigate disorders such as epilepsy, Parkinson's disease, depression, obesity, and mild traumatic brain injury, (iii) developing a patient-specific multisite wireless monitoring and pacing system with temporal and spatial precision to restore the healthy function of a diseased heart, and (iv) developing algorithms to detect, predict, and prevent security breaches in cloud computing and storage systems.

Dr. Aazhang received his B.S. (with highest honors), M.S., and Ph.D. degrees in Electrical and Computer Engineering from the University of Illinois at Urbana-Champaign in 1981, 1983, and 1986, respectively. From 1981 to 1985, he was a Research Assistant in the Coordinated Science Laboratory, University of Illinois. In August 1985, he joined the faculty of Rice University. From 2006 to 2014, he held an Academy of Finland Distinguished Visiting Professorship appointment (FiDiPro) at the University of Oulu, Oulu, Finland.

Dr. Aazhang is a Fellow of IEEE and AAAS, and a distinguished lecturer of the IEEE Communications Society.

Dr. Aazhang received an Honorary Doctorate degree from the University of Oulu, Finland (the highest honor that the university can bestow) in 2017 and the IEEE ComSoc CTTC Outstanding Service Award “For innovative leadership that elevated the success of the Communication Theory Workshop” in 2016. He is a recipient of the 2004 IEEE Communications Society Stephen O. Rice Best Paper Award for a paper with A. Sendonaris and E. Erkip. In addition, Sendonaris, Erkip, and Aazhang received the IEEE Communications Society's 2013 Advances in Communication Award for the same paper. He has been listed in the Thomson-ISI Highly Cited Researchers and has been a keynote and plenary speaker at several conferences.