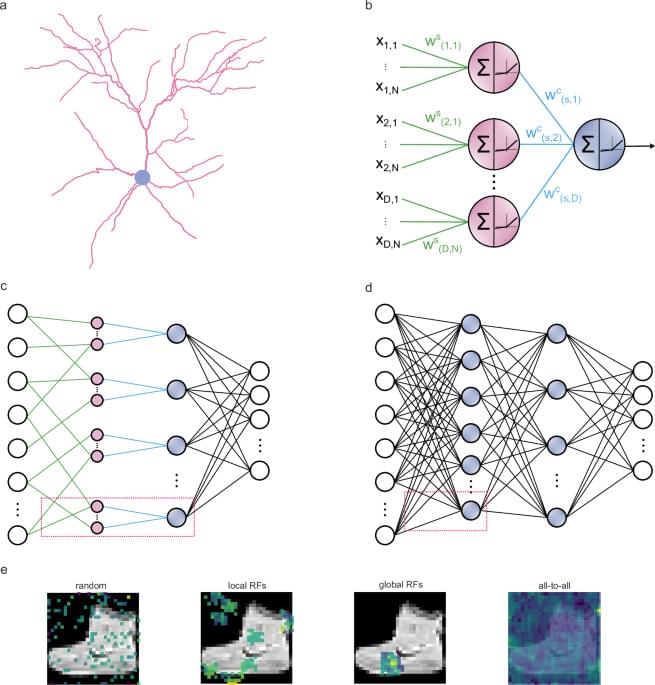

We report the use of a multi-agent generative artificial intelligence framework, the X-LoRA-Gemma large language model (LLM), to analyze, design, and test molecules. The X-LoRA-Gemma model, inspired by biological principles and featuring ~7 billion parameters, dynamically reconfigures its structure through a dual-pass inference strategy to enhance its problem-solving abilities across diverse scientific domains. The model is first used to identify molecular engineering targets through a systematic human-AI and AI-AI self-driving multi-agent approach, which elucidates key targets for molecular optimization aimed at improving interactions between molecules. Next, a multi-agent generative design process is used that includes rational steps, reasoning, and autonomous knowledge extraction. Target properties of the molecule are identified either by a Principal Component Analysis (PCA) of key molecular properties or by sampling from the distribution of known molecular properties. The model is then used to generate a large set of candidate molecules, which are analyzed via their molecular structure, charge distribution, and other features. We validate that, as predicted, increased dipole moment and polarizability are indeed achieved in the designed molecules. We anticipate an increasing integration of these techniques into the molecular engineering workflow, ultimately enabling the development of innovative solutions to a wide range of societal challenges. We conclude with a critical discussion of the challenges and opportunities of using multi-agent generative AI for molecular engineering, analysis, and design.
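The target-selection step summarized above (a PCA of key molecular properties, or sampling from the distribution of known properties) can be illustrated with a short NumPy sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `pca_target` and `sample_targets`, the rule of moving along the first principal component to a chosen quantile, the sign convention for that component, and the synthetic two-column property matrix (standing in for dipole moment and polarizability) are all hypothetical.

```python
import numpy as np

def pca_target(props: np.ndarray, quantile: float = 0.9) -> np.ndarray:
    """Hypothetical sketch: pick a target property vector along the first
    principal component (PC1) of a standardized property matrix."""
    mean = props.mean(axis=0)
    std = props.std(axis=0)
    z = (props - mean) / std                      # standardize each property column
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    pc1 = vt[0]                                   # unit-norm first principal axis
    if pc1.sum() < 0:                             # PC sign is arbitrary; assumed convention:
        pc1 = -pc1                                # orient PC1 so higher scores raise the properties
    scores = z @ pc1                              # each molecule's projection onto PC1
    target_score = np.quantile(scores, quantile)  # e.g. the 90th-percentile PC1 score
    return mean + target_score * pc1 * std        # map the PC1 point back to original units

def sample_targets(props: np.ndarray, n: int, rng: np.random.Generator) -> np.ndarray:
    """Alternative: draw n target vectors (whole rows) from the known property distribution."""
    idx = rng.integers(0, len(props), size=n)
    return props[idx]

# Synthetic, hypothetical data: column 0 ~ dipole moment, column 1 ~ polarizability.
rng = np.random.default_rng(0)
dipole = rng.normal(2.0, 1.0, size=200)
polar = 30.0 + 3.0 * (dipole - 2.0) + rng.normal(0.0, 1.0, size=200)
props = np.column_stack([dipole, polar])

target = pca_target(props)
samples = sample_targets(props, n=5, rng=rng)
print("PCA-based target:", target)
print("sampled targets:\n", samples)
```

The PCA route yields a single target that pushes correlated properties upward together, whereas sampling keeps targets within the empirically observed distribution; in practice one would check which direction of PC1 actually corresponds to the desired property change rather than rely on the sign convention assumed here.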